Hand-eye coordinate conversion method for a visual positioning robot

A hand-eye coordinate conversion technique for robots, applied in the field of coordinate transformation. It addresses problems such as insufficient precision, errors of inconsistent size in different directions, and irregular errors, and it achieves strong robustness.


Embodiment Construction

[0043] In this embodiment, the hand-eye coordinate conversion method of the visual positioning robot is as follows:

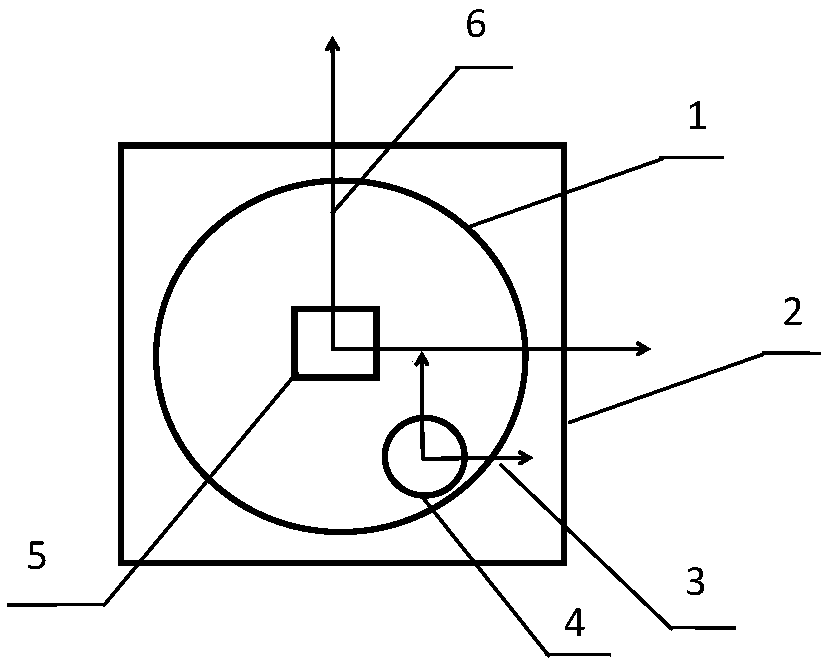

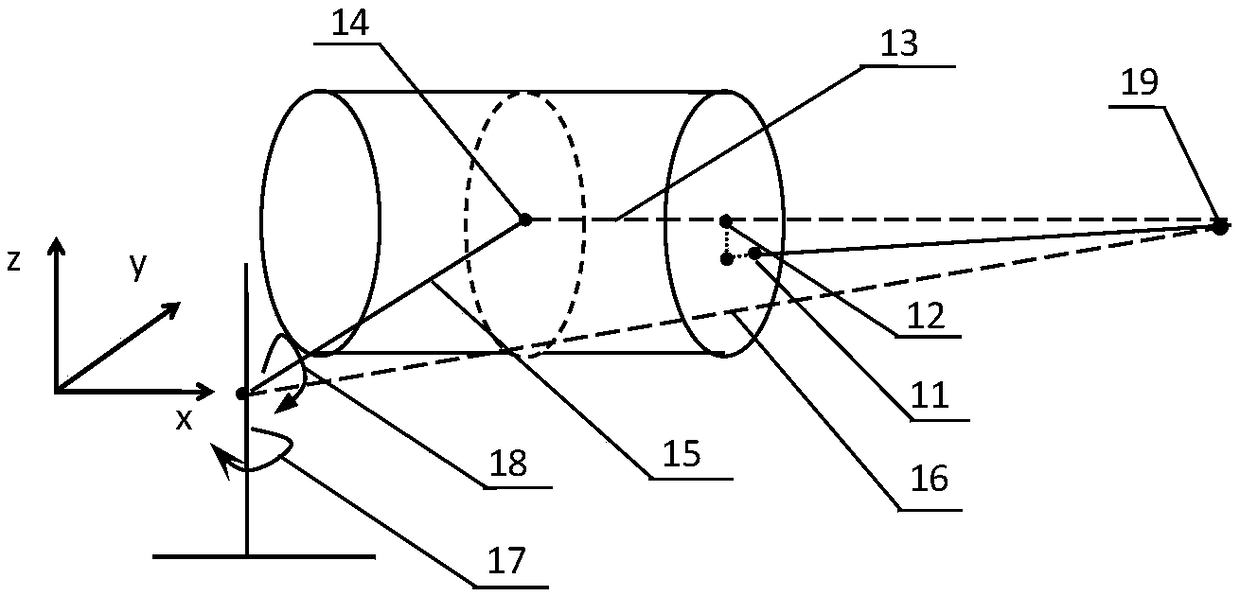

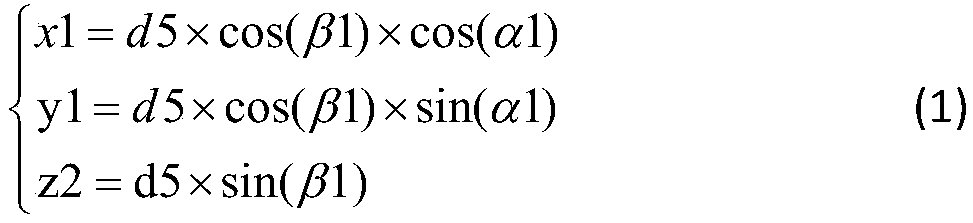

[0044] Referring to Figure 1, the rotary platform 1 of the mechanical arm chassis is located in the central area 5 of the fixed support device 2. The visual sensor platform 4 is mounted on the rotary platform 1 and rotates together with it. The visual sensor platform 4 itself has two degrees of freedom, horizontal rotation and vertical rotation, forming a biaxial rotation structure with a horizontal rotation shaft and a vertical rotation shaft. A visual sensor is mounted on the visual sensor platform 4 to obtain detection data. The visual sensor includes a laser distance sensor used to detect the straight-line distance d from the target to the visual sensor, a horizontal angle sensor used to detect the horizontal rotation angle α of the visual sensor, and a vertical angle sensor used to detect the ...
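To make the measured quantities concrete, the following is a minimal sketch of how a range reading d and two rotation angles could be turned into a Cartesian point in the sensor's own frame. It is not the patent's conversion method, which the text available here does not show. Several things are assumptions made only for illustration: the name `beta` for the vertical rotation angle, the axis conventions (α measured from the x axis in the horizontal plane, the vertical angle measured upward from that plane), and the neglect of any offset between the two rotation shafts or of the rotary platform's own rotation.

```python
import math


def sensor_to_cartesian(d, alpha_deg, beta_deg):
    """Convert one sensor reading to (x, y, z) in the sensor frame.

    d         -- straight-line distance from the sensor to the target
    alpha_deg -- horizontal rotation angle, in degrees (alpha in the text)
    beta_deg  -- vertical rotation angle, in degrees (hypothetical name;
                 assumed to be the elevation above the horizontal plane)
    """
    alpha = math.radians(alpha_deg)
    beta = math.radians(beta_deg)

    # Projection of the laser ray onto the horizontal plane.
    horizontal = d * math.cos(beta)

    x = horizontal * math.cos(alpha)
    y = horizontal * math.sin(alpha)
    z = d * math.sin(beta)
    return x, y, z


if __name__ == "__main__":
    # Example reading: 2 units away, 30 degrees horizontal, 10 degrees vertical.
    print(sensor_to_cartesian(2.0, 30.0, 10.0))
```

Under these assumptions the length of the returned vector always equals d, which gives a quick sanity check on any reading. Expressing the point in the robot's coordinate system would still require a further transformation that depends on the actual mounting geometry.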
