A static gesture recognition method based on a multi-scale convolutional neural network

A convolutional neural network and gesture recognition technology, applied to static gesture recognition with a multi-scale convolutional neural network. It addresses problems such as strong sensitivity to the external environment, insufficiently fine feature extraction, and poor stability, and it achieves the effects of reduced consumption and dimensionality reduction.

Embodiment 1

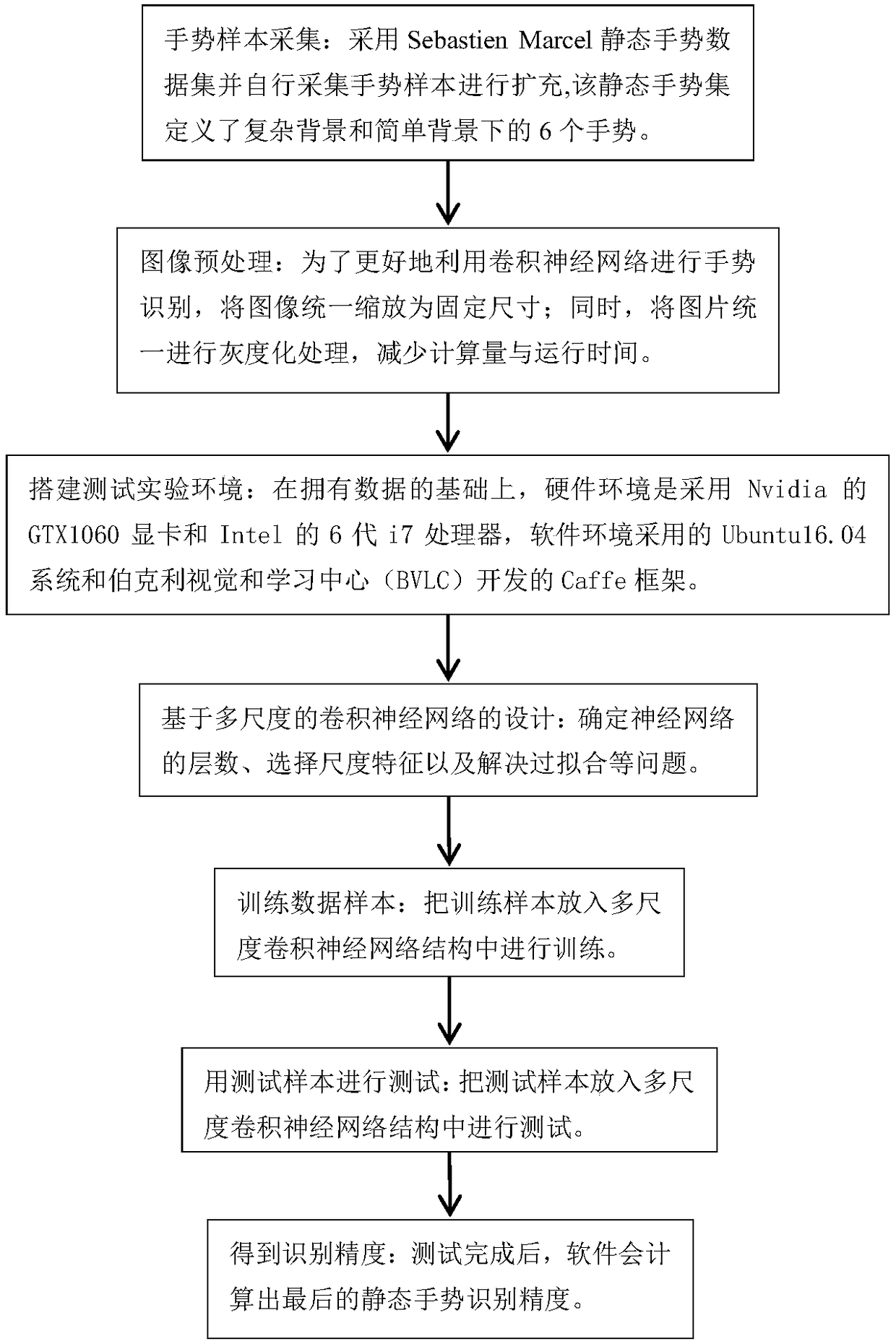

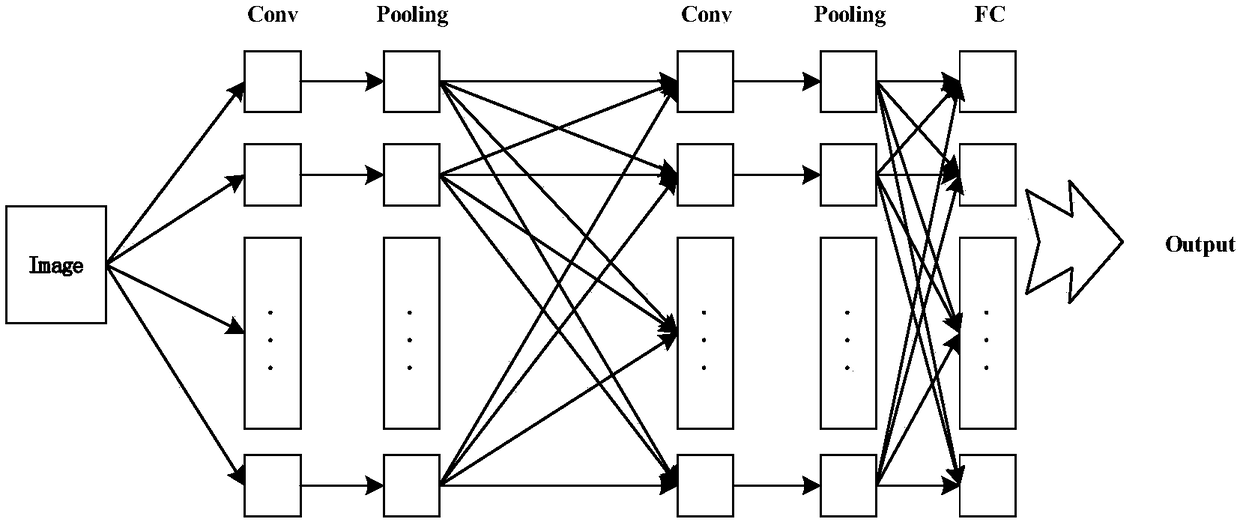

[0077] Embodiment 1 first collects and preprocesses static gesture image data captured against both simple and complex backgrounds, and divides the data into training data and test data. After the data is obtained, the experimental test environment is built; it consists of a hardware environment and a software environment. The hardware environment uses an Nvidia GTX 1060 graphics card and a 6th-generation Intel i7 processor, and the software environment uses Ubuntu 16.04 and the Caffe framework developed by the Berkeley Vision and Learning Center (BVLC). Next, the multi-scale convolutional neural network is designed, that is, the number of network layers is determined, appropriate scale features are selected, and so on. The labeled training data is then fed into this network structure for learning. Finally, test data samples are input for testing to obtain the final static gesture recognition accuracy, which is compared with the experimental accuracy obtained by the convolutional neural network framework under ...
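The data split and the final accuracy measurement described in this embodiment can be sketched as follows. This is only a minimal illustration in plain Python, not the Caffe pipeline used in the embodiment. The function names `split_by_class` and `accuracy`, the 20% test fraction, the file paths, and the three gesture classes are all assumptions made for the example; the source does not state the split ratio or the number of classes.

```python
import random
from collections import defaultdict


def split_by_class(samples, test_fraction=0.2, seed=0):
    """Split (path, label) pairs into training and test lists, class by class.

    Splitting per class keeps every gesture represented in both sets.
    The 0.2 default is an assumption, not a value taken from the source.
    """
    by_label = defaultdict(list)
    for path, label in samples:
        by_label[label].append((path, label))

    rng = random.Random(seed)  # fixed seed so the split is reproducible
    train, test = [], []
    for label in sorted(by_label):
        items = by_label[label]
        rng.shuffle(items)
        # Hold out at least one sample per class for testing.
        n_test = max(1, round(len(items) * test_fraction))
        test.extend(items[:n_test])
        train.extend(items[n_test:])
    return train, test


def accuracy(predicted, expected):
    """Fraction of test samples whose predicted label equals the true label."""
    if len(predicted) != len(expected):
        raise ValueError("predicted and expected must have the same length")
    if not expected:
        raise ValueError("cannot compute accuracy on an empty test set")
    correct = sum(p == e for p, e in zip(predicted, expected))
    return correct / len(expected)


# Hypothetical dataset: 3 gesture classes, each with 5 simple-background
# and 5 complex-background images (paths are placeholders).
samples = [
    (f"{background}/gesture_{gesture}/{index:03d}.png", gesture)
    for gesture in range(3)
    for background in ("simple", "complex")
    for index in range(5)
]
train, test = split_by_class(samples, test_fraction=0.2)
```

In practice the predicted labels passed to `accuracy` would come from the trained network's output on the test samples; the same function can then be applied to a baseline network's predictions to make the comparison the embodiment describes.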
