A monocular static gesture recognition method based on multi-feature fusion

A multi-feature fusion gesture recognition technology, applied in the field of image recognition. It addresses the problems of low recognition accuracy, reliance on a single gesture feature, and limited adoption, and achieves high recognition accuracy, low equipment cost, and easy promotion and application.


Embodiment

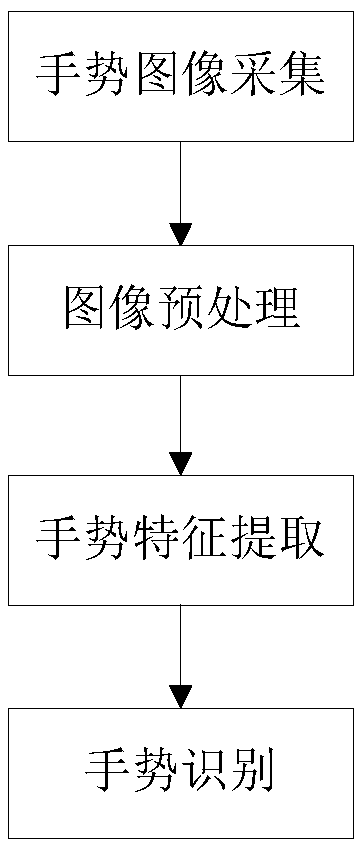

[0071] As shown in Figure 1, the monocular static gesture recognition method based on multi-feature fusion proceeds through four steps: gesture image acquisition, image preprocessing, gesture feature extraction, and gesture recognition.

[0072] S1, gesture image acquisition step:

[0073] Use a monocular camera to collect RGB images containing gestures. The camera should be positioned directly in front of the person. In the collected images, the face and the hand are the two largest of all skin-colored and skin-like regions.
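The assumption that the face and the hand are the two largest skin-like regions suggests a simple selection rule once a binary skin mask is available. The following is a minimal sketch of that rule, not the method's own implementation: the function name `two_largest_regions`, the list-of-lists mask format, and the choice of 4-connectivity are all assumptions made for illustration.

```python
from collections import deque


def two_largest_regions(mask):
    """Return the two largest 4-connected regions of truthy pixels.

    `mask` is a list of rows (hypothetical format); each region is a
    list of (row, col) tuples. Regions are returned largest first.
    """
    rows = len(mask)
    cols = len(mask[0]) if rows else 0
    seen = [[False] * cols for _ in range(rows)]
    regions = []

    for r in range(rows):
        for c in range(cols):
            if not mask[r][c] or seen[r][c]:
                continue
            # Breadth-first flood fill from an unvisited skin pixel.
            queue = deque([(r, c)])
            seen[r][c] = True
            region = []
            while queue:
                y, x = queue.popleft()
                region.append((y, x))
                for ny, nx in ((y - 1, x), (y + 1, x), (y, x - 1), (y, x + 1)):
                    if (0 <= ny < rows and 0 <= nx < cols
                            and mask[ny][nx] and not seen[ny][nx]):
                        seen[ny][nx] = True
                        queue.append((ny, nx))
            regions.append(region)

    # Keep only the two largest candidates (assumed to be face and hand).
    regions.sort(key=len, reverse=True)
    return regions[:2]
```

Telling the face apart from the hand within the two returned regions is a separate decision that this sketch does not attempt.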

[0074] S2, image preprocessing step:

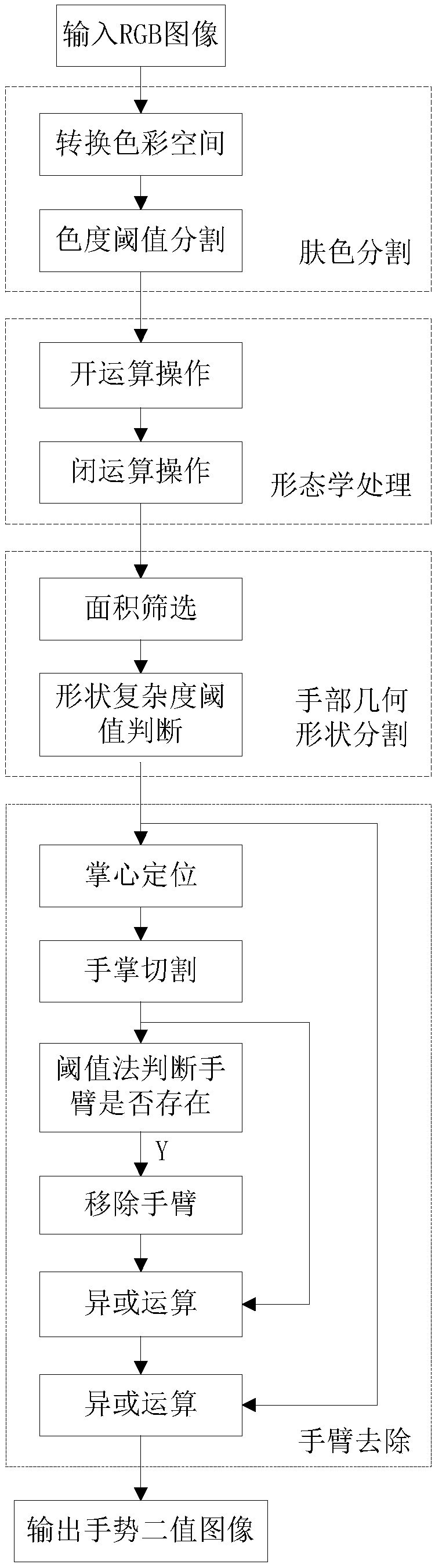

[0075] As shown in Figure 2, the image preprocessing steps are as follows:

[0076] S201, skin color segmentation. The specific process is as follows:

[0077] S2011, color space conversion: convert the input image from the RGB color space to the YCr'Cb' color space. The conversion formula is as follows:

[0078] y=0.299×r+0.587×g+0.1...
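For orientation, the conventional full-range BT.601 (JFIF) RGB-to-YCbCr transform can be sketched as below. This is the standard conversion only; it is not the modified YCr'Cb' space the method defines, whose chroma terms may differ. The function name `rgb_to_ycbcr` and the 0 to 255 input range are assumptions.

```python
def rgb_to_ycbcr(r, g, b):
    """Convert one RGB pixel (components in 0..255) to standard YCbCr.

    Uses the full-range BT.601 / JFIF coefficients. This is the
    conventional transform, NOT the primed Cr'/Cb' variant of the method.
    """
    y = 0.299 * r + 0.587 * g + 0.114 * b
    cb = 128.0 - 0.168736 * r - 0.331264 * g + 0.5 * b
    cr = 128.0 + 0.5 * r - 0.418688 * g - 0.081312 * b
    return y, cb, cr
```

Neutral pixels such as gray, white, and black map to Cb = Cr = 128 under this transform, which is why skin segmentation is usually thresholded on the chroma channels rather than on luminance.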
