A method for generating facial expressions by partitioning human facial expressions into expression elements

A facial expression and movement technology in the field of artificial intelligence interaction, addressing problems such as those that limit the interactive performance of humanoid robots.

Examples

Embodiment 1

[0054] Taking smiling while saying "Hello" as an example, the specific steps of the method for generating human facial expressions based on partitioned expression elements are as follows:

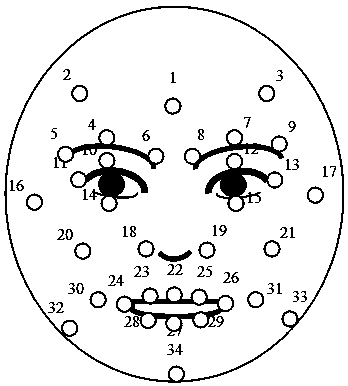

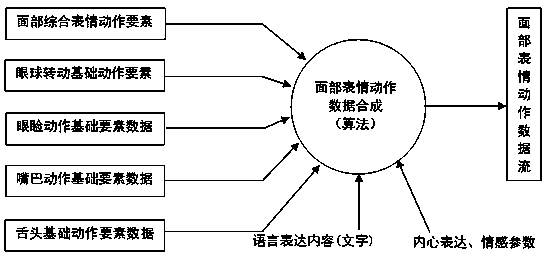

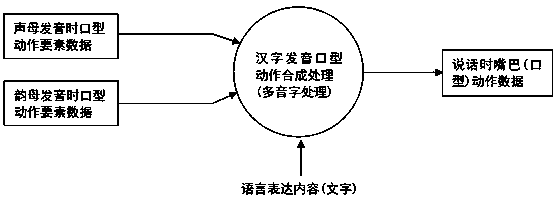

[0055] Step 1: Collect movement data for each part of the facial expressions of multiple subjects, and build a comprehensive facial expression action element database through sorting, feature analysis, evaluation, and element extraction. The database is organized according to the muscle groups of the eyes, face, and mouth that drive human facial expression actions; 34 marker points are placed at the positions shown in Figure 1 to collect comprehensive facial expression and movement data.
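The partition into eye, face, and mouth regions can be represented as a simple lookup from region name to marker indices. The sketch below is an illustration only: Figure 1 is not reproduced here, so the index ranges in the hypothetical `REGION_MARKERS` table are placeholders, and `check_partition` and `region_of` are illustrative helper names, not part of the described method.

```python
from typing import Dict, List

NUM_MARKERS = 34  # number of facial marker points stated in the text

# HYPOTHETICAL assignment of marker indices 0..33 to the three regions.
# The real positions come from Figure 1; these ranges are placeholders.
REGION_MARKERS: Dict[str, List[int]] = {
    "eyes": list(range(0, 10)),    # assumed: brows, lids, eye corners
    "face": list(range(10, 22)),   # assumed: cheeks, nose, forehead
    "mouth": list(range(22, 34)),  # assumed: lips, mouth corners, chin
}


def check_partition(regions: Dict[str, List[int]], num_markers: int = NUM_MARKERS) -> bool:
    """Return True if every marker index belongs to exactly one region."""
    seen = [idx for indices in regions.values() for idx in indices]
    return sorted(seen) == list(range(num_markers))


def region_of(marker: int, regions: Dict[str, List[int]] = REGION_MARKERS) -> str:
    """Return the name of the region that contains the given marker index."""
    for name, indices in regions.items():
        if marker in indices:
            return name
    raise KeyError(f"marker {marker} is not assigned to any region")
```

Checking that the partition is exhaustive and non-overlapping matters because each captured marker should contribute to exactly one region's action elements.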

[0056] Specifically, a high-precision 3D facial motion capture system is used to collect motion data for each part of the facial expressions of multiple subjects. The collection procedure is as follows: first, collect the 3D coordinates A of the 34 marker points on the face of a single subject in ...
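The paragraph is truncated after the reference coordinates A are introduced, so the following is only a sketch under a stated assumption: A is treated as a reference frame (for example, a neutral face), each captured expression frame is compared with it marker by marker, and a region's element is taken as the displacement averaged over subjects. The function names `displacements` and `region_element` and the averaging step are hypothetical choices, not the procedure of the source.

```python
from typing import List, Sequence, Tuple

Point = Tuple[float, float, float]


def displacements(reference: Sequence[Point], frame: Sequence[Point]) -> List[Point]:
    """Per-marker displacement of a captured frame relative to reference coordinates A.

    ASSUMPTION: A is a reference (e.g. neutral) frame; the source text is truncated.
    """
    if len(reference) != len(frame):
        raise ValueError("reference and frame must have the same number of markers")
    return [(fx - rx, fy - ry, fz - rz)
            for (rx, ry, rz), (fx, fy, fz) in zip(reference, frame)]


def region_element(
    subjects: Sequence[Tuple[Sequence[Point], Sequence[Point]]],
    marker_indices: Sequence[int],
) -> List[Point]:
    """Average one region's marker displacements over several subjects.

    Each subject contributes a (reference A, expression frame) pair. The result holds
    one mean displacement vector per marker in the region (a HYPOTHETICAL "element").
    """
    if not subjects:
        raise ValueError("need at least one subject")
    sums = [[0.0, 0.0, 0.0] for _ in marker_indices]
    for reference, frame in subjects:
        d = displacements(reference, frame)
        for k, idx in enumerate(marker_indices):
            for axis in range(3):
                sums[k][axis] += d[idx][axis]
    n = len(subjects)
    return [(s[0] / n, s[1] / n, s[2] / n) for s in sums]
```

Working with displacements rather than raw coordinates removes differences in head size and marker placement between subjects, which is one plausible reason to record a reference frame first.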

Embodiment 2

[0078] Taking disgust with eye-rolling while saying "Ah" as an example, the specific steps of the method for generating human facial expressions based on partitioning and factorizing facial expressions are as follows:
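This embodiment combines three actions that fall naturally into different regions: rolling the eyes (eyes), disgust (face), and the "Ah" mouth shape (mouth). A minimal sketch of region-wise composition follows, assuming each selected element is stored as a map from marker index to displacement and that markers without a selected element stay at zero displacement. `compose_expression` and this data layout are hypothetical, not the source's method.

```python
from typing import Dict, List, Sequence, Tuple

Point = Tuple[float, float, float]


def compose_expression(
    num_markers: int,
    regions: Dict[str, Sequence[int]],
    elements: Dict[str, Dict[int, Point]],
) -> List[Point]:
    """Assemble a full-face displacement frame from one selected element per region.

    `elements` maps a region name to {marker index: displacement}. Markers not
    covered by any selected element keep zero displacement (ASSUMED neutral).
    """
    frame: List[Point] = [(0.0, 0.0, 0.0)] * num_markers
    for region, element in elements.items():
        allowed = set(regions[region])
        for idx, disp in element.items():
            # Reject elements that would move markers outside their own region.
            if idx not in allowed:
                raise ValueError(f"marker {idx} does not belong to region {region!r}")
            frame[idx] = disp
    return frame
```

For this embodiment, one would call it with hypothetical elements such as `{"eyes": eye_roll, "face": disgust, "mouth": ah}`. Because the regions do not overlap, each element can be chosen independently without one overwriting another.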

[0079] Step 1: Collect movement data for each part of the facial expressions of multiple subjects, and build a comprehensive facial expression action element database through sorting, feature analysis, evaluation, and element extraction. The database is organized according to the muscle groups of the eyes, face, and mouth that drive human facial expression actions; 34 marker points are placed at the positions shown in Figure 1 to collect comprehensive facial expression and movement data.

[0080] Specifically, a high-precision 3D facial motion capture system is used to collect motion data for each part of the facial expressions of multiple subjects. The collection procedure is as follows: first, collect the 3D coordinates A of 34 marker p...
