Cascaded convolutional neural network-based method for quick detection of robot grasping poses for irregular objects

This convolutional neural network-based detection method applies to robot vision detection and grasping control. It addresses the problem that convolutional neural networks have not been well applied to robot grasping-pose detection. It also overcomes factors that interfere with grasping-pose detection and improves both real-time performance and grasp-detection accuracy.

Embodiment

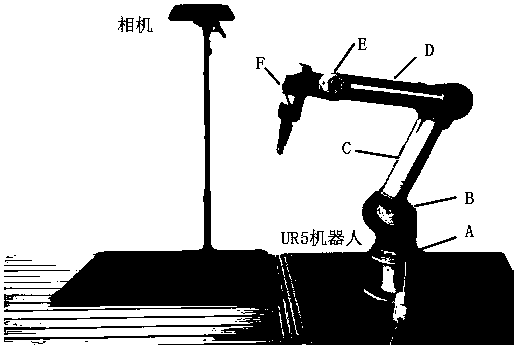

[0041] As shown in Figure 1, the embodiment uses a low-cost, top-down color camera (resolution …) and a UR5 robot on which hand-eye calibration has been performed. Model training and experiments were run on a computer with an Intel(R) Core(TM) i7 3.40 GHz CPU, an NVIDIA GeForce GTX 1080 Ti graphics card, and 16 GB of memory, running Ubuntu 16.04.
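Because the UR5 is hand-eye calibrated against a fixed top-down camera, a point detected in the image can be mapped into the robot base frame. The following is a minimal Python/NumPy sketch of that mapping under stated assumptions, not the patent's own procedure. It assumes a pinhole camera with intrinsic matrix `K`, a known depth along the optical axis (for example, a fixed camera-to-table distance), and a 4×4 camera-to-base transform `T_base_cam` obtained from hand-eye calibration. The function name `pixel_to_base` and all numeric values are hypothetical.

```python
import numpy as np


def pixel_to_base(u, v, depth, K, T_base_cam):
    """Back-project pixel (u, v) at a known depth into the robot base frame.

    Hypothetical helper: assumes a pinhole model with intrinsics K (3x3),
    `depth` in metres along the camera optical axis, and T_base_cam (4x4),
    the camera pose in the robot base frame from hand-eye calibration.
    """
    fx, fy = K[0, 0], K[1, 1]
    cx, cy = K[0, 2], K[1, 2]
    # Pinhole back-projection into the camera frame, in homogeneous coordinates.
    p_cam = np.array([(u - cx) * depth / fx, (v - cy) * depth / fy, depth, 1.0])
    # Rigid transform from the camera frame into the robot base frame.
    return (T_base_cam @ p_cam)[:3]


# Example values (assumed, not from the patent): principal point (320, 240),
# camera 0.8 m above the table and looking straight down
# (a 180-degree rotation about the x axis).
K = np.array([[600.0, 0.0, 320.0],
              [0.0, 600.0, 240.0],
              [0.0, 0.0, 1.0]])
T_base_cam = np.array([[1.0, 0.0, 0.0, 0.4],
                       [0.0, -1.0, 0.0, 0.0],
                       [0.0, 0.0, -1.0, 0.8],
                       [0.0, 0.0, 0.0, 1.0]])

print(pixel_to_base(380, 240, 0.8, K, T_base_cam))  # approx. [0.48, 0.0, 0.0]
```

With these assumed values the principal point lands directly below the camera on the table plane (z = 0), and a 60-pixel shift in u corresponds to 8 cm in the base x direction.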

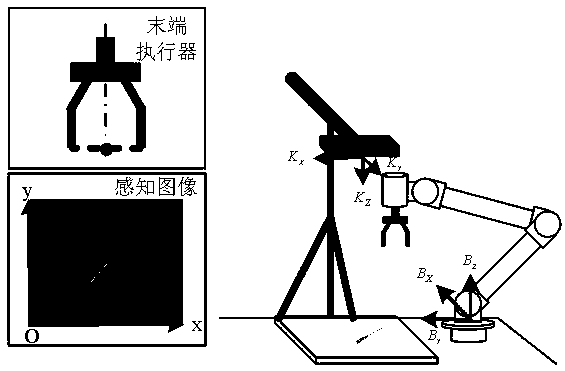

[0042] Figure 2 defines each coordinate system and the relations among them. To make the grasp detection results correspond directly to the robot's grasping pose, the grasping pose detected in the image is represented by a simplified "dot-line method". The center point of the grasping position in the image is denoted … in the image coordinate system and corresponds to the midpoint of the line connecting the two fingers of the robot end effector; the grasping center line in the image corresponds to the line co...
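The dot-line representation can be converted to and from a compact grasp parameterization. The sketch below illustrates this under stated assumptions and is not the patent's own definition. It assumes the grasp center line is given by two image endpoints corresponding to the two fingertip positions, and it encodes the grasp as a center point (u, v), an in-image angle `theta`, and an opening `width` in pixels. The names `DotLineGrasp`, `from_endpoints`, and `to_endpoints` are hypothetical.

```python
import math
from dataclasses import dataclass


@dataclass
class DotLineGrasp:
    """Hypothetical grasp parameterization derived from the dot-line representation."""
    u: float      # grasp center, image column (pixels)
    v: float      # grasp center, image row (pixels)
    theta: float  # angle of the grasp line in the image, radians, in [-pi/2, pi/2)
    width: float  # length of the grasp line (pixels), i.e. the finger opening in the image


def from_endpoints(p1, p2):
    """Build a grasp from the two endpoints of the grasp line (assumed fingertip positions)."""
    (x1, y1), (x2, y2) = p1, p2
    theta = math.atan2(y2 - y1, x2 - x1)
    # A parallel-jaw grasp looks the same after a 180-degree rotation, so fold
    # theta into [-pi/2, pi/2) to give each grasp a single angle.
    if theta >= math.pi / 2:
        theta -= math.pi
    elif theta < -math.pi / 2:
        theta += math.pi
    return DotLineGrasp(
        u=(x1 + x2) / 2,  # center point = midpoint between the two fingers
        v=(y1 + y2) / 2,
        theta=theta,
        width=math.hypot(x2 - x1, y2 - y1),
    )


def to_endpoints(g):
    """Recover the two grasp-line endpoints from the center, angle and width."""
    dx = 0.5 * g.width * math.cos(g.theta)
    dy = 0.5 * g.width * math.sin(g.theta)
    return (g.u - dx, g.v - dy), (g.u + dx, g.v + dy)


g = from_endpoints((10, 20), (30, 40))
print(g)                # center (20.0, 30.0), theta = pi/4, width = 20*sqrt(2)
print(to_endpoints(g))  # endpoints back at approximately (10, 20) and (30, 40)
```

Because the image v axis points downward, a positive `theta` here is a clockwise rotation as seen on screen. Turning it into a robot yaw angle would still require the coordinate-frame relations defined in Figure 2.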
