Scene text detection method based on end-to-end full convolutional neural network

A scene text detection method based on an end-to-end fully convolutional neural network, in the technical field of convolutional neural networks and text detection. It addresses the problem that the geometric characteristics of text cannot be accurately expressed, and has good application value.

Embodiment

[0062] This embodiment is implemented as described above, so the specific steps are not repeated here; only the results on the case data are shown. The present invention was evaluated on two datasets with ground-truth labels, namely:

[0063] MSRA-TD500 dataset: This dataset contains 300 training images and 200 testing images.

[0064] ICDAR 2015 dataset: This dataset contains 1000 training images and 500 testing images.

[0065] In this embodiment, experiments are carried out on each dataset. Example images from the datasets are shown in Figure 2.

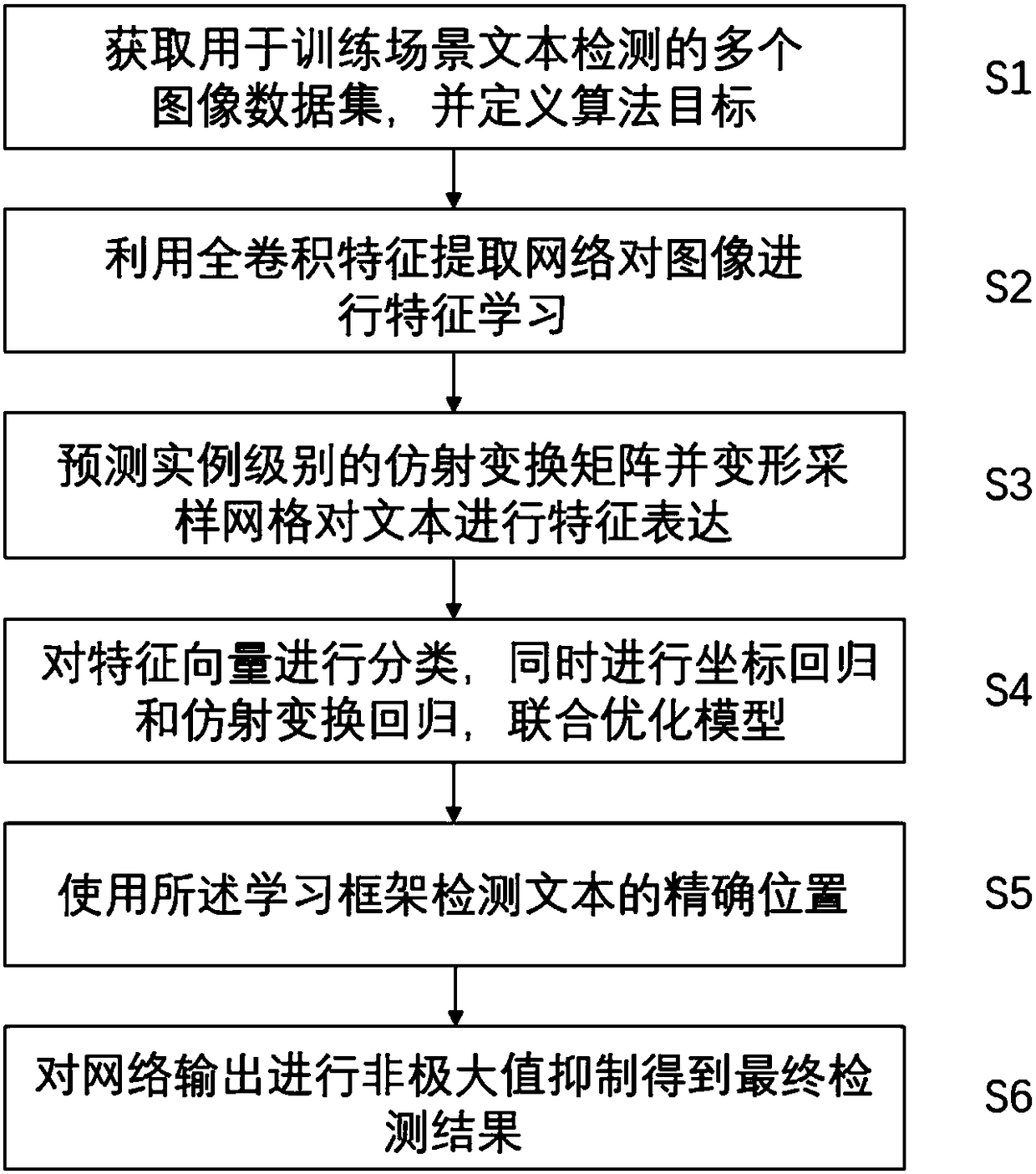

[0066] The main process of text detection is as follows:

[0067] 1) Extract multi-scale feature maps of the image with the base fully convolutional network;

[0068] 2) Fuse the feature maps at three scales to obtain the initial features;

[0069] 3) Use one convolution layer to predict an affine transformation matrix for each sample point on the feature map, and ...
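
Steps 1) to 3) can be illustrated with a minimal NumPy sketch. It is not the patented implementation. The source does not state the fusion operator, the kernel size, or the layout of the affine matrix, so the sketch assumes nearest-neighbour upsampling followed by channel concatenation for the fusion, a 1x1 convolution for the prediction layer, and a 2x3 matrix (six parameters) per sample point. The function names `upsample_nearest`, `fuse_three_scales` and `predict_affine`, the channel counts, and the random inputs standing in for backbone features are all hypothetical.

```python
import numpy as np


def upsample_nearest(fmap, factor):
    """Nearest-neighbour upsampling of a (C, H, W) feature map by an integer factor."""
    return fmap.repeat(factor, axis=1).repeat(factor, axis=2)


def fuse_three_scales(f_fine, f_mid, f_coarse):
    """Bring three feature maps to the finest resolution and concatenate channels.

    Assumption: neighbouring scales differ by a factor of 2 in resolution.
    """
    f_mid_up = upsample_nearest(f_mid, 2)
    f_coarse_up = upsample_nearest(f_coarse, 4)
    return np.concatenate([f_fine, f_mid_up, f_coarse_up], axis=0)


def predict_affine(fused, weight, bias):
    """Apply a 1x1 convolution that outputs six values per location.

    fused:  (C, H, W) fused feature map
    weight: (6, C) kernel of the assumed 1x1 convolution
    bias:   (6,) bias vector
    Returns an array of shape (H, W, 2, 3): one 2x3 affine matrix per sample point.
    """
    out = np.einsum("oc,chw->ohw", weight, fused) + bias[:, None, None]
    h, w = out.shape[1:]
    return out.transpose(1, 2, 0).reshape(h, w, 2, 3)


# Random stand-ins for the multi-scale outputs of the backbone network.
rng = np.random.default_rng(0)
f_fine = rng.standard_normal((8, 16, 16))
f_mid = rng.standard_normal((16, 8, 8))
f_coarse = rng.standard_normal((32, 4, 4))

fused = fuse_three_scales(f_fine, f_mid, f_coarse)

# Small random weights; the bias encodes the identity transform [[1, 0, 0], [0, 1, 0]].
weight = rng.standard_normal((6, fused.shape[0])) * 0.01
bias = np.array([1.0, 0.0, 0.0, 0.0, 1.0, 0.0])
affine = predict_affine(fused, weight, bias)
```

In this sketch `affine[y, x]` is the 2x3 matrix predicted for the sample point at row `y`, column `x` of the fused map. How the method uses that matrix afterwards is not covered here, because the source text is truncated at that point.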
