Human movement identification method through fusion of deep neural network model and binary system Hash

A technology for human action recognition using a deep neural network, applied in character and pattern recognition, instruments, computer components, and related fields. It addresses problems such as high computational complexity, a large number of parameters, and difficulty in capturing motion dynamics, and achieves good recognition performance with simple operation.

Embodiment Construction

[0038] The technical solutions in the embodiments of the present invention will be described clearly and in detail below in conjunction with the drawings in the embodiments of the present invention. The described embodiments are only a part of the embodiments of the present invention.

[0039] The technical solutions of the present invention to solve the above technical problems are:

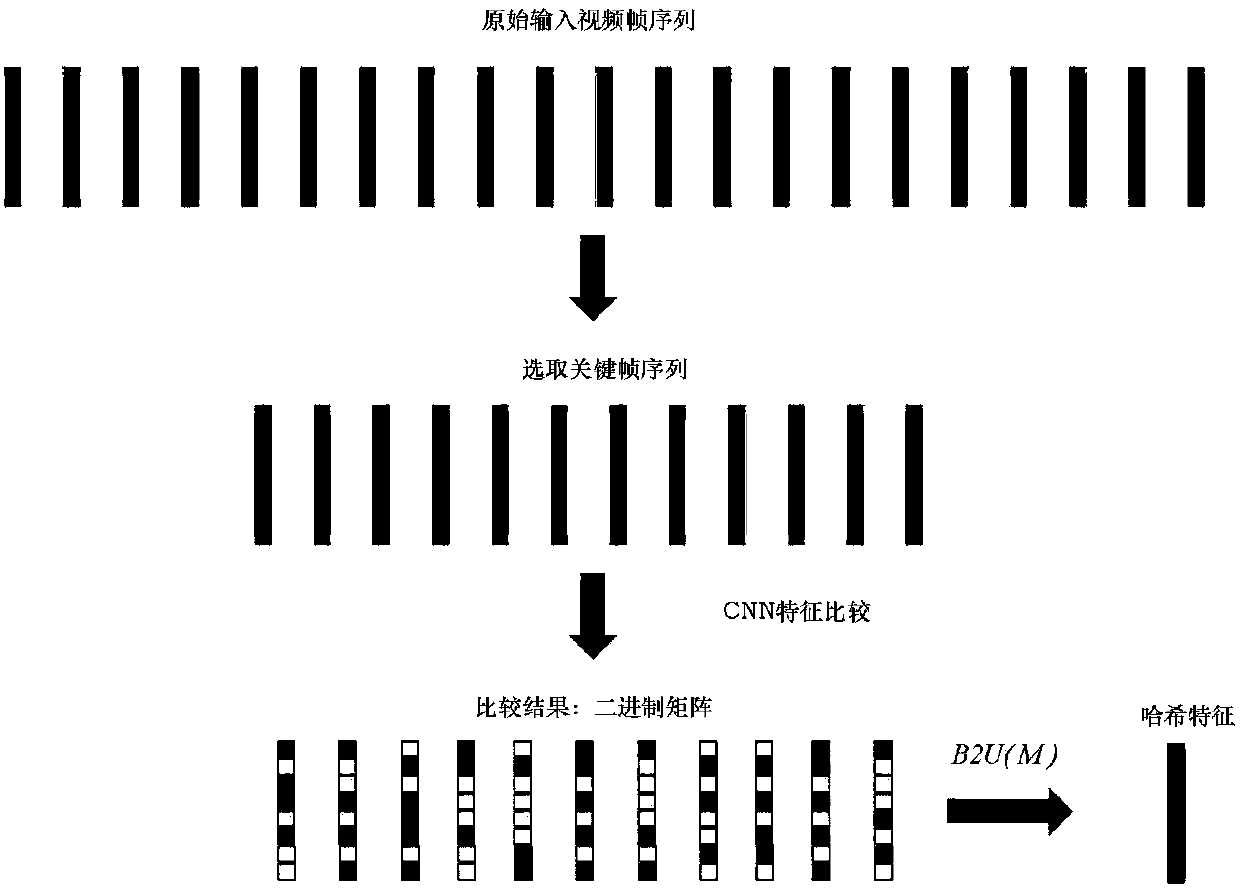

[0040] As shown in Figures 1-2, a human action recognition method based on a deep network model and a binary hash method includes the following steps:

[0041] 1. Extract the deep features of the video

[0042] The samples in the experimental video library are divided into a training set and a test set, and fully connected (FC) layer features are extracted from all samples. The detailed steps of the extraction method are as follows:

[0043] 1) Split the input video into frames

[0044] In order to extract the local feature information of the video, the input video containing human movements is divided i...
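As a rough illustration of step 1), the sketch below splits a decoded video into individual frames. It assumes the video is already held in memory as a sequence of frames (here nested lists stand in for images). The function name `split_into_frames` and the `step` subsampling parameter are hypothetical and are not taken from the patent, which does not state here how decoding or frame sampling is performed.

```python
from typing import List, Sequence, TypeVar

Frame = TypeVar("Frame")


def split_into_frames(video: Sequence[Frame], step: int = 1) -> List[Frame]:
    """Return every `step`-th frame of a decoded video, in temporal order.

    Assumption: `video` is an in-memory sequence of frames.
    Assumption: `step` is an illustrative subsampling parameter;
    step=1 keeps every frame.
    """
    if step < 1:
        raise ValueError("step must be a positive integer")
    return [video[i] for i in range(0, len(video), step)]


# Toy "video": 6 frames, each a 2x2 grayscale image filled with its frame index.
toy_video = [[[t, t], [t, t]] for t in range(6)]

frames = split_into_frames(toy_video)               # keep all frames
every_other = split_into_frames(toy_video, step=2)  # keep frames 0, 2, 4
```

In a real pipeline the frames would come from a video decoder rather than a hand-built list; that step is omitted here because the source does not describe it.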
