Fusion correction method of stereo vision and low-beam lidar in unmanned driving

A lidar and stereo-vision technology, applied in radio-wave measurement systems, electromagnetic-wave re-radiation, image enhancement, and related fields. It addresses three problems: pixel accuracy cannot be guaranteed, the scene changes in complex ways, and the surrounding environment cannot be directly perceived and processed. Its effects are reduced cost, improved visual accuracy, and improved binocular-vision accuracy.

Embodiment Construction

[0016] The invention is further described below with reference to the accompanying drawings and specific embodiments, so that those skilled in the art can implement it by referring to the description. The protection scope of the present invention is not limited to these specific embodiments.

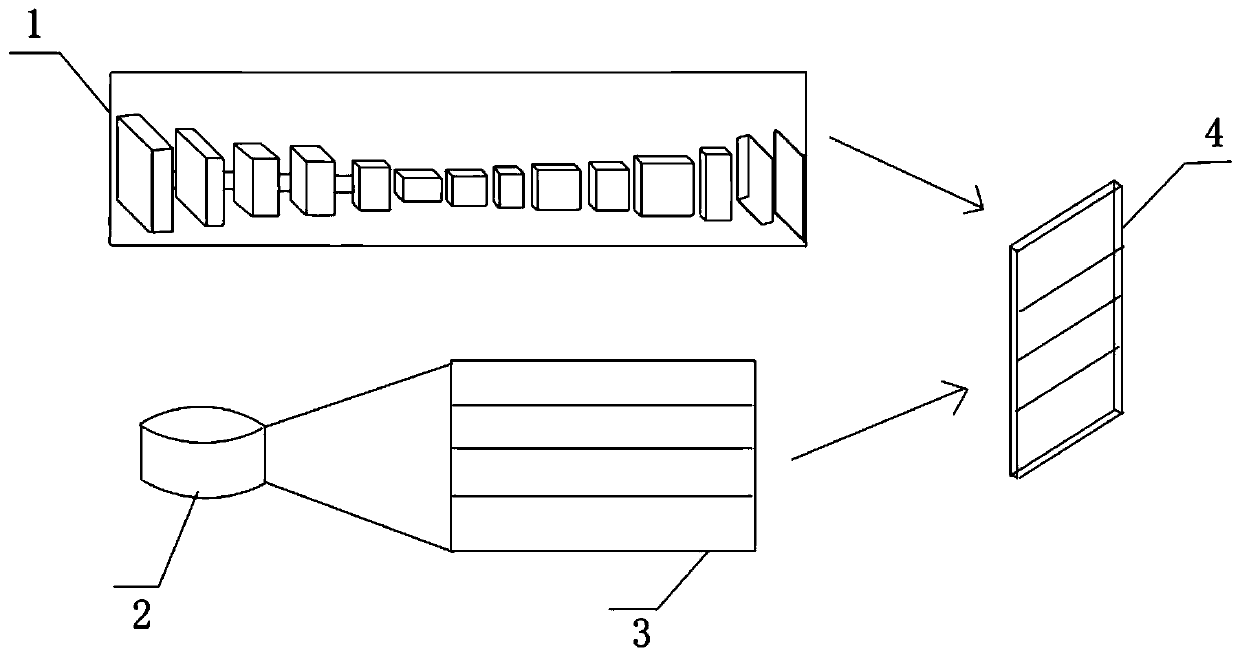

[0017] The invention relates to a method for the fusion correction of stereo vision and low-beam lidar in unmanned driving. The method includes the following steps:

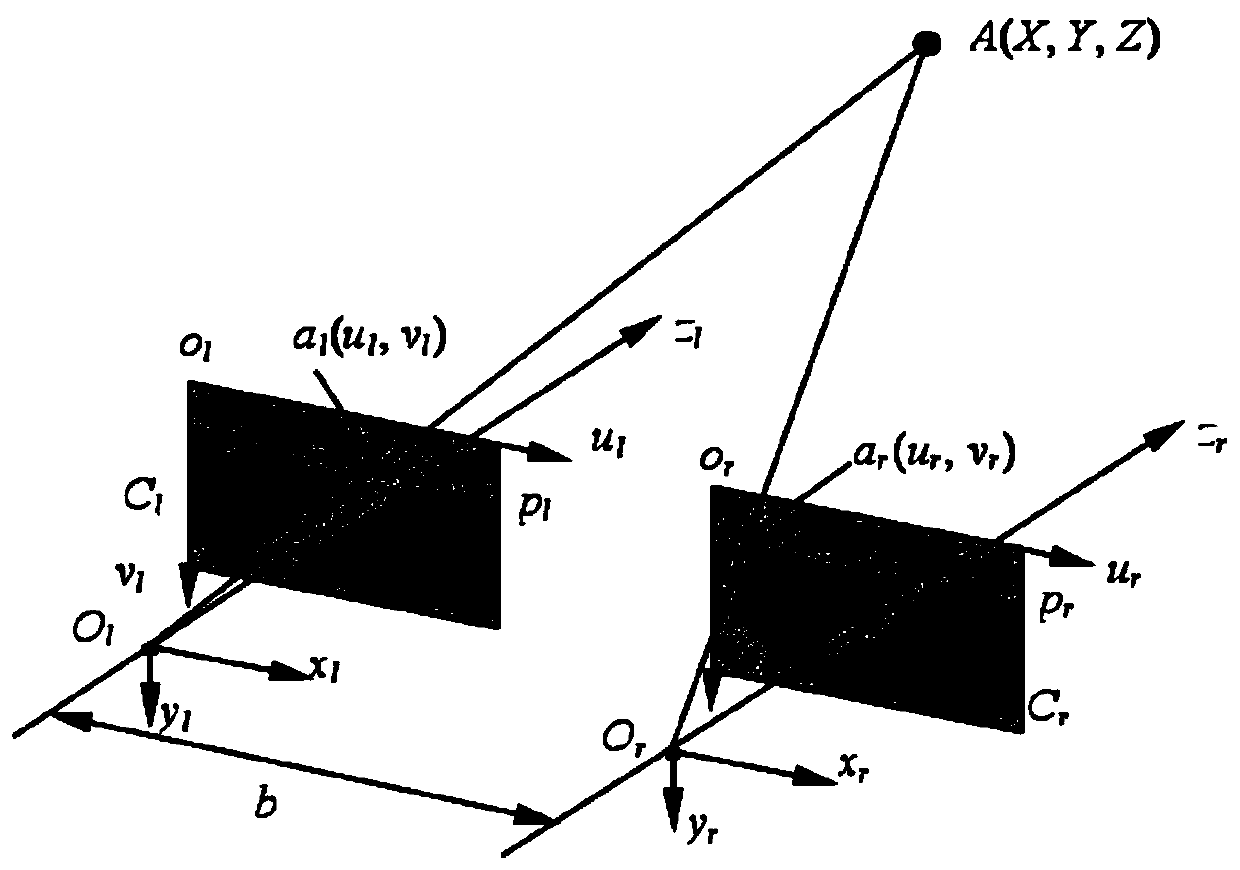

[0018] (1) Register the binocular camera and the lidar spatially and temporally. The binocular camera is aimed at the target to collect images, and the lidar emits its scan-line beams toward the target and collects data. Spatial registration means that the position of the binocular camera is matched to the position of the lidar; temporal registration means that the binocular camera captures images at the same time as the lidar emits its beam;...
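Step (1) does not say how the two registrations are carried out. The following is a minimal sketch of one common way to do each, under stated assumptions: temporal registration as nearest-timestamp pairing of camera frames with lidar sweeps inside a tolerance, and spatial registration as a rigid transform (R, t) from the lidar frame into the left camera frame followed by an undistorted pinhole projection. The function names `pair_by_timestamp` and `project_point`, the 10 ms default tolerance, and the intrinsics `fx`, `fy`, `cx`, `cy` are hypothetical and not taken from the patent. If the camera is hardware-triggered by the lidar, as "simultaneous acquisition" may imply, the software pairing step would be unnecessary.

```python
from bisect import bisect_left
from typing import List, Optional, Sequence, Tuple

Vec3 = Tuple[float, float, float]
Mat3 = Tuple[Vec3, Vec3, Vec3]


def pair_by_timestamp(
    camera_ts: Sequence[float],
    lidar_ts: Sequence[float],
    max_gap: float = 0.01,  # hypothetical tolerance in seconds
) -> List[Tuple[int, int]]:
    """Temporal registration (assumed approach): match each camera frame to
    the lidar sweep with the nearest timestamp; drop pairs farther apart than
    max_gap. lidar_ts must be sorted in ascending order."""
    pairs: List[Tuple[int, int]] = []
    for i, t in enumerate(camera_ts):
        k = bisect_left(lidar_ts, t)
        best: Optional[Tuple[int, float]] = None
        # Only the sweeps immediately before and after t can be nearest.
        for j in (k - 1, k):
            if 0 <= j < len(lidar_ts):
                gap = abs(lidar_ts[j] - t)
                if best is None or gap < best[1]:
                    best = (j, gap)
        if best is not None and best[1] <= max_gap:
            pairs.append((i, best[0]))
    return pairs


def project_point(
    p_lidar: Vec3,
    R: Mat3,
    t: Vec3,
    fx: float,
    fy: float,
    cx: float,
    cy: float,
) -> Optional[Tuple[float, float, float]]:
    """Spatial registration (assumed approach): move a lidar point into the
    left camera frame with extrinsics (R, t), where R also encodes any axis
    convention change, then apply a distortion-free pinhole model.
    Returns (u, v, depth), or None if the point lies behind the camera."""
    x, y, z = (
        sum(R[r][c] * p_lidar[c] for c in range(3)) + t[r] for r in range(3)
    )
    if z <= 0.0:
        return None
    return (fx * x / z + cx, fy * y / z + cy, z)
```

With identity extrinsics and `fx = fy = 500`, `cx = 320`, `cy = 240`, the lidar point `(1.0, 0.0, 10.0)` lands at pixel `(370.0, 240.0)` with depth `10.0`. Each projected point then gives a sparse depth sample at a known pixel of the stereo image, which is the kind of correspondence a fusion-correction step would need.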
