Three-dimensional ground object automatic extraction and scene reconstruction method

An automatic extraction and scene reconstruction technology, applied in scene recognition, 3D modeling, instruments, etc., that addresses problems such as difficulty of implementation and poor flexibility.



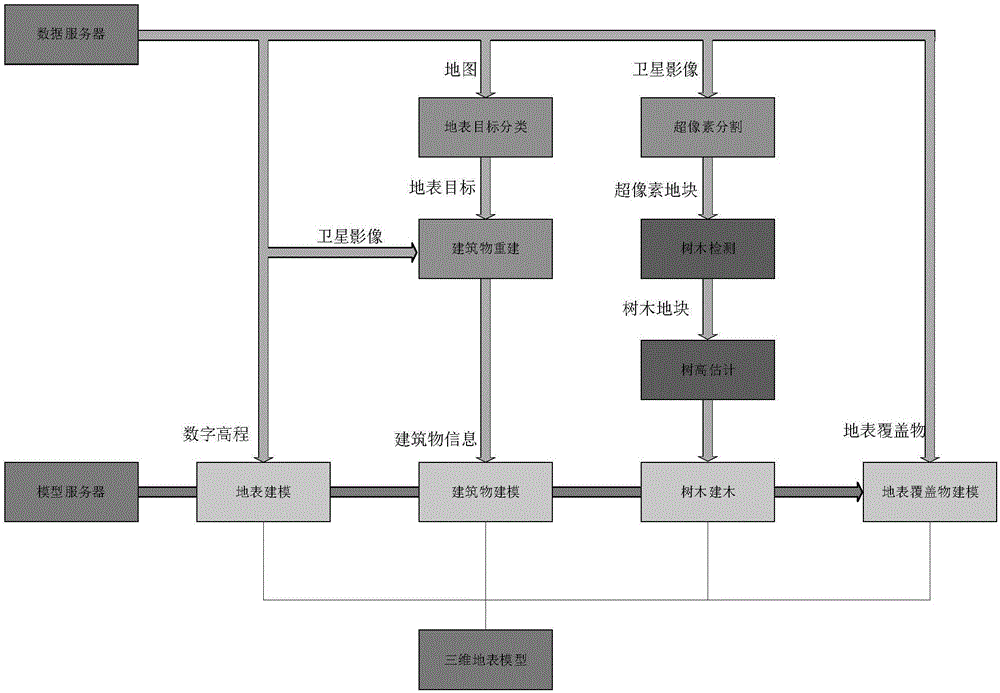


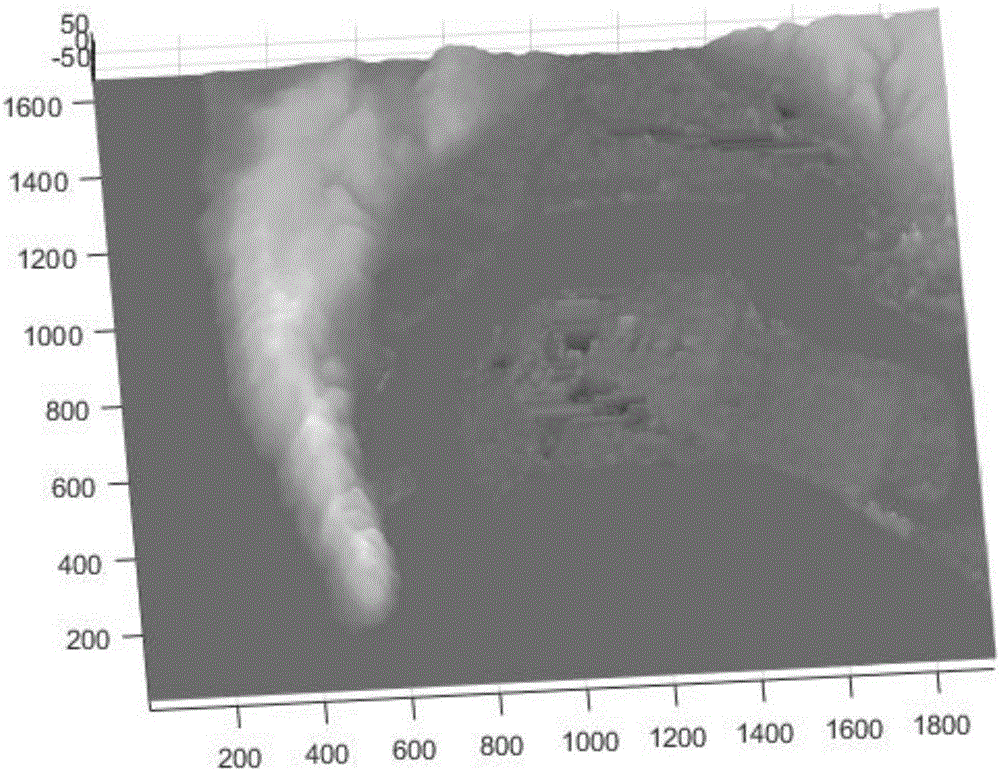

Embodiment Construction

[0055] In the present invention, land cover classification uses both map data and land cover type data. Pixels in the map data are classified with a nearest neighbor classifier, using map data that carries feature labels. The cluster center points of the nearest neighbor algorithm are predetermined; the color information corresponding to each category in one example of the present invention is given in Table 1, where the map data used is Google map data. Applying nearest neighbor classification to the map data quickly extracts the main targets marked in it, such as roads, buildings, water bodies, and airports, which gives a preliminary extraction of the land cover types. The land cover information is then used to finely classify the areas marked in the map data as green space, open space, and the like, achieving high-precision classification of land types. Through the combination of map data and land surface ty...
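The two-stage classification described in [0055] can be sketched as follows. This is a minimal illustration, not the patent's implementation: the class names and RGB values in `CLASS_CENTERS` are hypothetical placeholders (the actual colors of Table 1 are not reproduced here), and the helpers `classify_pixel`, `classify_image`, and `refine_with_land_cover` are names introduced for this example. The refinement step assumes a land cover raster aligned pixel-for-pixel with the map image, which the source does not state.

```python
from typing import Dict, List, Optional, Sequence, Tuple

RGB = Tuple[int, int, int]

# Predetermined cluster centers: one reference color per map category.
# ASSUMPTION: these values are illustrative only, not the patent's Table 1.
CLASS_CENTERS: Dict[str, RGB] = {
    "road": (255, 255, 255),
    "building": (240, 230, 210),
    "water": (170, 210, 255),
    "green_space": (200, 235, 190),
}


def squared_distance(a: RGB, b: RGB) -> int:
    """Squared Euclidean distance between two RGB colors."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def classify_pixel(pixel: RGB, centers: Dict[str, RGB] = CLASS_CENTERS) -> str:
    """Assign the pixel to the category whose center color is nearest."""
    return min(centers, key=lambda name: squared_distance(pixel, centers[name]))


def classify_image(
    image: List[List[RGB]], centers: Dict[str, RGB] = CLASS_CENTERS
) -> List[List[str]]:
    """Preliminary extraction: label every pixel of the map image."""
    return [[classify_pixel(p, centers) for p in row] for row in image]


def refine_with_land_cover(
    labels: List[List[str]],
    land_cover: List[List[Optional[str]]],
    coarse: Sequence[str] = ("green_space",),
) -> List[List[str]]:
    """Fine classification: where the map label is a coarse category,
    substitute the land cover type for that pixel if one is available."""
    return [
        [lc if lab in coarse and lc is not None else lab
         for lab, lc in zip(label_row, cover_row)]
        for label_row, cover_row in zip(labels, land_cover)
    ]


# Usage: a 1x3 map image and a matching (hypothetical) land cover raster.
image = [[(168, 208, 250), (255, 255, 255), (198, 236, 188)]]
coarse_labels = classify_image(image)
fine_labels = refine_with_land_cover(coarse_labels, [["lake", "asphalt", "forest"]])
```

Because the centers are fixed in advance, each pixel needs only one distance comparison per category, which is why the preliminary extraction is fast. Only pixels in coarse categories are overwritten by the land cover data, so clearly marked targets such as roads and water keep their map-derived labels.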
