Multi-target tracking method based on multi-model fusion and data association

A multi-target tracking method based on multi-model fusion and data association, applied in the cross-disciplinary fields of image processing, video detection, and artificial intelligence. It addresses problems such as the failure to automatically recover a target when it reappears, the inability to meet real-time requirements, and the inability to keep tracking accurately. The method reduces interference from lighting and background noise and achieves good real-time performance, robustness, and fast processing speed.

Specific embodiment

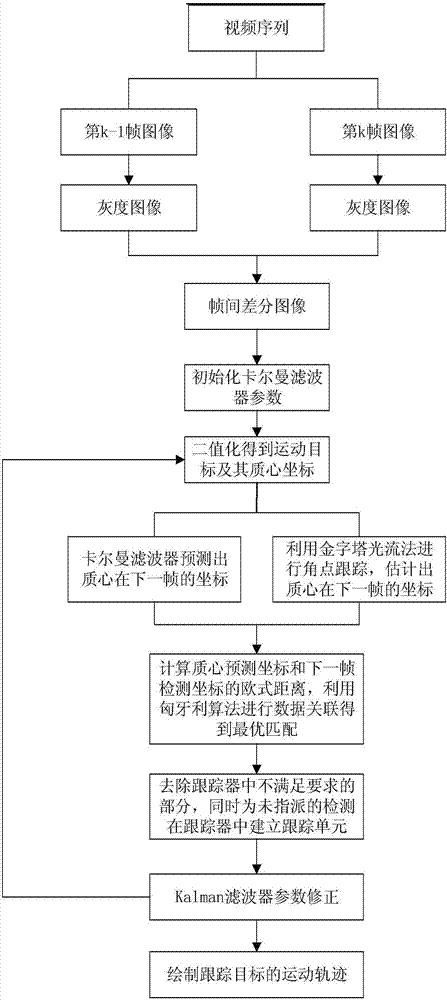

[0043] With reference to Figure 1, a specific embodiment of the present invention is as follows:

[0044] 1) Input a video sequence $S = \{f_1, f_2, \ldots, f_{50}\}$, where $f_i$ is the i-th frame of the shot, represented by a two-dimensional matrix of size 50*50. The video shot S is processed by the inter-frame difference method to obtain the contour and centroid coordinates of the moving target. The specific steps are as follows:

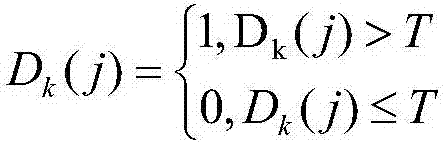

[0045] 1.1) Taking frames $f_1$ and $f_2$ of the video shot S as an example, grayscale processing is performed to obtain the grayscale images $f'_1$ and $f'_2$. For each pixel j in $f'_1$ and $f'_2$, calculate $D_2(j) = f'_2(j) - f'_1(j)$, then classify j according to the following decision rule:

[0046] if $D_2(j) > T$, j is judged to be a foreground point;

[0047] if $D_2(j) \le T$, j is judged to be a background point.
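The decision rule in [0045] to [0047] can be sketched in a few lines of NumPy. This is a minimal illustration, not the patent's implementation: the function name `foreground_mask`, the synthetic frames, and the threshold value 30 are assumptions made for the example. It follows the signed difference given in the text; many frame-differencing implementations use the absolute difference instead.

```python
import numpy as np

def foreground_mask(prev_gray, curr_gray, threshold):
    """Return a boolean mask, True where D(j) = curr(j) - prev(j) > threshold."""
    # Cast to a signed type so subtracting uint8 frames cannot wrap around.
    diff = curr_gray.astype(np.int16) - prev_gray.astype(np.int16)
    # Foreground where D(j) > T, background where D(j) <= T.
    return diff > threshold

# Two hypothetical 50x50 grayscale frames: a bright 3x3 patch appears in the second.
f1 = np.zeros((50, 50), dtype=np.uint8)
f2 = f1.copy()
f2[10:13, 20:23] = 200

# The threshold T = 30 is an arbitrary value chosen for this example.
mask = foreground_mask(f1, f2, threshold=30)
```

Here `mask` marks exactly the nine pixels of the patch as foreground. Pixels that became darker between frames stay background under the signed rule.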

[0048] This yields the moving-target contour $D_2$. The coordinates of its center point are then stored as the centroid coordinates of the moving target in the Point type ...
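One way to obtain a centroid from the foreground region is to average the coordinates of its pixels, as in the sketch below. It rests on assumptions: the source does not say how the center point is computed (the mean of foreground pixels is used here, and a bounding-box center would be another reading), the helper name `centroid` is hypothetical, and a plain `(x, y)` tuple stands in for the Point type mentioned in the text.

```python
import numpy as np

def centroid(mask):
    """Return the (x, y) mean coordinates of True pixels, or None if there are none."""
    # np.nonzero returns row indices (y) first, then column indices (x).
    ys, xs = np.nonzero(mask)
    if xs.size == 0:
        return None  # no foreground pixels, so no moving target in this frame pair
    return float(xs.mean()), float(ys.mean())

# Hypothetical 50x50 foreground mask with a single 3x3 moving region.
mask = np.zeros((50, 50), dtype=bool)
mask[10:13, 20:23] = True

center = centroid(mask)
```

This sketch treats the whole mask as one target. A frame with several moving targets would first need to be split into connected regions, with one centroid computed per region.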
