Key frame extraction method for RGBD 3D reconstruction

A key frame extraction method for RGBD three-dimensional reconstruction. It addresses problems such as motion blur in the depth and RGB images, low key frame quality, and the resulting degradation of texture extraction. Its effects are reduced motion blur, improved accuracy, and fewer holes and less noise.


Embodiment Construction

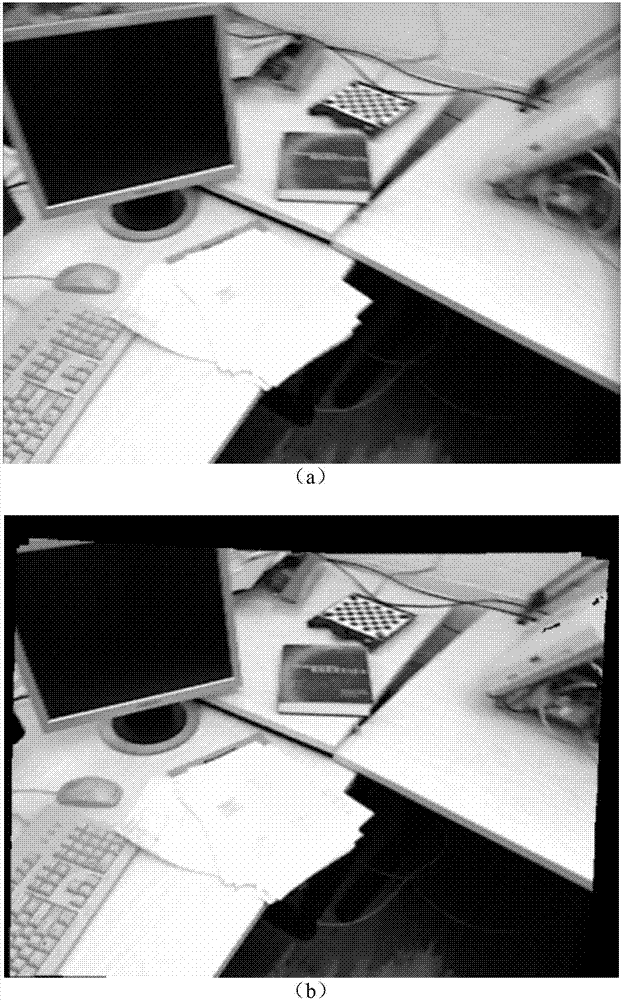

[0023] Embodiments of the present invention will be described in detail with reference to the accompanying drawings.

[0024] The implementation process of the present invention is mainly divided into four steps: RGBD data frame grouping, projection depth image calculation, projection RGB image calculation, and projection data fusion.

[0025] Step 1. Grouping of RGBD data frames

[0026] For a given registered RGBD data stream $Input_1 \sim Input_n$, several frames with adjacent timestamps are placed into one group: the RGB images (taking $C_1 \sim C_k$ as an example), the depth images (taking $D_1 \sim D_k$ as an example), and the corresponding camera poses (taking $T_1 \sim T_k$ as an example).
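The grouping step can be sketched as follows. The source does not state how the group size $k$ is chosen, whether groups overlap, or how a leftover tail is handled; this sketch assumes a fixed $k$, non-overlapping groups of consecutive timestamps, and keeps a shorter trailing group. The names `RGBDFrame` and `group_frames` are hypothetical.

```python
from dataclasses import dataclass
from typing import Any, List, Sequence


@dataclass
class RGBDFrame:
    """One registered sample of the stream (Input_i)."""
    timestamp: float
    rgb: Any    # RGB image C_i (e.g. an H x W x 3 array)
    depth: Any  # depth image D_i, registered to the RGB image
    pose: Any   # camera pose T_i (e.g. a 4 x 4 matrix)


def group_frames(frames: Sequence[RGBDFrame], k: int) -> List[List[RGBDFrame]]:
    """Split the stream into consecutive groups of k frames with adjacent
    timestamps.

    Assumptions (not specified in the source): fixed group size k,
    non-overlapping groups, and a shorter trailing group is kept.
    """
    if k <= 0:
        raise ValueError("group size k must be positive")
    # Order by timestamp so each group holds temporally adjacent frames.
    ordered = sorted(frames, key=lambda f: f.timestamp)
    return [ordered[i:i + k] for i in range(0, len(ordered), k)]
```

For example, five frames with timestamps 0.3, 0.1, 0.2, 0.4, 0.5 and $k = 2$ yield the groups {0.1, 0.2}, {0.3, 0.4}, and {0.5}. A sliding-window (overlapping) grouping would be an equally plausible reading of the text.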

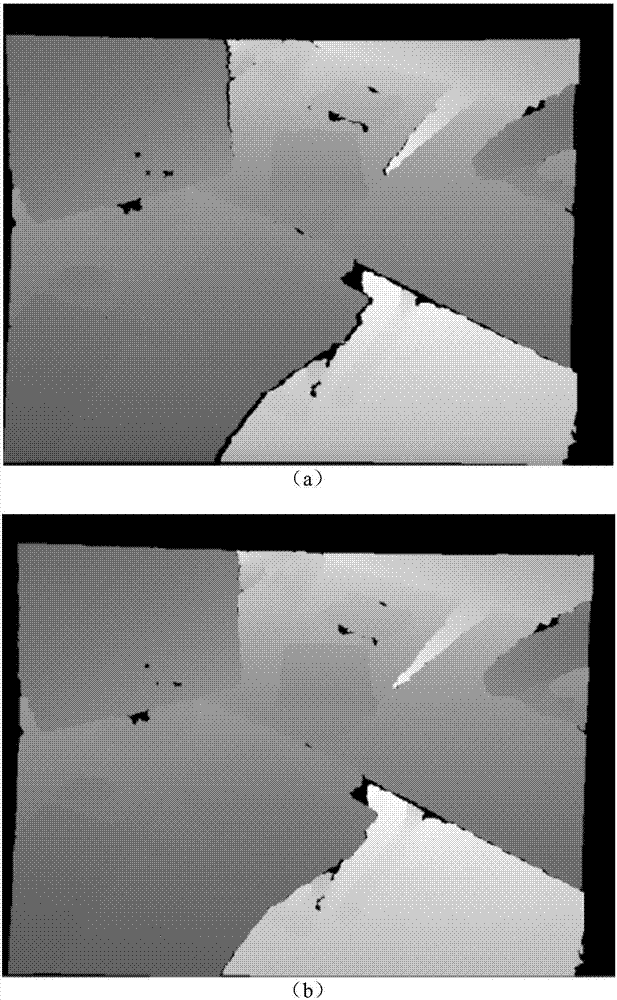

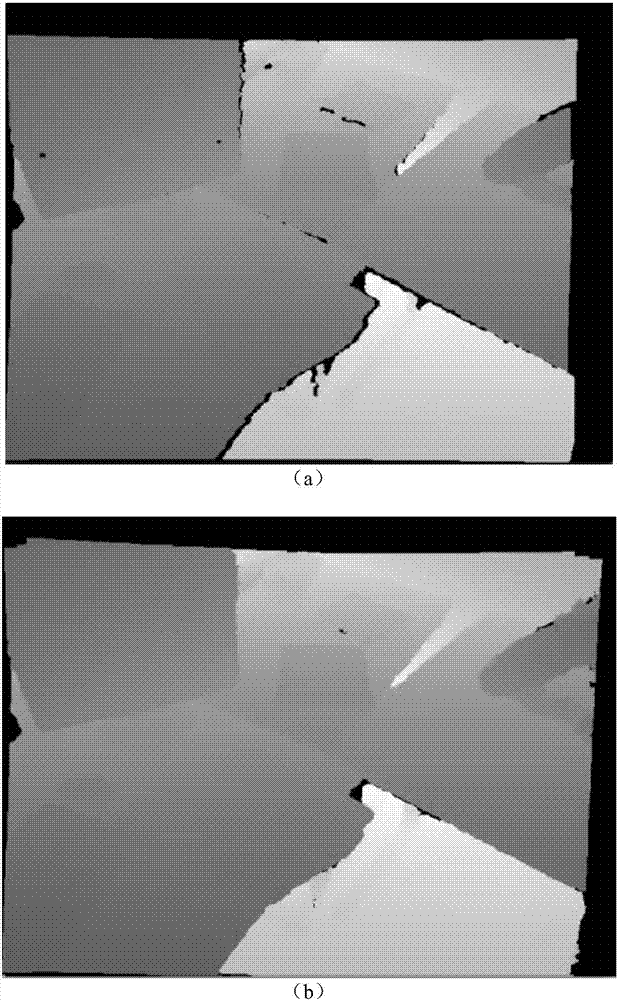

[0027] Step 2. Projection depth image calculation

[0028] Its main steps are:

[0029] Step (2.1): Using the depth camera intrinsic parameters $K_d$, map each pixel of $D_1 \sim D_k$ into three-dimensional space, specifically:

[0030] $p = K_d \cdot (u, v, d)^T \qquad (1)$

[0031] where $p$ is the mapped three-dimensional ...
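Equation (1) is written compactly, and the text defining its terms is truncated. Under the standard pinhole-camera reading, where $K_d$ holds the focal lengths $f_x, f_y$ and the principal point $c_x, c_y$, mapping a pixel $(u, v)$ with depth $d$ to camera space amounts to $p = d\,K_d^{-1}(u, v, 1)^T$, i.e. $x = (u - c_x)\,d / f_x$, $y = (v - c_y)\,d / f_y$, $z = d$. The sketch below assumes that interpretation. The function name `backproject_depth`, the `depth_scale` parameter, and the choice to skip zero-depth pixels as holes are all hypothetical.

```python
from typing import List, Sequence, Tuple

Point3 = Tuple[float, float, float]


def backproject_depth(depth: Sequence[Sequence[float]],
                      fx: float, fy: float, cx: float, cy: float,
                      depth_scale: float = 1.0) -> List[Point3]:
    """Map each pixel (u, v) of a depth image to a camera-frame 3D point.

    Assumes the standard pinhole model p = d * K^{-1} (u, v, 1)^T, which is
    one interpretation of Eq. (1). depth[v][u] is the raw depth value and
    depth_scale converts it to metric units.
    """
    points: List[Point3] = []
    for v, row in enumerate(depth):
        for u, raw in enumerate(row):
            d = raw * depth_scale
            if d <= 0:  # assumption: zero/negative depth marks a hole
                continue
            x = (u - cx) * d / fx
            y = (v - cy) * d / fy
            points.append((x, y, d))
    return points
```

As a quick check, with $f_x = 500$ and $c_x = 320$, a pixel in column 420 at a depth of 2 m maps to $x = 100 \cdot 2 / 500 = 0.4$ m. Each depth image $D_i$ would be processed this way before any use of its pose $T_i$, which the truncated text may describe.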
