Hyperspectral Image Super-resolution Reconstruction Method Based on Coupling Dictionary and Spatial Transformation Estimation

A hyperspectral imaging and spatial transformation technology, applied to hyperspectral image super-resolution reconstruction based on a coupling dictionary and spatial transformation estimation. It addresses the problem of low reconstruction accuracy, achieves a good super-resolution effect, and reduces limitations on use.

Embodiment Construction

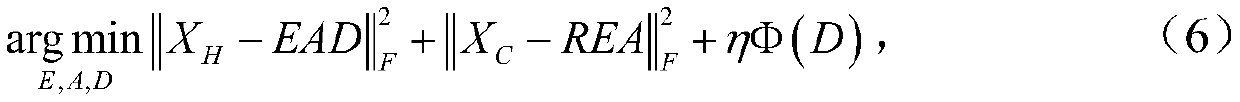

[0054] The spatial resolution of hyperspectral images is very low, and simply applying super-resolution methods developed for true-color images does not improve it effectively. True-color images are comparatively easy to obtain, so the main purpose of the present invention is to use a true-color image of the same scene to improve the spatial resolution of the hyperspectral image. Assume that a hyperspectral image and a true-color image of the scene have been acquired and registered, and that the target image has both high spatial resolution and high spectral resolution. Here L and l denote the number of bands of the hyperspectral image and the true-color image, w and h denote the width and height of the low-resolution hyperspectral image, and W and H denote the width and height of the high-resolution true-color image. Let n and N denote the number of pixels in the hyperspectral image and the true-color image, respectively: n = w × h, N = W...
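The notation above can be illustrated with a minimal NumPy sketch. The concrete sizes, the variable names (`hyper_cube`, `color_cube`, `hyper_mat`, `color_mat`), and the bands-by-pixels matrix layout are all assumptions made for illustration and are not taken from the patent text. The sketch only shows how the band counts and pixel counts relate; it does not implement the reconstruction method.

```python
import numpy as np

# Hypothetical sizes, chosen only for illustration.
L, l = 31, 3      # number of bands: hyperspectral, true color
w, h = 8, 8       # width and height of the low-resolution hyperspectral image
W, H = 32, 32     # width and height of the high-resolution true-color image

n = w * h         # pixel count of the hyperspectral image
N = W * H         # pixel count of the true-color image (width times height)

rng = np.random.default_rng(0)
hyper_cube = rng.random((h, w, L))   # low spatial, high spectral resolution
color_cube = rng.random((H, W, l))   # high spatial, low spectral resolution

# Assumed layout: one row per band, one column per pixel.
hyper_mat = hyper_cube.reshape(n, L).T   # shape (L, n)
color_mat = color_cube.reshape(N, l).T   # shape (l, N)

# The target image would carry all L bands at all N pixels.
target_shape = (L, N)

print(hyper_mat.shape, color_mat.shape, target_shape)
```

Under this assumed layout, each column of `hyper_mat` is the spectrum of one low-resolution pixel, which is a common convention for dictionary-based formulations but is not confirmed by the excerpt.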
