Human action recognition method

A human action recognition technology, applied in the field of computer vision and pattern recognition, that addresses the problems of complex and time-consuming computation and improves both the action recognition rate and robustness to noise.


Embodiment

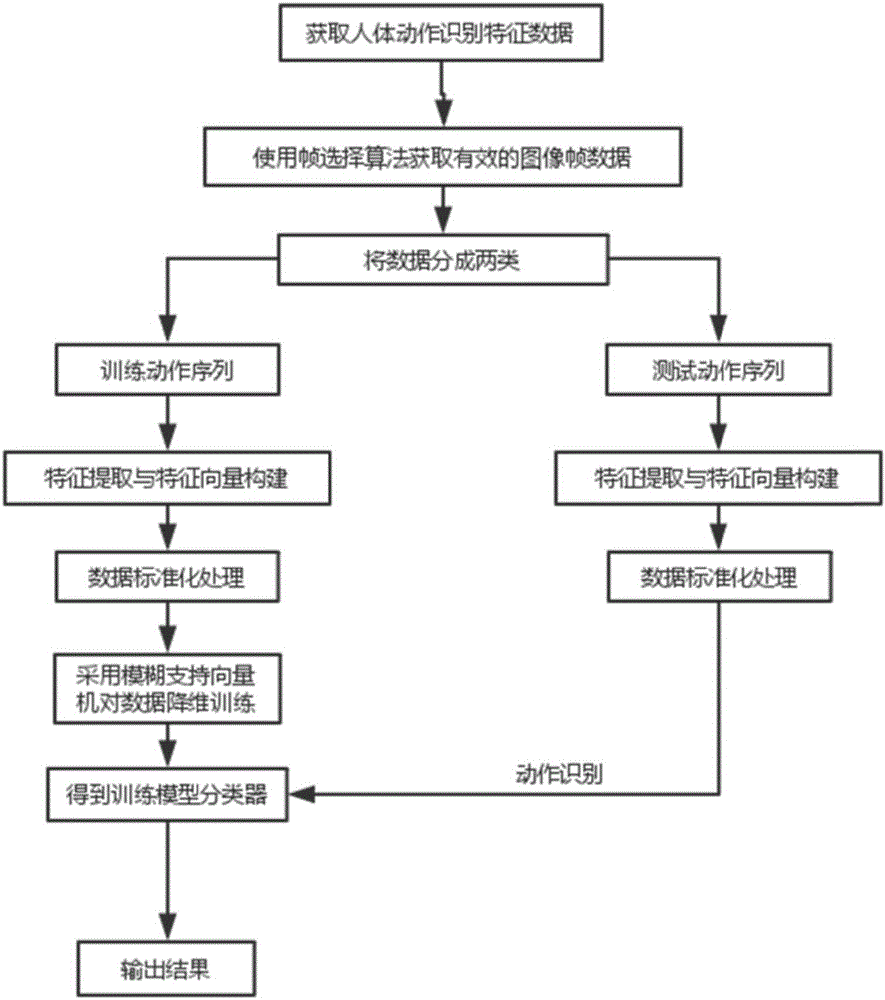

[0047] As shown in Figure 1, the present embodiment is a human action recognition method comprising the following steps.

[0048] Step 1: Obtain video of the human actions from which the features required for recognition are to be extracted;

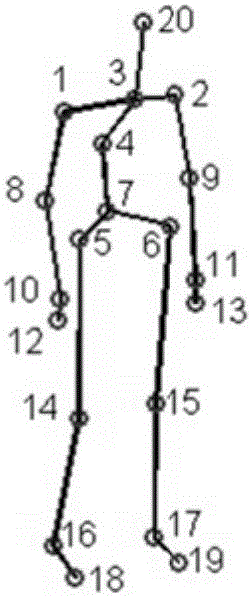

[0049] Specifically, the MSR Action3D action database can be used as experimental data. MSR Action3D is a public dataset that provides depth maps and skeleton sequences captured by an RGBD camera. It contains 20 actions performed by 10 subjects facing the camera, and each subject performs each action two to three times. The depth maps have a resolution of 320×240 pixels. To analyze the recognition results more clearly, 18 of the 20 action classes are selected and divided into three groups for separate experiments. These 18 actions are labeled Action1 to Action18.
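To make the experimental split concrete, the following Python sketch relabels 18 selected action classes as Action1 to Action18, partitions them into three groups, and sorts depth files into those groups. This is a sketch under stated assumptions, not the embodiment's procedure: the text does not say which 18 of the 20 classes are kept or how they are assigned to groups, so the equal contiguous split (six actions per group) is assumed, as is the depth-file naming pattern `aXX_sYY_eZZ_sdepth.bin`. All function names are hypothetical.

```python
import re
from collections import defaultdict

# ASSUMPTION: depth files are named a<action>_s<subject>_e<execution>_sdepth.bin
FILENAME_RE = re.compile(r"a(\d{2})_s(\d{2})_e(\d{2})_sdepth\.bin$")


def parse_name(filename):
    """Return (action, subject, execution) parsed from a file name, or None if it does not match."""
    m = FILENAME_RE.search(filename)
    if m is None:
        return None
    action, subject, execution = (int(g) for g in m.groups())
    return action, subject, execution


def build_groups(selected_ids, n_groups=3):
    """Relabel the selected original action ids as Action1..ActionN and split them
    into n_groups contiguous groups of equal size (hypothetical grouping rule)."""
    labels = {orig: f"Action{i}" for i, orig in enumerate(selected_ids, start=1)}
    size, rem = divmod(len(selected_ids), n_groups)
    if rem:
        raise ValueError("number of selected actions must divide evenly into groups")
    groups = [selected_ids[g * size:(g + 1) * size] for g in range(n_groups)]
    return labels, groups


def group_files(filenames, groups):
    """Map group index -> list of files whose action id belongs to that group.
    Files that do not parse or whose action was not selected are skipped."""
    action_to_group = {a: g for g, acts in enumerate(groups) for a in acts}
    out = defaultdict(list)
    for name in filenames:
        parsed = parse_name(name)
        if parsed is None:
            continue
        group = action_to_group.get(parsed[0])
        if group is not None:
            out[group].append(name)
    return dict(out)
```

For example, `build_groups(list(range(1, 19)))` would keep original actions 1 to 18 and produce the groups 1 to 6, 7 to 12 and 13 to 18; a different selection or grouping only requires changing the list passed in.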

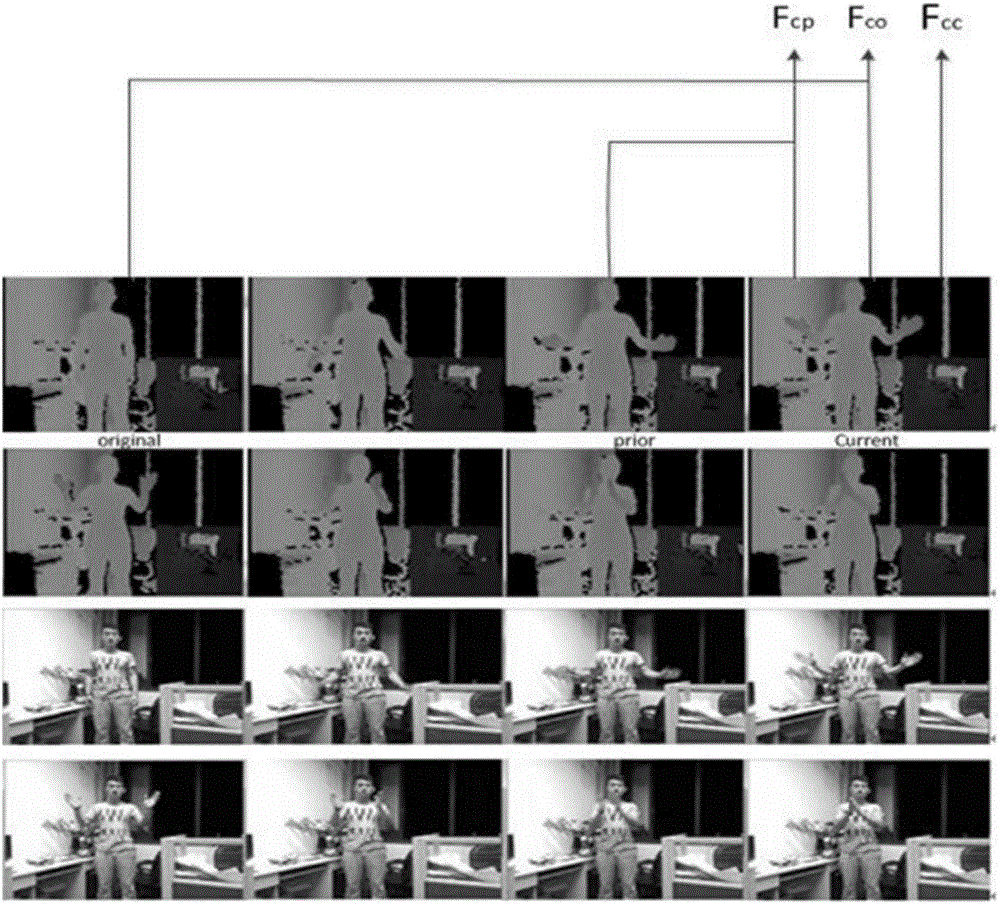

[0050] Step 2: Using a frame selection algorithm improved from the traditional cumulative motion energy method, select effective image fr...
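The improved frame selection algorithm itself is not described in this excerpt, so the Python sketch below illustrates only a traditional cumulative-motion-energy baseline as a point of reference. Under these assumptions, motion energy for a frame is the number of pixels whose depth changes by more than a threshold relative to the previous frame. Frames are then chosen where the normalized cumulative energy crosses evenly spaced levels, so that periods of heavy motion contribute more frames. The threshold value, the energy definition and the function names are illustrative assumptions, not the patent's parameters.

```python
import numpy as np


def motion_energy(depth, threshold=10.0):
    """Per-frame motion energy of a (T, H, W) depth sequence.

    ASSUMPTION: energy = count of pixels whose absolute depth difference from
    the previous frame exceeds `threshold`; frame 0 is given zero energy."""
    depth = np.asarray(depth, dtype=np.float64)
    if depth.ndim != 3 or depth.shape[0] < 2:
        raise ValueError("expected a (T, H, W) sequence with T >= 2")
    diff = np.abs(np.diff(depth, axis=0))            # shape (T-1, H, W)
    energy = (diff > threshold).sum(axis=(1, 2)).astype(np.float64)
    return np.concatenate([[0.0], energy])           # shape (T,)


def select_frames_cme(depth, n_frames, threshold=10.0):
    """Return n_frames indices where normalized cumulative motion energy first
    reaches the evenly spaced levels (k + 0.5) / n_frames. Indices can repeat
    when one frame carries a large share of the total energy."""
    energy = motion_energy(depth, threshold)
    cum = np.cumsum(energy)
    if cum[-1] == 0:
        # No motion detected: fall back to uniform temporal sampling.
        return np.linspace(0, len(cum) - 1, n_frames).round().astype(int)
    cum = cum / cum[-1]                              # normalize to [0, 1]
    levels = (np.arange(n_frames) + 0.5) / n_frames
    return np.searchsorted(cum, levels, side="left")
```

Because the cumulative curve is flat where nothing moves, static stretches at the start or end of a clip receive no selected frames. This is the property that motivates energy-based selection over uniform sampling.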
