Human body motion classification method based on compressed sensing

A technology combining compressed sensing with human motion analysis, applied in the field of video analysis, that addresses the problem of low classification accuracy.


Detailed Description of the Embodiments

[0067] To enable those skilled in the art to better understand the technical solutions of the present invention, the invention is described further below with reference to specific examples.

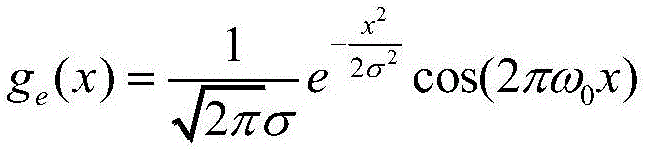

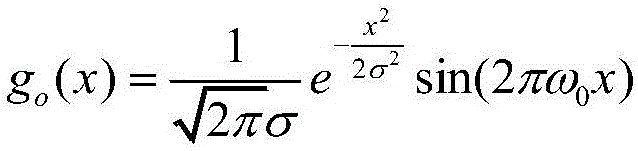

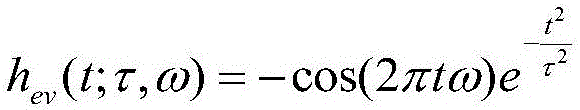

[0068] A human action classification method based on compressed sensing. By treating all action training samples as an over-complete dictionary, the method designs an action classification algorithm based on compressed sensing. The method comprises four steps: spatio-temporal interest point detection, feature representation based on the video bag-of-words model, construction of a visual dictionary, and action classification based on compressed sensing, wherein: Step 1: Spatio-temporal interest point detection, in which a spatio-temporal interest point detector is used to extract features that vary over time. For a video sequence, an interest point is determined by three dimensions: the x and y axes mark its spatial position and the t axis marks its time. The i...
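The last two steps (bag-of-words representation and compressed-sensing classification over a dictionary of training samples) can be illustrated with a minimal sketch in the spirit of sparse representation-based classification (SRC). This excerpt does not show the patent's actual features or sparse solver, so the following are assumptions: interest points have already been detected and described, a visual dictionary (`codebook`, for example k-means cluster centres of the descriptors) is already built, the sparse code is found with orthogonal matching pursuit (OMP) rather than any specific l1 solver, and the helper names `bow_histogram`, `omp` and `src_classify` are hypothetical.

```python
import numpy as np


def bow_histogram(descriptors, codebook):
    """Hypothetical helper: quantize per-interest-point descriptors against a
    visual dictionary and return an L1-normalized bag-of-words histogram."""
    # Squared Euclidean distance from every descriptor to every codeword.
    d2 = ((descriptors[:, None, :] - codebook[None, :, :]) ** 2).sum(axis=2)
    words = d2.argmin(axis=1)  # index of the nearest codeword per point
    hist = np.bincount(words, minlength=len(codebook)).astype(float)
    return hist / max(hist.sum(), 1.0)


def omp(A, y, k):
    """Orthogonal matching pursuit (assumed solver, k >= 1): greedily select
    up to k columns of A and least-squares fit y on them.
    Columns of A are assumed to be L2-normalized."""
    residual = y.copy()
    support = []
    x = np.zeros(A.shape[1])
    for _ in range(k):
        # Column most correlated with what is still unexplained.
        j = int(np.argmax(np.abs(A.T @ residual)))
        if j not in support:
            support.append(j)
        coef, *_ = np.linalg.lstsq(A[:, support], y, rcond=None)
        residual = y - A[:, support] @ coef
        if np.linalg.norm(residual) < 1e-10:
            break
    x[support] = coef
    return x


def src_classify(A, labels, y, k=5):
    """Hypothetical SRC-style classifier. Each column of A is one training
    video's feature vector (the over-complete dictionary); labels[i] is the
    action class of column i. Assumes no all-zero columns."""
    A = A / np.linalg.norm(A, axis=0, keepdims=True)
    y = y / np.linalg.norm(y)
    x = omp(A, y, k)
    best, best_r = None, np.inf
    for c in np.unique(labels):
        # Keep only the coefficients belonging to class c.
        xc = np.where(labels == c, x, 0.0)
        r = np.linalg.norm(y - A @ xc)
        if r < best_r:
            best, best_r = c, r
    return best
```

A test video is assigned to the class whose training columns alone reconstruct its feature vector with the smallest residual. Because all classes compete for one sparse code, the nonzero coefficients tend to concentrate on training samples of the correct action, which is the intuition behind using the training set itself as the dictionary.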
