Visual odometer realization method based on fusion of RGB and depth information

A visual odometry implementation method, applied in computing, image data processing, instruments, etc., that broadens the temporal and spatial range of application, removes the dependence on lighting conditions, and yields accurate and reliable motion estimation results.


Embodiment Construction

[0023] The present invention is described in further detail below with reference to the accompanying drawing and an embodiment:

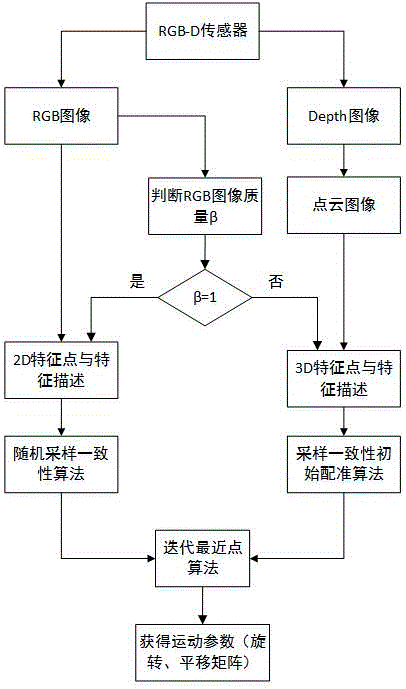

[0024] As shown in Figure 1, a method for implementing a visual odometer that fuses RGB and depth information comprises the following steps:

[0025] 1) With period T, use a Kinect sensor to capture environmental information and output a sequence of RGB images and depth images;

[0026] 2) In temporal order, select each RGB image and its corresponding depth image in turn, and convert the depth image into a 3D point cloud in PCD format;
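The conversion in step 2) is usually done by back-projecting each depth pixel through a pinhole camera model. The sketch below illustrates that standard technique; it is not the patent's own procedure, which the source does not detail. The intrinsics `fx`, `fy`, `cx`, `cy`, the `depth_scale` of 1000 (assuming raw depth in millimetres), and the helper names `depth_to_points` and `to_pcd_ascii` are all assumptions for illustration.

```python
from typing import List, Tuple

Point = Tuple[float, float, float]


def depth_to_points(depth: List[List[float]], fx: float, fy: float,
                    cx: float, cy: float, depth_scale: float = 1000.0) -> List[Point]:
    """Back-project a row-major depth image into camera-frame 3D points.

    Assumption: raw depth values are in millimetres (depth_scale=1000 gives metres),
    and a value of 0 means "no measurement" and is skipped.
    """
    points: List[Point] = []
    for v, row in enumerate(depth):          # v = pixel row
        for u, d in enumerate(row):          # u = pixel column
            if d <= 0:
                continue                     # invalid / missing depth
            z = d / depth_scale
            x = (u - cx) * z / fx            # pinhole model: u = fx * x / z + cx
            y = (v - cy) * z / fy            # pinhole model: v = fy * y / z + cy
            points.append((x, y, z))
    return points


def to_pcd_ascii(points: List[Point]) -> str:
    """Serialize points as an unorganized ASCII PCD v0.7 file (x, y, z floats)."""
    n = len(points)
    header = [
        "# .PCD v0.7 - Point Cloud Data file format",
        "VERSION 0.7",
        "FIELDS x y z",
        "SIZE 4 4 4",
        "TYPE F F F",
        "COUNT 1 1 1",
        f"WIDTH {n}",
        "HEIGHT 1",
        "VIEWPOINT 0 0 0 1 0 0 0",
        f"POINTS {n}",
        "DATA ascii",
    ]
    body = [f"{x:.6f} {y:.6f} {z:.6f}" for x, y, z in points]
    return "\n".join(header + body) + "\n"
```

For example, `depth_to_points([[0, 1000], [2000, 0]], 1.0, 1.0, 0.0, 0.0)` skips the two zero pixels and returns `[(1.0, 0.0, 1.0), (0.0, 2.0, 2.0)]`. Writing an unorganized cloud (`HEIGHT 1`) keeps the sketch simple; an organized cloud would instead keep `WIDTH`/`HEIGHT` equal to the image size and store NaN for invalid pixels.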

[0027] 3) Perform brightness, color cast, and blur detection on the selected RGB image to judge its image quality β: compute the brightness parameter L, the color cast parameter C, and the blur parameter F. If L = 1, C = 1, and F = 1, the RGB image quality is good and β = 1; otherwise, the RGB image quality is poor and β = 0;
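The source does not say how L, C, and F are computed, only that each is a binary flag and that β = 1 exactly when all three equal 1. The sketch below fills each flag with a common, swapped-in heuristic: mean luminance within a range for brightness, a gray-world check on channel means for color cast, and the variance of a 4-neighbour Laplacian for blur. All thresholds and function names here are hypothetical and would need tuning on real Kinect frames.

```python
from statistics import mean, pvariance
from typing import List, Tuple

RGB = Tuple[int, int, int]


def luminance(img: List[List[RGB]]) -> List[List[float]]:
    """Convert an RGB image (rows of (r, g, b), 0-255) to grayscale (ITU-R BT.601 weights)."""
    return [[0.299 * r + 0.587 * g + 0.114 * b for (r, g, b) in row] for row in img]


def brightness_flag(gray: List[List[float]], low: float = 40.0, high: float = 220.0) -> int:
    """L = 1 if mean luminance lies in [low, high] (hypothetical thresholds)."""
    m = mean(p for row in gray for p in row)
    return 1 if low <= m <= high else 0


def color_cast_flag(img: List[List[RGB]], max_dev: float = 0.2) -> int:
    """C = 1 if the R, G, B means stay within max_dev (relative) of their average.

    Gray-world assumption: a scene without color cast averages to gray.
    """
    pixels = [p for row in img for p in row]
    means = [mean(p[c] for p in pixels) for c in range(3)]
    avg = mean(means)
    if avg == 0:
        return 1  # all-black image: cast is undefined; the brightness flag rejects it
    dev = max(abs(m - avg) for m in means) / avg
    return 1 if dev <= max_dev else 0


def blur_flag(gray: List[List[float]], min_var: float = 100.0) -> int:
    """F = 1 if the variance of the 4-neighbour Laplacian is high enough (sharp edges)."""
    h, w = len(gray), len(gray[0])
    lap = [gray[y - 1][x] + gray[y + 1][x] + gray[y][x - 1] + gray[y][x + 1] - 4 * gray[y][x]
           for y in range(1, h - 1) for x in range(1, w - 1)]
    if not lap:
        return 0  # image too small to evaluate sharpness
    return 1 if pvariance(lap) >= min_var else 0


def image_quality(img: List[List[RGB]]) -> Tuple[int, int, int, int]:
    """Return (L, C, F, beta), where beta = 1 only if L = C = F = 1."""
    gray = luminance(img)
    L = brightness_flag(gray)
    C = color_cast_flag(img)
    F = blur_flag(gray)
    beta = 1 if (L, C, F) == (1, 1, 1) else 0
    return L, C, F, beta
```

A high-contrast, neutral checkerboard passes all three checks, while a uniform gray frame fails the blur check (its Laplacian is zero everywhere), so β = 0 for it. Since the flags are binary, β is equivalently the product L·C·F.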

[0028] 4) ...
