Robot Vision Grasping Method

A robot vision and product picking technology, applied in the field of visual recognition, that addresses problems such as deviation in the grasping operation, deviation in information, and poor timeliness.

Embodiment Construction

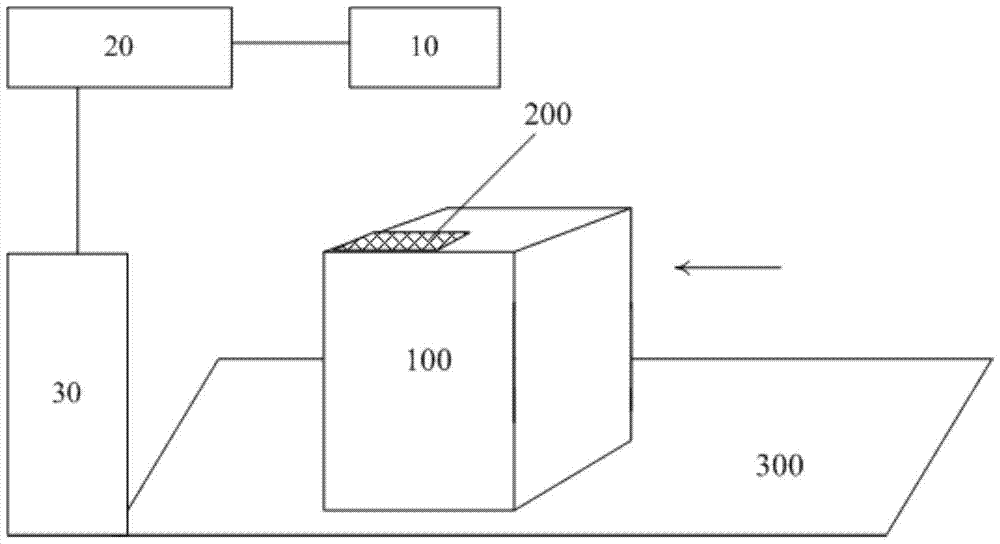

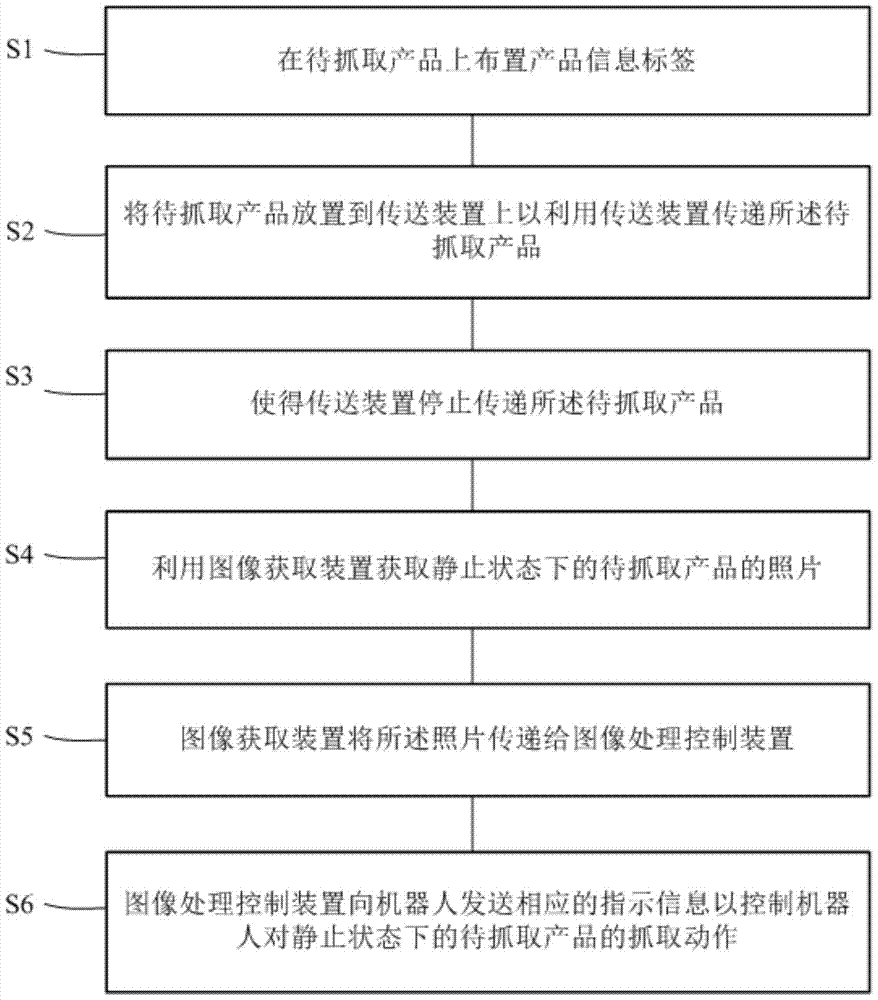

[0024] Figure 1 is a schematic diagram of a robot vision grasping method according to a preferred embodiment of the present invention, and Figure 2 is a flow chart of the robot vision grasping method according to the preferred embodiment of the present invention.

[0025] As shown in Figure 1 and Figure 2, the robot vision grasping method according to a preferred embodiment of the present invention includes:

[0026] First step S1: arranging a product information label 200 on the product 100 to be grasped, wherein the product information label 200 contains size information of the product 100 to be grasped and position information of the product information label 200 on the product 100 to be grasped;

[0027] Preferably, the product information label 200 has a standardized shape and a standardized size.
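
As an illustration of the information that step S1 places on the label, the following is a minimal sketch of one possible record layout. It is not taken from the patent: the class name `ProductLabelInfo`, the field names, the millimetre units, the JSON encoding, the corner-based coordinate convention, and the assumption that the labelled surface measures length by width are all hypothetical choices made for this example.

```python
import json
from dataclasses import dataclass, asdict
from typing import Tuple


@dataclass(frozen=True)
class ProductLabelInfo:
    """Hypothetical payload carried by product information label 200."""

    # Size of the product to be grasped (assumed unit: millimetres).
    length_mm: float
    width_mm: float
    height_mm: float
    # Position of the label center on the labelled surface, measured from
    # one corner of that surface (assumed convention), in millimetres.
    label_x_mm: float
    label_y_mm: float

    def to_payload(self) -> str:
        """Serialize the record to a JSON string (assumed encoding)."""
        return json.dumps(asdict(self), sort_keys=True)

    @classmethod
    def from_payload(cls, payload: str) -> "ProductLabelInfo":
        """Rebuild the record from a JSON string read off the label."""
        return cls(**json.loads(payload))

    def offset_to_surface_center(self) -> Tuple[float, float]:
        """Vector from the label center to the center of the labelled surface."""
        return (self.length_mm / 2 - self.label_x_mm,
                self.width_mm / 2 - self.label_y_mm)


# Example: a 400 x 300 x 200 mm product with the label 20 mm in from a corner.
info = ProductLabelInfo(400.0, 300.0, 200.0, 20.0, 20.0)
restored = ProductLabelInfo.from_payload(info.to_payload())
print(restored.offset_to_surface_center())  # (180.0, 130.0)
```

Because the record holds both the product size and the label's own position on the product, a vision system that locates the label could, under these assumptions, infer where the rest of the product lies relative to it.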

[0028] Preferably, the product information label 200 is arranged at a corner of a specific surface of the product 100 to be grasped; or, ...

© 2025 PatSnap. All rights reserved.