Augmented reality virtual keyboard input method and apparatus using same

A virtual keyboard input and augmented reality technology, applied in the fields of user/computer interaction input/output, computer components, graphics reading, etc. It can solve problems such as inability to find, slow response speed, and the need for further improvement in adaptability and robustness.


Examples

Embodiment 1

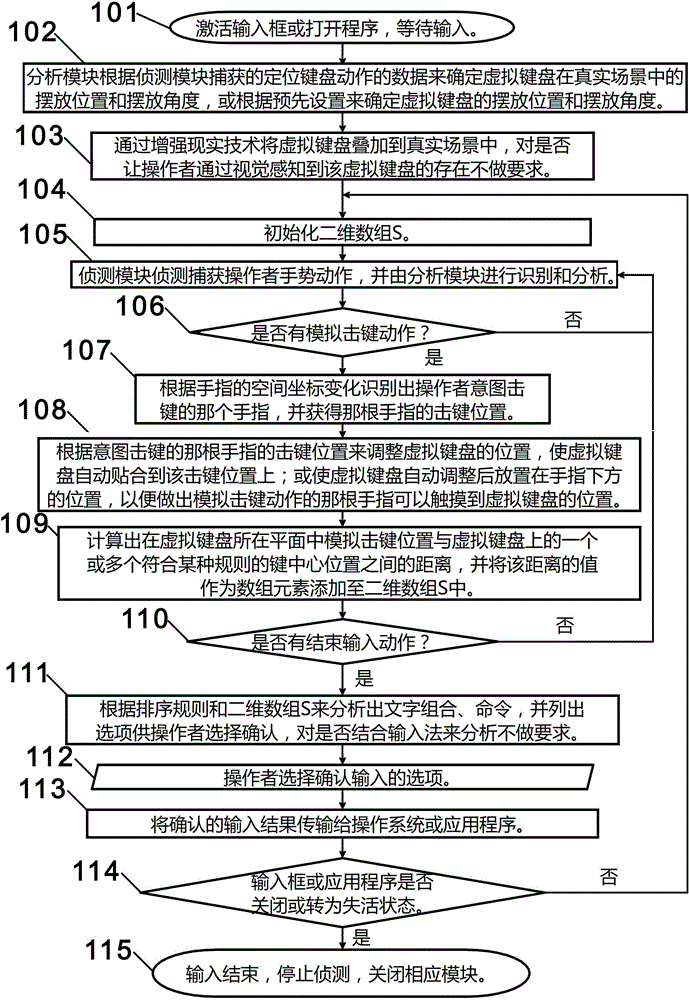

[0077] Figure 1 shows the flowchart of Embodiment 1 of the present invention.

[0078] Specifically, each time a simulated keystroke is detected, the distances between the keystroke position and the positions of those keys on the virtual keyboard that satisfy certain rules are calculated first, and these distance values are saved until the operator is recognized as having made the end-of-input action. The sorting result is then calculated from the saved distance values according to the sorting rules.
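The deferred scheme of paragraph [0078] can be sketched as below. This is a minimal illustration, not the patented implementation: the source does not state the key-selection rules or the sorting rules, so the sketch assumes that a key is recorded when it lies within a fixed radius of the keystroke, and that candidate words are sorted by the sum of their per-keystroke distances. The key layout, `DeferredRanker`, `on_keystroke`, `on_end_input`, and the lexicon are all hypothetical.

```python
import math
from typing import Dict, List, Tuple

Point = Tuple[float, float]

# Hypothetical layout: key label -> centre (x, y) on the virtual keyboard, in key-pitch units.
KEY_CENTERS: Dict[str, Point] = {
    "q": (0.0, 0.0), "w": (1.0, 0.0), "e": (2.0, 0.0),
    "a": (0.25, 1.0), "s": (1.25, 1.0), "d": (2.25, 1.0),
}


class DeferredRanker:
    """Saves per-keystroke key distances; ranks only after the end-of-input action."""

    def __init__(self, keys: Dict[str, Point], radius: float = 1.5) -> None:
        self.keys = keys
        self.radius = radius  # assumed rule: keep only keys within this distance
        self.history: List[Dict[str, float]] = []

    def on_keystroke(self, point: Point) -> None:
        """Compute and save the distances from this keystroke to the qualifying keys."""
        px, py = point
        dists: Dict[str, float] = {}
        for label, (kx, ky) in self.keys.items():
            d = math.hypot(px - kx, py - ky)
            if d <= self.radius:
                dists[label] = d
        self.history.append(dists)

    def on_end_input(self, lexicon: List[str]) -> List[str]:
        """Sort candidate words once the operator ends input, then reset the history."""
        scored = []
        for word in lexicon:
            # Only words with one letter per keystroke, each letter near its keystroke.
            if len(word) != len(self.history):
                continue
            if all(ch in d for ch, d in zip(word, self.history)):
                # Assumed sorting rule: smallest summed distance first.
                scored.append((sum(d[ch] for ch, d in zip(word, self.history)), word))
        scored.sort()
        self.history = []
        return [word for _, word in scored]


ranker = DeferredRanker(KEY_CENTERS)
ranker.on_keystroke((1.1, 0.1))  # lands near "w"
ranker.on_keystroke((2.0, 0.1))  # lands near "e"
print(ranker.on_end_input(["we", "ew", "sd", "qa", "sad"]))
```

Deferring the ranking to the end-of-input action means only raw distances are stored per keystroke, and the lexicon is scanned once per word rather than once per keystroke.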

[0079] The flowchart includes:

[0080] Step 101: Activate the input box or open the program, and wait for input.

[0081] Specifically, activating the input box means either reactivating the input box to enter the input state when the program is already open, or automatically activating the input box to enter the input state after the program is opened. Other ways of entering the input state may also be used.

[0082] Step 102: The analysis module determines the placement position ...

Embodiment 2

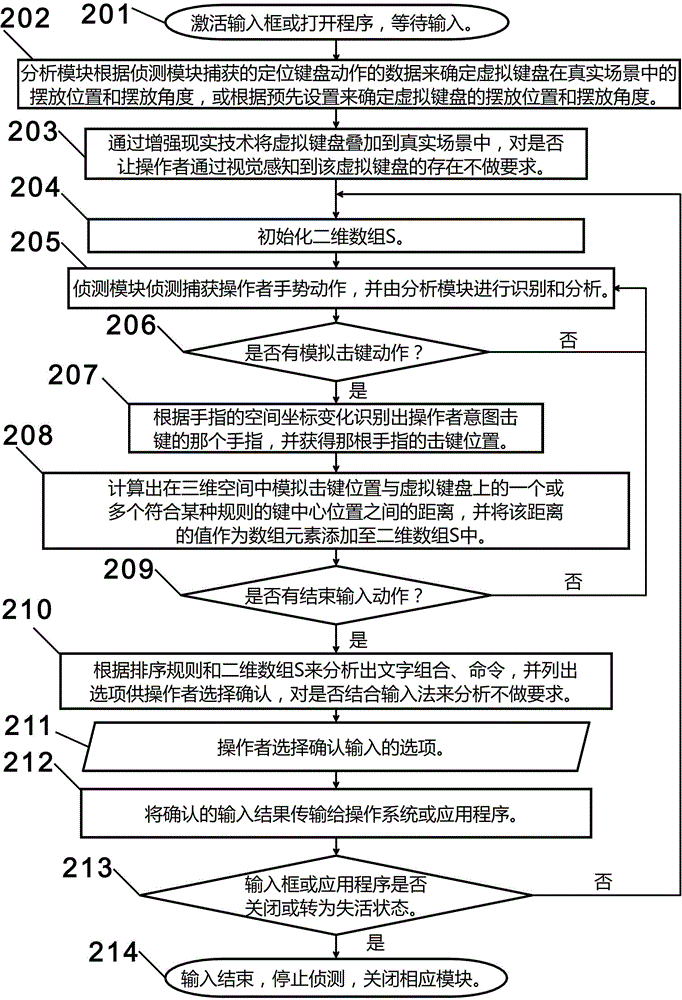

[0220] Figure 2 shows the flowchart of Embodiment 2 of the present invention.

[0221] Specifically, each time a simulated keystroke is detected, the distances between the keystroke position and the positions of those keys on the virtual keyboard that satisfy certain rules are calculated first, and these distance values are saved until the operator is recognized as having made the end-of-input action. The sorting result is then calculated from the saved distance values according to the sorting rules.

[0222] The flowchart includes:

[0223] Step 201: Activate the input box or open the program, and wait for input.

[0224] Specifically, this is the same as Step 101.

[0225] Step 202: The analysis module determines the placement position and placement angle of the virtual keyboard in the real scene according to the keyboard movement data captured by the detection module, or determines the placement position and placement angle of the virtual keyboard according to preset settings...
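Step 202 fixes the virtual keyboard's placement position and placement angle in the real scene. One way to use that placement, sketched below under simplifying assumptions, is to map fingertip positions between scene coordinates and keyboard-local coordinates, so that key distances can be measured in the keyboard's own frame. The sketch assumes the keyboard lies in a plane and reduces the placement angle to a single in-plane rotation; the functions `keyboard_to_world` and `world_to_keyboard` are hypothetical, and a real AR pipeline would typically work with a full 3D pose.

```python
import math
from typing import Tuple

Point = Tuple[float, float]


def keyboard_to_world(local: Point, origin: Point, angle: float) -> Point:
    """Place a keyboard-local point in the scene: rotate by `angle`, then translate by `origin`."""
    c, s = math.cos(angle), math.sin(angle)
    lx, ly = local
    return (origin[0] + c * lx - s * ly, origin[1] + s * lx + c * ly)


def world_to_keyboard(point: Point, origin: Point, angle: float) -> Point:
    """Inverse mapping: express a scene point (e.g. a fingertip) in keyboard-local coordinates."""
    c, s = math.cos(angle), math.sin(angle)
    dx, dy = point[0] - origin[0], point[1] - origin[1]
    # Multiplying by the transpose of the rotation matrix undoes the rotation.
    return (c * dx + s * dy, -s * dx + c * dy)


# Hypothetical placement: keyboard origin at (10, 5) in the scene, rotated 90 degrees.
origin, angle = (10.0, 5.0), math.pi / 2
tip = keyboard_to_world((1.0, 0.0), origin, angle)
print(world_to_keyboard(tip, origin, angle))
```

Once a keystroke is expressed in keyboard-local coordinates, the key centres can be stored once in that frame and reused however the keyboard is moved or rotated.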

Embodiment 3

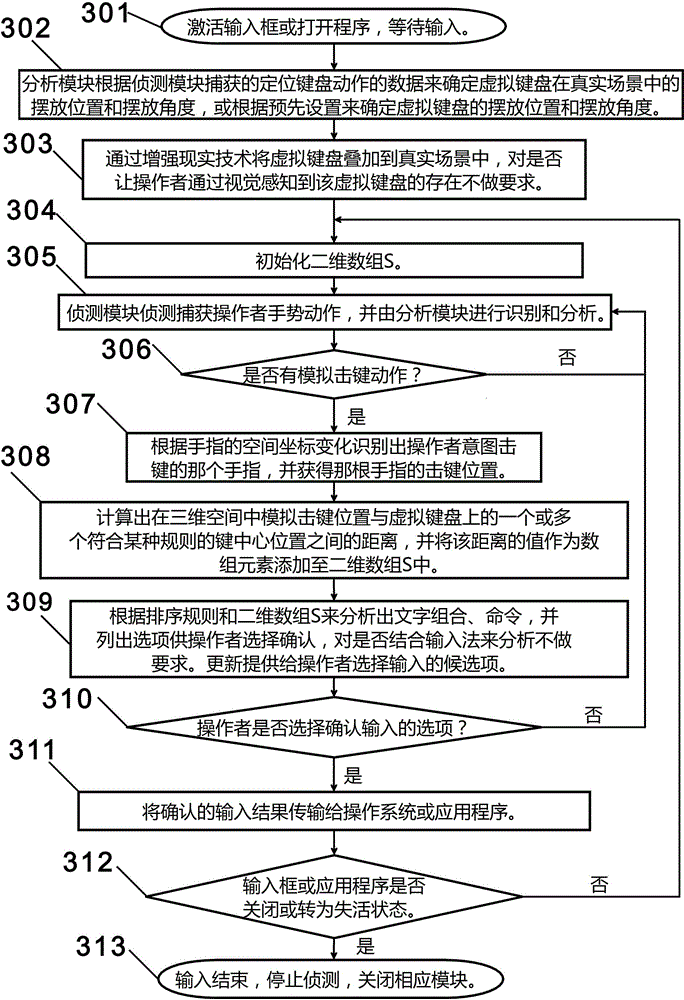

[0257] Figure 3 shows the flowchart of Embodiment 3 of the present invention.

[0258] Specifically, after each simulated keystroke is completed, the distance values obtained from this simulated keystroke, together with the distance values obtained from the previous simulated keystrokes, are analyzed according to the sorting rules and the candidates are updated, so that the operator can select one to confirm the input. If the operator does not select any of these candidates but continues making simulated keystrokes, this process is repeated through step 305; the input is completed only when the operator selects a candidate and confirms it. The process of this embodiment has no step of detecting, analyzing, and judging whether the operator has made an end-of-input action.
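Embodiment 3 differs in that the candidates are re-ranked after every simulated keystroke, and the loop ends only when the operator confirms a candidate. A minimal sketch under assumptions of the same kind follows: radius-based key selection, summed distance as the sorting rule, and prefix matching so that longer words can remain candidates mid-word. None of these rules is specified in the source; `IncrementalCandidates`, its methods, the layout, and the lexicon are hypothetical.

```python
import math
from typing import Dict, List, Tuple

Point = Tuple[float, float]

# Hypothetical layout: key label -> centre (x, y) on the virtual keyboard.
KEYS: Dict[str, Point] = {
    "q": (0.0, 0.0), "w": (1.0, 0.0), "e": (2.0, 0.0),
    "a": (0.25, 1.0), "s": (1.25, 1.0), "d": (2.25, 1.0),
}


class IncrementalCandidates:
    """Re-ranks candidates after every keystroke; there is no end-of-input detection."""

    def __init__(self, keys: Dict[str, Point], lexicon: List[str],
                 radius: float = 1.5, top_k: int = 3) -> None:
        self.keys = keys
        self.lexicon = lexicon
        self.radius = radius  # assumed rule for which keys are recorded
        self.top_k = top_k
        self.history: List[Dict[str, float]] = []

    def keystroke(self, point: Point) -> List[str]:
        """Record this keystroke's key distances, then return the updated candidates."""
        px, py = point
        dists: Dict[str, float] = {}
        for label, (kx, ky) in self.keys.items():
            d = math.hypot(px - kx, py - ky)
            if d <= self.radius:
                dists[label] = d
        self.history.append(dists)
        return self._rank()

    def _rank(self) -> List[str]:
        n = len(self.history)
        scored = []
        for word in self.lexicon:
            # Assumption: a word stays a candidate while its first n letters are all near keys.
            if len(word) < n or not all(ch in d for ch, d in zip(word, self.history)):
                continue
            score = sum(d[ch] for ch, d in zip(word, self.history))
            scored.append((score, len(word), word))
        scored.sort()  # smallest summed distance first, then shorter words
        return [word for _, _, word in scored[: self.top_k]]

    def select(self, word: str) -> str:
        """Operator confirms a candidate: input completes and the history is cleared."""
        self.history.clear()
        return word


session = IncrementalCandidates(KEYS, ["we", "wed", "ew", "sad", "dew"])
print(session.keystroke((1.1, 0.1)))
print(session.keystroke((2.0, 0.1)))
print(session.select("wed"))
```

Because the ranking runs after every keystroke, the operator can confirm a word such as "wed" before typing its last letter, which is what removes the need for a separate end-of-input action.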

[0259] The flowchart includes:

[0260] Step 301: Activate the input box or open the program, a...
