An adaptive caching-strategy method for a storage system

A caching-strategy technique for storage systems, applicable to memory systems, instruments, and electrical digital data processing. It addresses the slow response of storage systems to changes in data access patterns, with the effect of reducing cache pollution, improving the cache hit rate, and improving performance.

Embodiment Construction

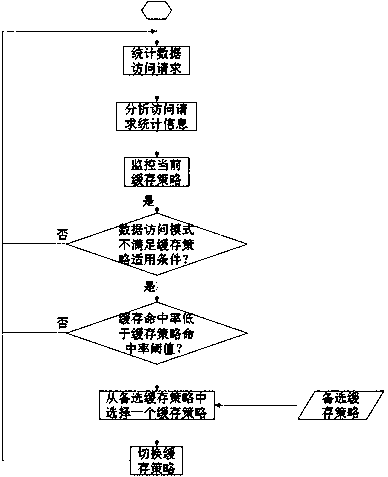

[0021] The present invention is described in detail below with reference to the accompanying drawings. The adaptive caching-strategy method for a storage system includes the following steps:

[0022] (1) Monitor and count the data access requests received by the storage system, and analyze the resulting statistics to determine the storage system's data access pattern. The data access pattern refers to the access characteristics of the requests that upper-layer applications send to the storage system, including the ratio of read requests to write requests, the randomness of data access, and whether hot data exists.
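
As an illustration of step (1), the following is a minimal Python sketch of how the three named characteristics (read/write ratio, randomness, presence of hot data) might be derived from request counts. It is not the patented implementation. The class `AccessStats`, its method names, the definition of randomness as the share of non-sequential requests, and the `hot_fraction`/`hot_share` thresholds are all assumptions introduced here for illustration.

```python
from collections import Counter
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class AccessStats:
    """Hypothetical per-window request statistics (names are illustrative)."""
    reads: int = 0
    writes: int = 0
    non_sequential: int = 0  # requests that did not start where the previous one ended
    block_hits: Counter = field(default_factory=Counter)
    next_expected: Optional[int] = None  # block address following the previous request

    def record(self, op: str, block: int, length: int = 1) -> None:
        """Count one request; op is 'r' or 'w', block is the starting block address."""
        if op == "r":
            self.reads += 1
        elif op == "w":
            self.writes += 1
        else:
            raise ValueError(f"unknown operation: {op!r}")
        if self.next_expected is not None and block != self.next_expected:
            self.non_sequential += 1
        self.next_expected = block + length
        for b in range(block, block + length):
            self.block_hits[b] += 1

    def pattern(self, hot_fraction: float = 0.2, hot_share: float = 0.8) -> dict:
        """Summarize the window.

        Assumption: hot data exists when the most-accessed `hot_fraction` of
        distinct blocks (at least one block) receives at least `hot_share`
        of all block accesses.
        """
        total = self.reads + self.writes
        if total == 0:
            return {"read_ratio": 0.0, "randomness": 0.0, "has_hot_data": False}
        top_n = max(1, int(len(self.block_hits) * hot_fraction))
        top_hits = sum(count for _, count in self.block_hits.most_common(top_n))
        return {
            "read_ratio": self.reads / total,
            # Share of requests (after the first) that broke sequential order.
            "randomness": self.non_sequential / max(total - 1, 1),
            "has_hot_data": top_hits / sum(self.block_hits.values()) >= hot_share,
        }


# Usage: nine reads of the same block followed by one write elsewhere.
stats = AccessStats()
for _ in range(9):
    stats.record("r", 100)
stats.record("w", 500)
summary = stats.pattern()
```

A real storage system would likely keep such counters per time window and reset them periodically so that the summary tracks changes in the workload; the window length is left open here because the source does not specify it.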

[0023] The request-statistics function and its statistical records can be merged and shared with other functional modules in the storage system, which reduces the performance overhead of implementing request statistics. At the same time, statistics on acce...
