High-efficiency SVM active semi-supervised learning algorithm

A technique combining semi-supervised learning and active learning, applied in fields such as computing, computer components, and instruments. It addresses problems such as the lack of incremental learning ability, degraded active learning performance, and high complexity.

Embodiment Construction

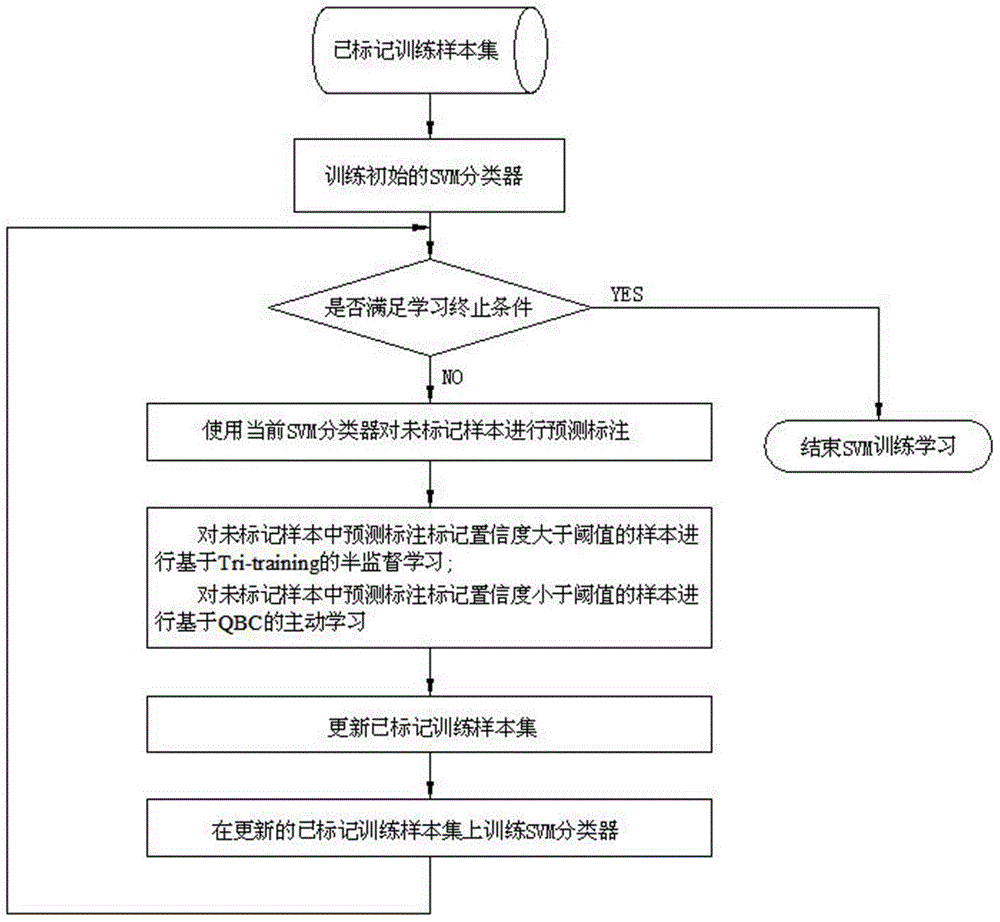

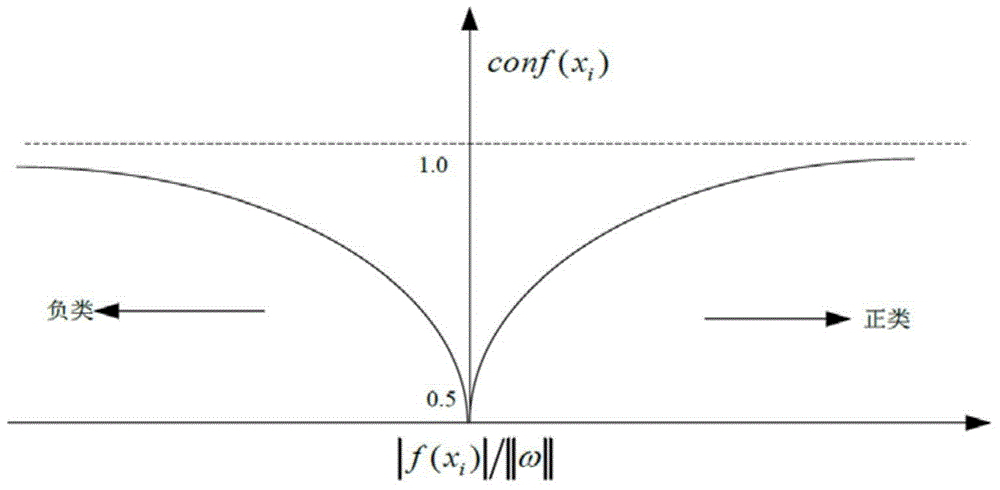

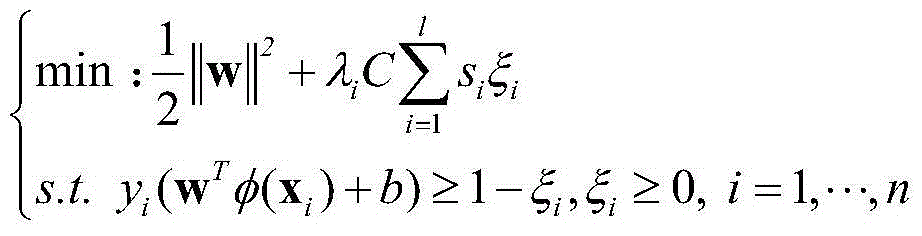

[0107] SVM active learning generally selects for labeling the samples about which the current learner is most uncertain (lowest confidence); samples that are relatively certain or already well represented are not used for training. Semi-supervised learning methods, by contrast, can use the classifier itself to label these relatively certain, high-confidence samples, making full use of the information contained in unlabeled samples for classifier training. This can avoid the error propagation caused by the uncertainty of the initial classifier in SVM active learning, thereby improving SVM active learning performance. On this basis, the present invention provides an SVM active semi-supervised learning algorithm that fuses semi-supervised learning and active learning. The process of the efficient SVM active semi-supervised learning algorithm is described in detail below with reference to Figure 1.
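The division of labor described in [0107] can be illustrated with a minimal sketch. It is not the patented procedure, whose steps are not reproduced in this excerpt; it only shows the general idea of sending low-confidence samples to an annotator and letting the classifier label high-confidence ones. The function name `split_pool`, the parameters `n_queries` and `confidence_threshold`, and the use of the absolute SVM decision value |f(x)| as the confidence measure are all assumptions made for illustration.

```python
from typing import List, Sequence, Tuple


def split_pool(
    margins: Sequence[float],
    n_queries: int,
    confidence_threshold: float,
) -> Tuple[List[int], List[Tuple[int, int]]]:
    """Split an unlabeled pool by signed SVM decision value f(x).

    Hypothetical helper, not taken from the patent text.
    Returns (query_indices, pseudo_labels):
      - query_indices: the n_queries samples closest to the hyperplane
        (smallest |f(x)|), to be labeled by a human annotator.
      - pseudo_labels: (index, label) pairs for the remaining samples with
        |f(x)| >= confidence_threshold, labeled by the sign of f(x).
    """
    # Active-learning side: rank samples from least to most confident.
    order = sorted(range(len(margins)), key=lambda i: abs(margins[i]))
    query_indices = order[:n_queries]
    queried = set(query_indices)

    # Semi-supervised side: the classifier labels confident samples itself.
    pseudo_labels = [
        (i, 1 if margins[i] > 0 else -1)
        for i in range(len(margins))
        if i not in queried and abs(margins[i]) >= confidence_threshold
    ]
    return query_indices, pseudo_labels


# Example decision values for six unlabeled samples (made-up numbers).
decision_values = [0.05, -1.8, 2.3, -0.2, 0.9, -1.1]
to_query, pseudo = split_pool(decision_values, n_queries=2, confidence_threshold=1.0)
print(to_query)  # [0, 3]
print(pseudo)    # [(1, -1), (2, 1), (5, -1)]
```

In a full training loop, the queried samples (with their true labels) and the pseudo-labeled samples would both be added to the training set before the SVM is retrained. Sample 4 in the example falls between the two regimes and stays in the unlabeled pool for a later round.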

[0108] In this embodiment, the data uses the breast-cancer-wisconsin, ionosphere, house-votes-84, he...
