Narrow-field-of-view dual-camera image fusion method based on a large virtual camera

Virtual-camera and dual-camera technology, applied in the field of aerospace and aerial photogrammetry, addresses problems such as difficulty in surveying and mapping applications, poor geometric accuracy, and the lack of a physical imaging model for stitched images.


Embodiment Construction

[0044] The technical solution of the present invention will be described in detail below in conjunction with the drawings and embodiments.

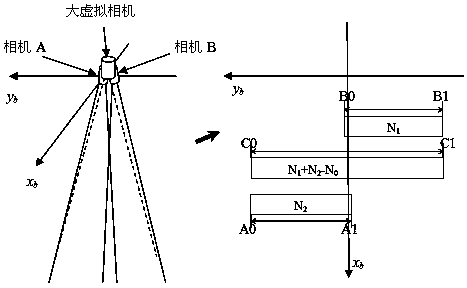

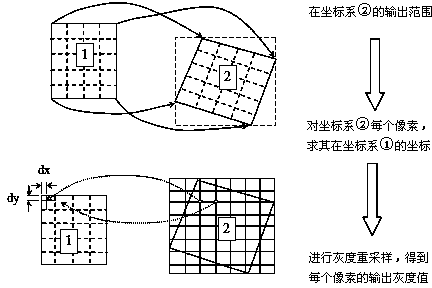

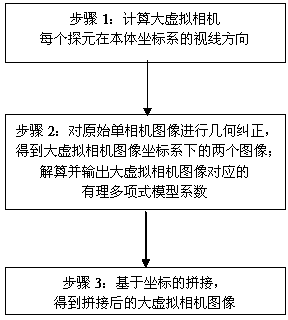

[0045] Referring to image 3, the embodiment targets narrow-field-of-view dual-camera images acquired by simultaneous push-broom imaging. The steps are as follows, and the whole process can be automated in computer software:

[0046] Step 1. According to the geometric imaging parameters of the two single cameras, the geometric imaging parameters of the large virtual camera are established.

[0047] The invention proposes the concept of a large virtual camera and calculates the line-of-sight direction of each detector element (probe) in the satellite body coordinate system.
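As a rough illustration of what a per-detector line of sight in the body coordinate system can look like, here is a minimal sketch. It assumes an ideal pinhole linear array laid out across-track and a single roll installation angle between camera and body frames. The function names, parameters, sign conventions, and numeric values are hypothetical and are not taken from the patent, whose own formulas may differ.

```python
import math


def detector_los_camera(index, n_detectors, pixel_size, focal_length):
    """Unit line of sight of one detector in a hypothetical camera frame.

    Assumption: ideal pinhole, linear array along the camera y-axis
    (across-track), centred on the optical axis, looking along +z.
    """
    # Across-track offset of the detector from the array centre.
    y = (index - (n_detectors - 1) / 2.0) * pixel_size
    norm = math.sqrt(y * y + focal_length * focal_length)
    return (0.0, y / norm, focal_length / norm)


def roll_matrix(angle_rad):
    """Rotation about the x-axis; stands in for a camera installation matrix."""
    c, s = math.cos(angle_rad), math.sin(angle_rad)
    return ((1.0, 0.0, 0.0), (0.0, c, -s), (0.0, s, c))


def to_body(rotation, vector):
    """Apply a 3x3 rotation (tuple of rows) to a 3-vector."""
    return tuple(sum(rotation[r][k] * vector[k] for k in range(3)) for r in range(3))


def detector_los_body(index, n_detectors, pixel_size, focal_length, roll_rad):
    """Line of sight of one detector expressed in the body frame (assumed roll only)."""
    los_cam = detector_los_camera(index, n_detectors, pixel_size, focal_length)
    return to_body(roll_matrix(roll_rad), los_cam)


if __name__ == "__main__":
    # Two hypothetical cameras tilted to opposite sides of the nadir direction.
    for name, roll_deg in (("camera A", -0.5), ("camera B", 0.5)):
        first = detector_los_body(0, 5, 1e-5, 1.0, math.radians(roll_deg))
        last = detector_los_body(4, 5, 1e-5, 1.0, math.radians(roll_deg))
        print(name, first, last)
```

With both cameras' detector directions expressed in one common frame like this, a single virtual array spanning the combined field of view can be defined from them, which is the role Step 1 assigns to the large virtual camera.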

[0048] First, the satellite body coordinate system and the single camera coordinate system are introduced.

[0049] Satellite body coordinate system $o\text{-}X_bY_bZ_b$: the origin $o$ is located at the center of mass of the satellite, and the $X_b$, $Y_b$, $Z_b$ axes are the ro...
