Multi-User Natural Scene Label Ranking Method

A natural scene label ranking method, applied in the fields of instruments, character and pattern recognition, computer components, and the like. It addresses two problems: a single user's labeling results are inaccurate, and labeling and ranking information is not fully used. It achieves value-added information, high speed, and high accuracy.

Detailed Description of the Embodiments

[0027] The present invention will be described in detail below in conjunction with the accompanying drawings and specific examples.

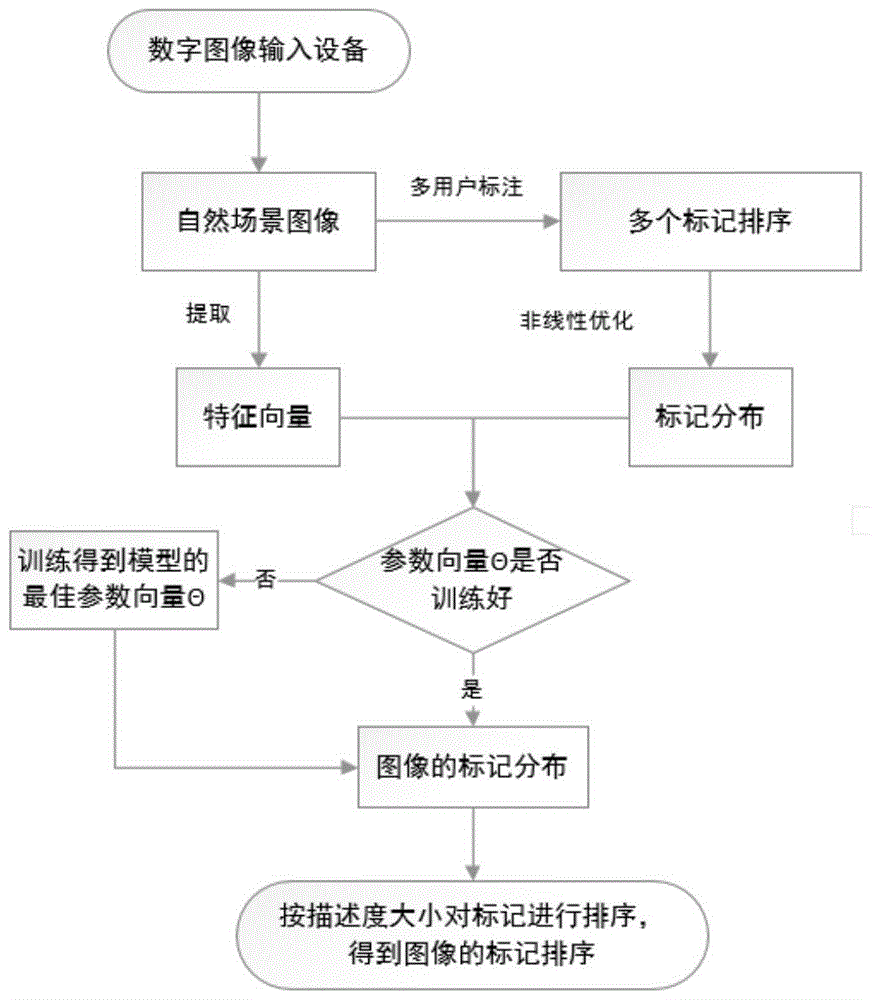

[0028] As shown in Figure 1, the multi-user natural scene label ranking method of the present invention comprises the following steps:

[0029] (1) Obtain a set of natural scene images for training, and extract a feature vector from each natural scene image;

[0030] (2) Obtain multiple label rankings of the natural scene images from an image labeling system based on user interest. In this system, users rank the relevant labels of each natural scene image according to their own interests, and the system then automatically stores the ranking results in a database.

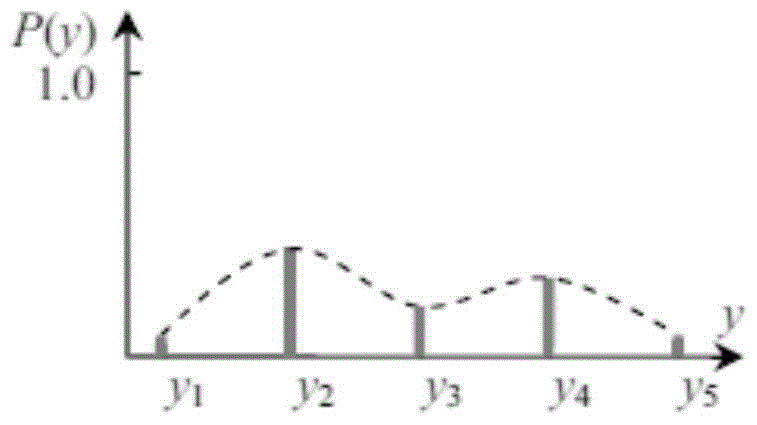

[0031] (3) Convert the multiple label rankings into a label distribution by constructing a nonlinear optimization problem and solving it with the interior-point method. The nonlinear optimization problem is constructed as follows: ...
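Step (1) above does not say which features are extracted from each image. As a minimal sketch only, assuming each image is available as a list of 8-bit RGB pixel tuples, a quantized color histogram is one common way to get a fixed-length feature vector. The function `color_histogram` and its `bins_per_channel` parameter are hypothetical illustrations, not the patent's feature extractor.

```python
def color_histogram(pixels, bins_per_channel=4):
    """Hypothetical step-(1) feature: normalized joint RGB histogram.

    `pixels` is an iterable of (r, g, b) tuples with 8-bit channel values.
    """
    bins = bins_per_channel
    hist = [0.0] * (bins ** 3)
    count = 0
    for r, g, b in pixels:
        # Map each 0..255 channel value to a bin index in 0..bins-1.
        ri, gi, bi = r * bins // 256, g * bins // 256, b * bins // 256
        hist[(ri * bins + gi) * bins + bi] += 1.0
        count += 1
    if count == 0:
        return hist
    # Normalize so images of different sizes are comparable.
    return [h / count for h in hist]


# One feature vector per training image (toy two-pixel "images").
training_images = [[(0, 0, 0), (255, 255, 255)], [(200, 30, 30), (210, 40, 35)]]
features = [color_histogram(img) for img in training_images]
print(len(features[0]))  # prints 64 (4 bins per channel, 3 channels)
```

Any other fixed-length descriptor could be swapped in; later steps only need one vector per image.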
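Step (2) only states that the labeling system stores each user's label ranking in a database. A minimal sketch using Python's built-in `sqlite3` module follows. It assumes a hypothetical `label_ranking` table with one row per (image, user, rank position). The table layout and the helpers `create_store`, `save_ranking` and `load_rankings` are illustrative, not the patent's schema.

```python
import sqlite3


def create_store(path=":memory:"):
    """Open a database with a hypothetical table for per-user label rankings."""
    conn = sqlite3.connect(path)
    conn.execute(
        "CREATE TABLE IF NOT EXISTS label_ranking ("
        " image_id TEXT NOT NULL, user_id TEXT NOT NULL,"
        " position INTEGER NOT NULL, label TEXT NOT NULL,"
        " PRIMARY KEY (image_id, user_id, position))"
    )
    return conn


def save_ranking(conn, image_id, user_id, ranked_labels):
    """Store one user's ranking for one image (most interesting label first),
    replacing any ranking that user submitted earlier for the same image."""
    with conn:  # commits on success, rolls back on error
        conn.execute(
            "DELETE FROM label_ranking WHERE image_id = ? AND user_id = ?",
            (image_id, user_id),
        )
        conn.executemany(
            "INSERT INTO label_ranking VALUES (?, ?, ?, ?)",
            [(image_id, user_id, pos, label) for pos, label in enumerate(ranked_labels)],
        )


def load_rankings(conn, image_id):
    """Return {user_id: [labels in ranked order]} for one image."""
    rows = conn.execute(
        "SELECT user_id, label FROM label_ranking"
        " WHERE image_id = ? ORDER BY user_id, position",
        (image_id,),
    )
    rankings = {}
    for user_id, label in rows:
        rankings.setdefault(user_id, []).append(label)
    return rankings


conn = create_store()
save_ranking(conn, "img-001", "alice", ["sky", "tree", "car"])
save_ranking(conn, "img-001", "bob", ["tree", "sky", "car"])
print(load_rankings(conn, "img-001"))
# prints {'alice': ['sky', 'tree', 'car'], 'bob': ['tree', 'sky', 'car']}
```

Keeping one row per rank position lets the multiple rankings for an image be read back in order with a single query.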
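Step (3) merges the rankings, but this excerpt elides how the nonlinear optimization problem is constructed. The sketch below illustrates only the interior-point technique, using an assumed formulation that is *not* the patent's:

- Labels are first put in a consensus order by mean rank position (Borda-style).
- The label distribution d is the maximum-entropy point on the probability simplex such that each consecutive gap d_a - d_b is at least the fraction of users ranking a above b, scaled by 1/K² for K labels so the constraints are always satisfiable.
- This convex problem is solved with a log-barrier interior-point method. Newton centering steps keep sum(d) = 1 through a small KKT system.

The names `consensus_order`, `solve_linear`, `rankings_to_distribution` and `eps` are hypothetical. Labels are integer indices, and each ranking is assumed to list every label, most relevant first.

```python
import math


def consensus_order(rankings, num_labels):
    """Sort label indices by mean rank position over all users (Borda-style)."""
    mean_pos = [0.0] * num_labels
    for ranking in rankings:
        for pos, label in enumerate(ranking):
            mean_pos[label] += pos / len(rankings)
    return sorted(range(num_labels), key=lambda j: mean_pos[j])


def solve_linear(a, b):
    """Solve a x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]
    for col in range(n):
        piv = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[piv] = m[piv], m[col]
        for r in range(col + 1, n):
            f = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= f * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        x[r] = (m[r][n] - sum(m[r][c] * x[c] for c in range(r + 1, n))) / m[r][r]
    return x


def rankings_to_distribution(rankings, num_labels, eps=1e-6):
    """Hypothetical step (3): max-entropy distribution respecting the consensus
    order, solved with a log-barrier interior-point method."""
    k_n = num_labels
    order = consensus_order(rankings, k_n)
    # Inequalities a . d - b > 0: consecutive consensus labels must differ by a
    # margin = (share of users agreeing) / K^2, and the last label must be > 0.
    cons, margins = [], []
    for hi, lo in zip(order, order[1:]):
        agree = sum(r.index(hi) < r.index(lo) for r in rankings) / len(rankings)
        a = [0.0] * k_n
        a[hi], a[lo] = 1.0, -1.0
        margins.append(agree / k_n ** 2)
        cons.append((a, margins[-1]))
    a_last = [0.0] * k_n
    a_last[order[-1]] = 1.0
    cons.append((a_last, 0.0))

    # Strictly feasible start with sum(d) = 1: every slack equals s > 0.
    total = sum((k + 1) * mg for k, mg in enumerate(margins))
    s = (1.0 - total) / (k_n * (k_n + 1) / 2)
    d, level = [0.0] * k_n, s
    d[order[-1]] = level
    for k in range(k_n - 2, -1, -1):
        level += margins[k] + s
        d[order[k]] = level

    def slacks(x):
        return [sum(ai * xi for ai, xi in zip(a, x)) - b for a, b in cons]

    def phi(x, t):  # barrier objective: t * (negative entropy) - sum(log(slack))
        hs = slacks(x)
        if min(x) <= 0 or min(hs) <= 0:
            return math.inf
        return t * sum(xi * math.log(xi) for xi in x) - sum(math.log(h) for h in hs)

    t = 1.0
    while len(cons) / t > eps:  # m / t bounds the duality gap
        for _ in range(100):  # centering: Newton steps that keep sum(d) = 1
            hs = slacks(d)
            grad = [t * (math.log(x) + 1.0) for x in d]
            hess = [[t / d[i] if i == j else 0.0 for j in range(k_n)] for i in range(k_n)]
            for (a, _), h in zip(cons, hs):
                for i in range(k_n):
                    grad[i] -= a[i] / h
                    for j in range(k_n):
                        hess[i][j] += a[i] * a[j] / h ** 2
            # KKT system [H 1; 1^T 0] [step; w] = [-grad; 0]
            kkt = [row + [1.0] for row in hess] + [[1.0] * k_n + [0.0]]
            step = solve_linear(kkt, [-g for g in grad] + [0.0])[:k_n]
            decrement = -sum(g * dx for g, dx in zip(grad, step))
            if decrement / 2 <= 1e-10:
                break
            # Largest step keeping every linear slack positive, then backtrack.
            size = 1.0
            for (a, _), h in zip(cons, hs):
                rate = sum(ai * dx for ai, dx in zip(a, step))
                if rate < 0:
                    size = min(size, 0.99 * h / -rate)
            f0 = phi(d, t)
            while size > 1e-12 and phi(
                [x + size * dx for x, dx in zip(d, step)], t
            ) > f0 - 0.25 * size * decrement:
                size *= 0.5
            d = [x + size * dx for x, dx in zip(d, step)]
        t *= 10.0
    return d


dist = rankings_to_distribution([[0, 1, 2], [0, 2, 1], [1, 0, 2]], num_labels=3)
print([round(p, 3) for p in dist])
```

For this toy objective, every ordering constraint is active at the optimum. The exact answer for labels 0, 1 and 2 is therefore 11/27, 9/27 and 7/27, so the printed values should be close to 0.407, 0.333 and 0.259, which makes a convenient check on the solver. The entropy objective and the 1/K² margin scaling are placeholders for the formulation the source elides.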
