Variational mechanism-based indoor scene three-dimensional reconstruction method

A 3D reconstruction technique for indoor environments, applicable to the reconstruction of large-scale indoor scenes. It aims to reduce the amount of data processed, improve reconstruction accuracy, and lower computational complexity.

Embodiment Construction

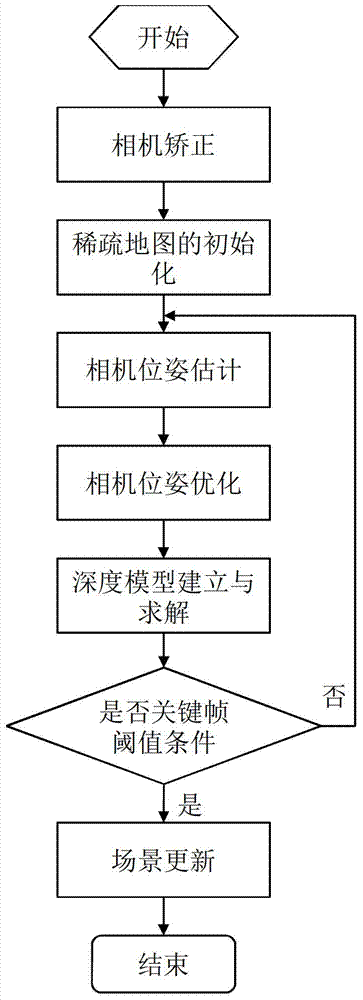

[0104] figure 1 It is a flow chart of an indoor 3D scene reconstruction method based on a variational model, including the following steps:

[0105] Step 1, obtain the calibration parameters of the camera, and establish a distortion correction model.

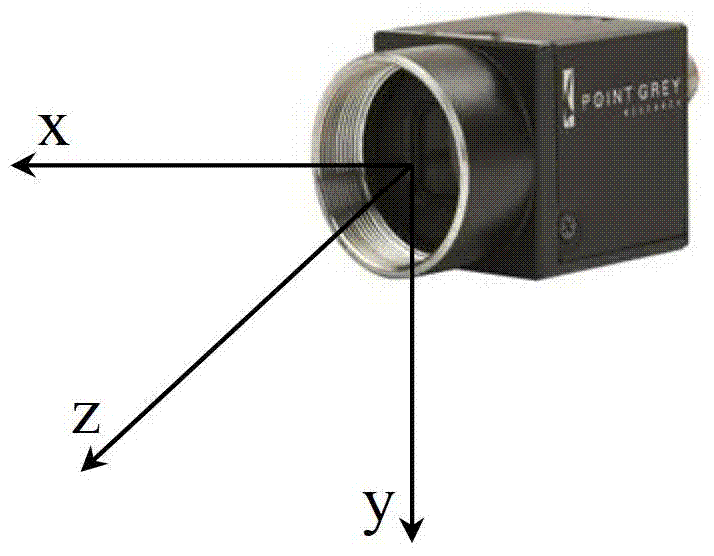

[0106] Step 2, establish camera pose description and camera projection model.

[0107] Step 3, use the SFM-based monocular SLAM algorithm to realize camera pose estimation.

[0108] Step 4: Establish a variational mechanism-based depth map estimation model and solve the model.

[0109] Step five, establishing a key frame selection mechanism to realize updating of the 3D scene.
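As a rough illustration of what Steps 1 and 2 involve, the sketch below transforms a world point into the camera frame and projects it to pixel coordinates with a pinhole model and a two-term polynomial radial distortion. This is a generic textbook formulation, not necessarily the model used in the patent: the function names, the intrinsics `fx`, `fy`, `cx`, `cy`, and the coefficients `k1`, `k2` are all assumptions introduced for illustration.

```python
def transform_point(R, t, point_world):
    """Rigid transform p_cam = R * p_world + t.

    R is a 3x3 rotation given as nested lists, t a 3-vector.
    (Hypothetical helper; the patent's pose parameterization is not shown here.)
    """
    return tuple(
        sum(R[i][j] * point_world[j] for j in range(3)) + t[i]
        for i in range(3)
    )


def project_point(point_cam, fx, fy, cx, cy, k1=0.0, k2=0.0):
    """Project a camera-frame 3D point to distorted pixel coordinates.

    Assumes a pinhole camera with polynomial radial distortion
    (coefficients k1, k2); this distortion model is an assumption.
    """
    x, y, z = point_cam
    if z <= 0:
        raise ValueError("point must lie in front of the camera (z > 0)")
    # Ideal pinhole projection onto the normalized image plane.
    xn, yn = x / z, y / z
    # Radial distortion scales the normalized coordinates by a polynomial in r^2.
    r2 = xn * xn + yn * yn
    scale = 1.0 + k1 * r2 + k2 * r2 * r2
    xd, yd = xn * scale, yn * scale
    # Intrinsics map normalized coordinates to pixels.
    return fx * xd + cx, fy * yd + cy


# Usage: identity rotation, camera shifted 1 unit along the optical axis.
R_identity = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
p_cam = transform_point(R_identity, (0.0, 0.0, 1.0), (1.0, 0.0, 1.0))
u, v = project_point(p_cam, fx=500.0, fy=500.0, cx=320.0, cy=240.0, k1=0.1)
```

Distortion correction is the inverse of the last mapping: given calibrated coefficients, observed pixels are mapped back to their undistorted positions before pose and depth estimation.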

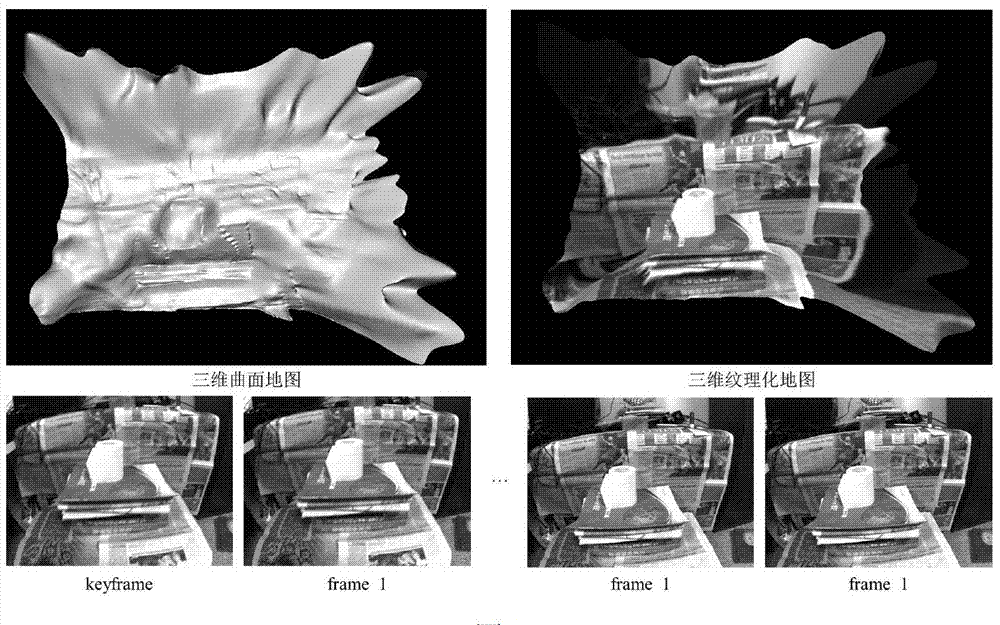

[0110] An application example of the present invention is given below.

[0111] The RGB camera used in this example is a Point Grey Flea2 with an image resolution of 640×480, a maximum frame rate of 30 fps, a horizontal field of view of 65°, and a focal length of about 3.5 mm. The PC is equipped with a GTS450 GPU and an i5 quad-core CPU.
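The stated resolution and field of view are enough to estimate the focal length in pixel units, which is the form a projection model typically needs. The sketch below assumes an ideal pinhole camera with no lens distortion and the principal point at the image center; `focal_length_pixels` is a hypothetical helper, and a calibrated value would differ somewhat.

```python
import math


def focal_length_pixels(image_width_px, horizontal_fov_deg):
    """Focal length in pixels from image width and horizontal field of view.

    Uses the pinhole relation tan(FOV / 2) = (W / 2) / f, ignoring distortion.
    """
    half_fov = math.radians(horizontal_fov_deg) / 2.0
    return (image_width_px / 2.0) / math.tan(half_fov)


# Values from the example setup: 640-pixel width, 65 degree horizontal FOV.
fx_estimate = focal_length_pixels(640, 65.0)
print(f"estimated focal length: {fx_estimate:.1f} px")
```

For this camera the estimate comes out at roughly 500 pixels, which is a reasonable starting value before calibration refines it.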

[0112] Dur...
