Unified feature space image super-resolution reconstruction method based on joint sparse constraint

A technique combining a joint sparse constraint with a unified feature space, applied in image enhancement, image data processing, and graphics and image conversion. It addresses problems such as poor noise robustness, artificial traces, and distortion in the high-resolution result.



Image

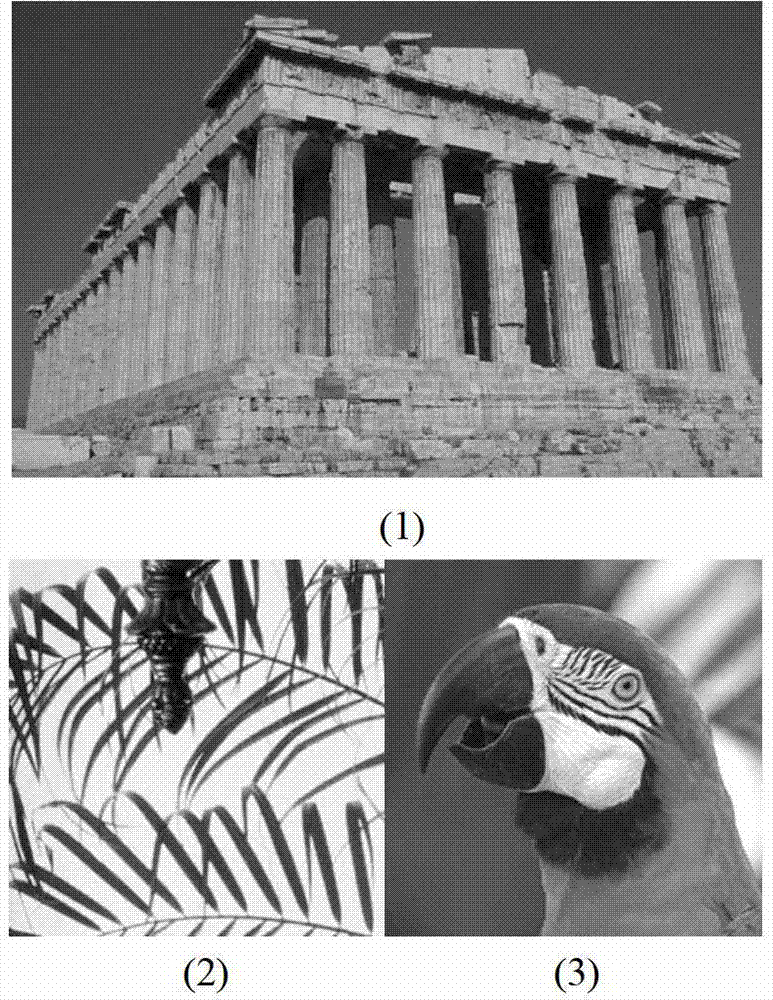

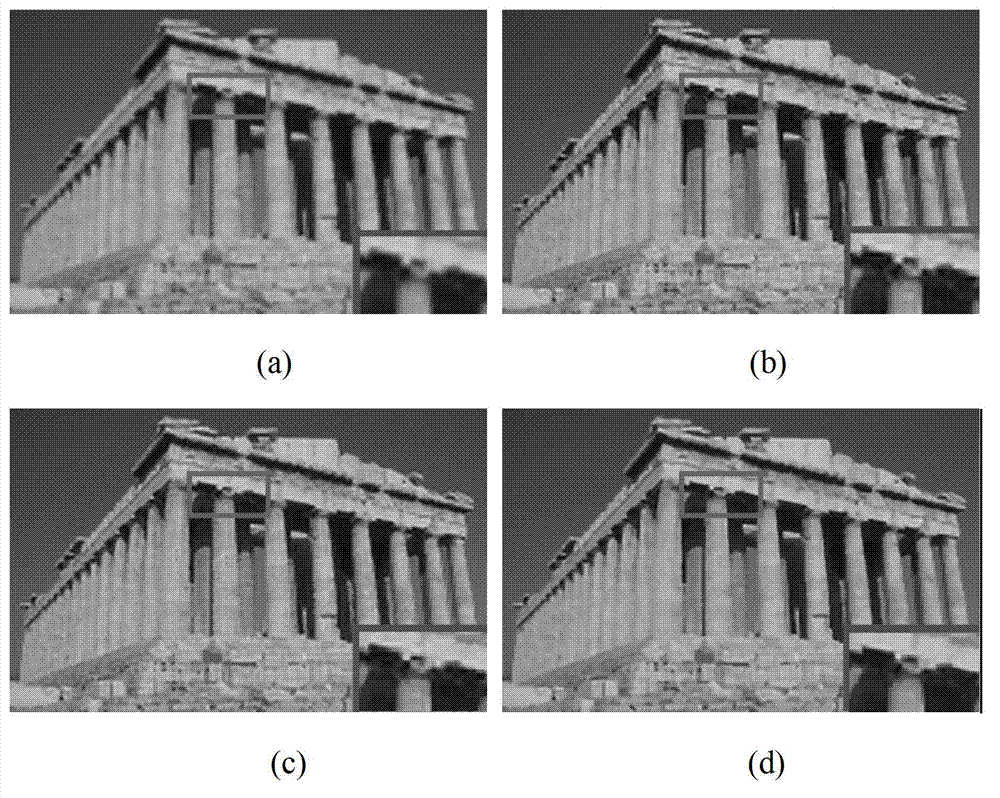

Examples

Embodiment Construction

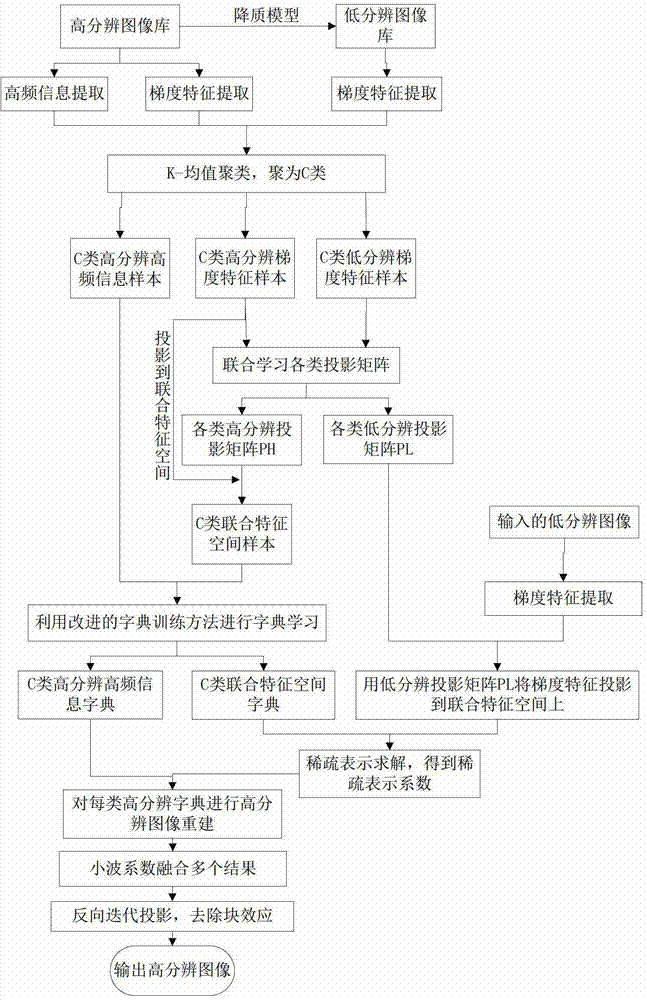

[0032] Referring to Figure 1, the specific implementation steps of the present invention are as follows:

[0033] Step 1: Construct a training sample set.

[0034] To address the mismatch between the high-resolution image and the low-resolution image, an additional training sample is introduced to replace the original low-resolution image feature samples when training the dictionary. The specific steps are as follows:

[0035] 1a) Take z common natural images from the natural image library, where 60≤z≤70; in this experiment, z=65. Using the degradation model X=SGY, degrade the z high-resolution images to obtain the corresponding low-resolution image library; then use bicubic interpolation to enlarge each image in the low-resolution image library by a factor of 2 to obtain a low-resolution interpolated image W. Here, X represents the low-resolution image obtained after degradation, Y represents the original high-resolution image, G represents the Gaussian blur matrix, and S represents ...
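Step 1a) can be illustrated with a minimal sketch, under several assumptions that do not come from the text above. The definition of S is cut off in the source; here it is assumed to be decimation by a factor of 2, chosen only because the subsequent bicubic enlargement is by 2. The Gaussian width `sigma`, the kernel radius, the Keys cubic kernel with a = -0.5, the edge handling, and all function names (`degrade`, `bicubic_upscale`, and so on) are hypothetical choices for illustration. Images are treated as single-channel arrays.

```python
import numpy as np

def gaussian_kernel_1d(sigma, radius):
    """Normalized 1-D Gaussian kernel (sigma and radius are assumed values)."""
    x = np.arange(-radius, radius + 1, dtype=np.float64)
    k = np.exp(-(x ** 2) / (2.0 * sigma ** 2))
    return k / k.sum()

def blur(img, sigma=1.0, radius=2):
    """Apply G as a separable Gaussian blur with replicated edges."""
    k = gaussian_kernel_1d(sigma, radius)
    padded = np.pad(img, radius, mode="edge")
    rows = np.apply_along_axis(lambda r: np.convolve(r, k, mode="valid"), 1, padded)
    return np.apply_along_axis(lambda c: np.convolve(c, k, mode="valid"), 0, rows)

def degrade(hr, factor=2, sigma=1.0):
    """X = S G Y: blur with G, then apply S.
    ASSUMPTION: S is plain decimation by `factor`; the source text is
    truncated before it defines S."""
    return blur(hr, sigma)[::factor, ::factor]

def cubic_weight(t, a=-0.5):
    """Keys cubic convolution kernel (a = -0.5 is an assumed, common choice)."""
    t = np.abs(t)
    near = (a + 2) * t ** 3 - (a + 3) * t ** 2 + 1
    far = a * t ** 3 - 5 * a * t ** 2 + 8 * a * t - 4 * a
    return np.where(t <= 1, near, np.where(t < 2, far, 0.0))

def bicubic_matrix(n, factor):
    """Interpolation matrix mapping n samples to n * factor samples."""
    out_n = n * factor
    src = (np.arange(out_n) + 0.5) / factor - 0.5   # centre-aligned source coords
    base = np.floor(src).astype(int)
    m = np.zeros((out_n, n))
    for offset in range(-1, 3):                     # four neighbouring taps
        idx = base + offset
        w = cubic_weight(src - idx)
        # Out-of-range taps are folded onto the nearest edge sample.
        np.add.at(m, (np.arange(out_n), np.clip(idx, 0, n - 1)), w)
    return m

def bicubic_upscale(img, factor=2):
    """Enlarge a 2-D image by `factor` with separable bicubic interpolation."""
    m_rows = bicubic_matrix(img.shape[0], factor)
    m_cols = bicubic_matrix(img.shape[1], factor)
    return m_rows @ img @ m_cols.T

# Usage: Y (high-resolution) -> X (low-resolution) -> W (interpolated).
rng = np.random.default_rng(0)
Y = rng.random((32, 32))
X = degrade(Y)
W = bicubic_upscale(X)
```

In this sketch W has the same size as Y, so patches taken at the same coordinates in W and Y can be paired as training samples. Whether the patented method pairs them this way is not stated in the visible text.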
