Network data processing method and system based on a graphics processing unit (GPU) and a buffer

A network data processing and network data packet technology, applied to digital transmission systems, transmission systems, data switching networks and similar fields. It addresses problems such as large data volumes, time-consuming copying and performance bottlenecks.

Embodiment Construction

[0067] To help those skilled in the art better understand the technical solutions in the embodiments of the present invention, and to make the purposes, features and advantages of the present invention clearer, the technical solutions are described in further detail below with reference to the accompanying drawings.

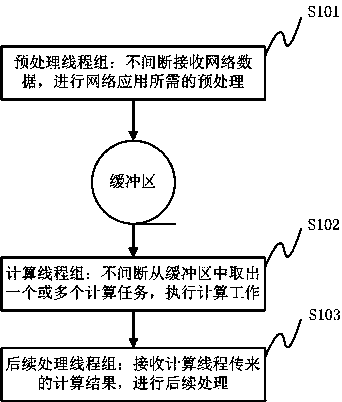

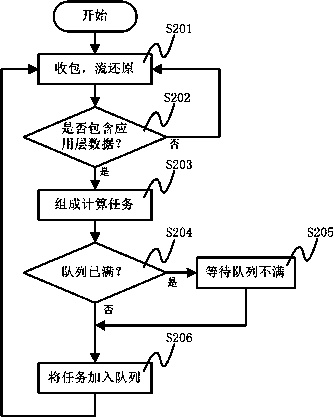

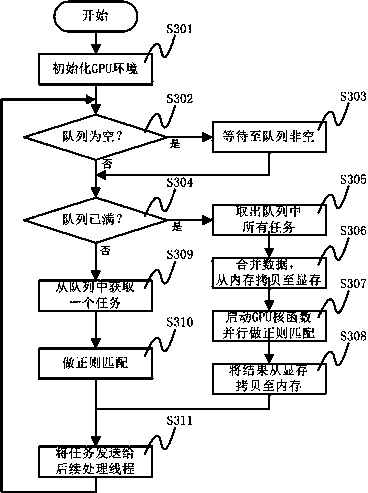

[0068] The present invention provides a network data processing method and system based on a GPU and a buffer. With multiple computing threads working at the same time, data copying and GPU kernel function execution can be pipelined, so that the computing capability of the GPU is fully used and network data is computed and processed at high speed. The invention also has the advantages of dynamically adapting to network load and of lower processing delay. The invention is independent of the specific packet receiving, preprocessing and calculation methods, and i...
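The pipelining idea in paragraph [0068] can be illustrated with a small host-side sketch. It is not the patented method: it only assumes a pool of reusable buffers, one receiving thread and several computing threads, and it simulates the host-to-device copy and the GPU kernel with plain Python functions. All names and constants (`BUFFER_CAPACITY`, `NUM_BUFFERS`, `NUM_WORKERS`, `fake_kernel`, `run_pipeline`) are hypothetical and chosen for illustration.

```python
import queue
import threading

BUFFER_CAPACITY = 4   # packets per buffer (hypothetical value)
NUM_BUFFERS = 3       # size of the buffer pool (hypothetical value)
NUM_WORKERS = 2       # number of computing threads (hypothetical value)


def fake_kernel(batch):
    """Stand-in for a GPU kernel: returns the length of each packet."""
    return [len(pkt) for pkt in batch]


def receiver(packets, free_q, full_q):
    """Fill buffers taken from the free pool and hand full ones to workers."""
    buf = free_q.get()
    for seq, pkt in enumerate(packets):
        buf.append((seq, pkt))
        if len(buf) == BUFFER_CAPACITY:
            full_q.put(buf)
            buf = free_q.get()      # blocks until a worker frees a buffer
    if buf:
        full_q.put(buf)             # flush the partially filled last buffer
    for _ in range(NUM_WORKERS):
        full_q.put(None)            # one stop marker per computing thread


def worker(free_q, full_q, results, lock):
    """Copy a full buffer, release it for refilling, then run the kernel."""
    while True:
        buf = full_q.get()
        if buf is None:
            return
        seqs = [seq for seq, _ in buf]
        device_copy = [pkt for _, pkt in buf]   # simulated host-to-device copy
        buf.clear()
        free_q.put(buf)             # buffer is refilled while the kernel runs
        out = fake_kernel(device_copy)
        with lock:
            results.update(zip(seqs, out))


def run_pipeline(packets):
    """Process all packets and return one result per packet, in input order."""
    free_q, full_q = queue.Queue(), queue.Queue()
    for _ in range(NUM_BUFFERS):
        free_q.put([])
    results, lock = {}, threading.Lock()
    threads = [
        threading.Thread(target=worker, args=(free_q, full_q, results, lock))
        for _ in range(NUM_WORKERS)
    ]
    for t in threads:
        t.start()
    receiver(packets, free_q, full_q)
    for t in threads:
        t.join()
    return [results[i] for i in range(len(packets))]
```

Because each computing thread returns its buffer to the pool right after the simulated copy, the receiver can refill that buffer while the kernel stand-in is still running on another thread. In a real GPU implementation the copy and kernel launch would be issued through the GPU runtime, which this sketch does not attempt to model.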
