Motion control and animation generation method based on acceleration transducer

An acceleration-sensor-based motion control technology, applied in the field of computer virtual reality, addresses the problems of poor recognition rate and of ignoring the inherent physical meaning of the sensor signals, and achieves the effects of enhancing user experience and improving the motion recognition rate.


Embodiment Construction

[0042] The present invention is described in further detail below with reference to the accompanying drawings and embodiments:

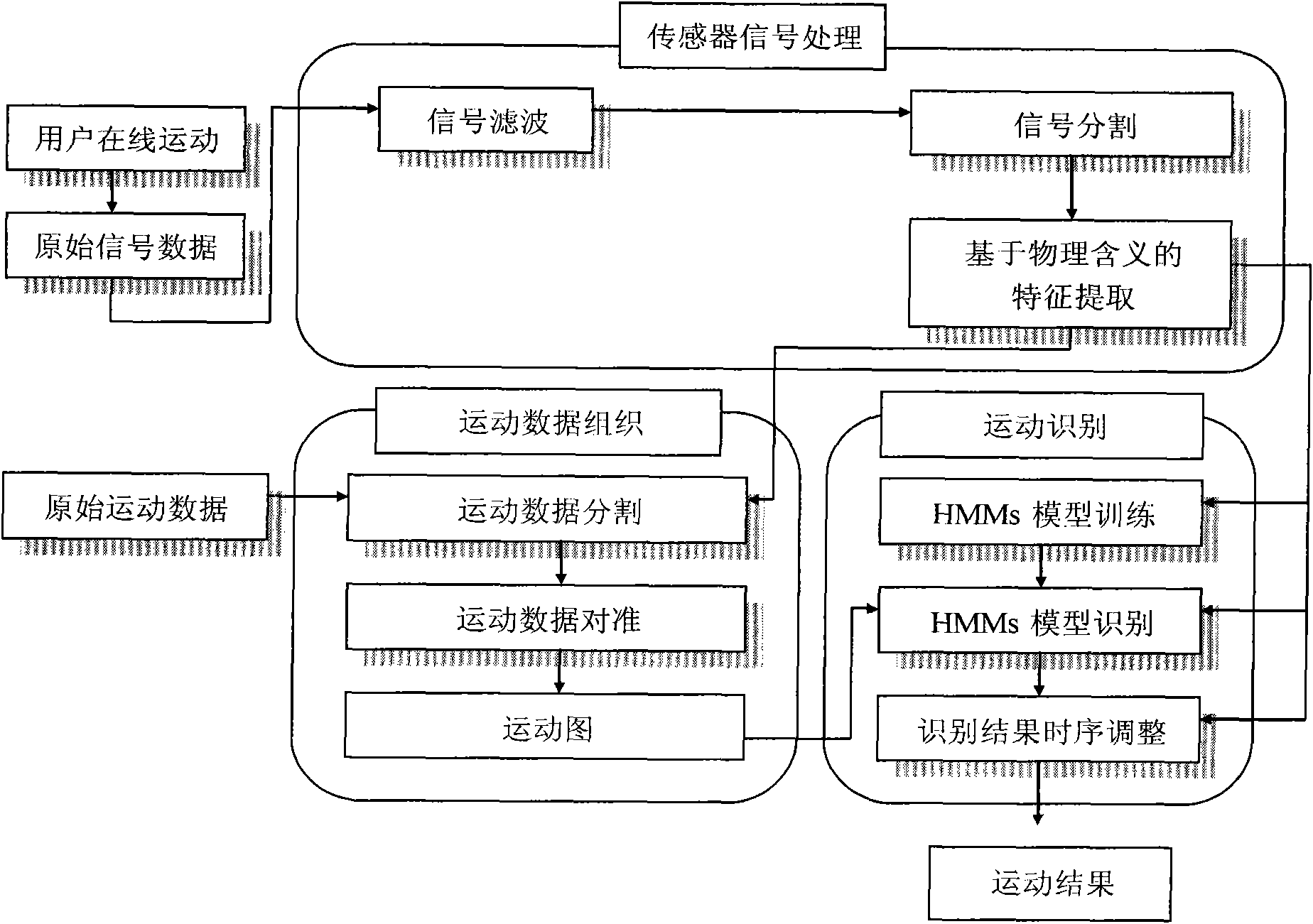

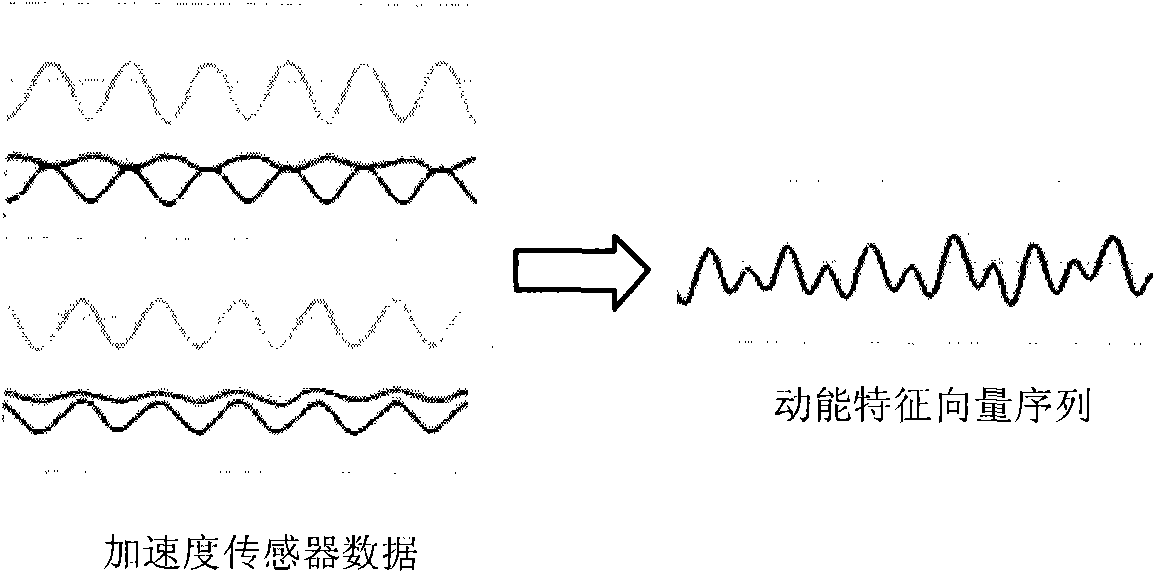

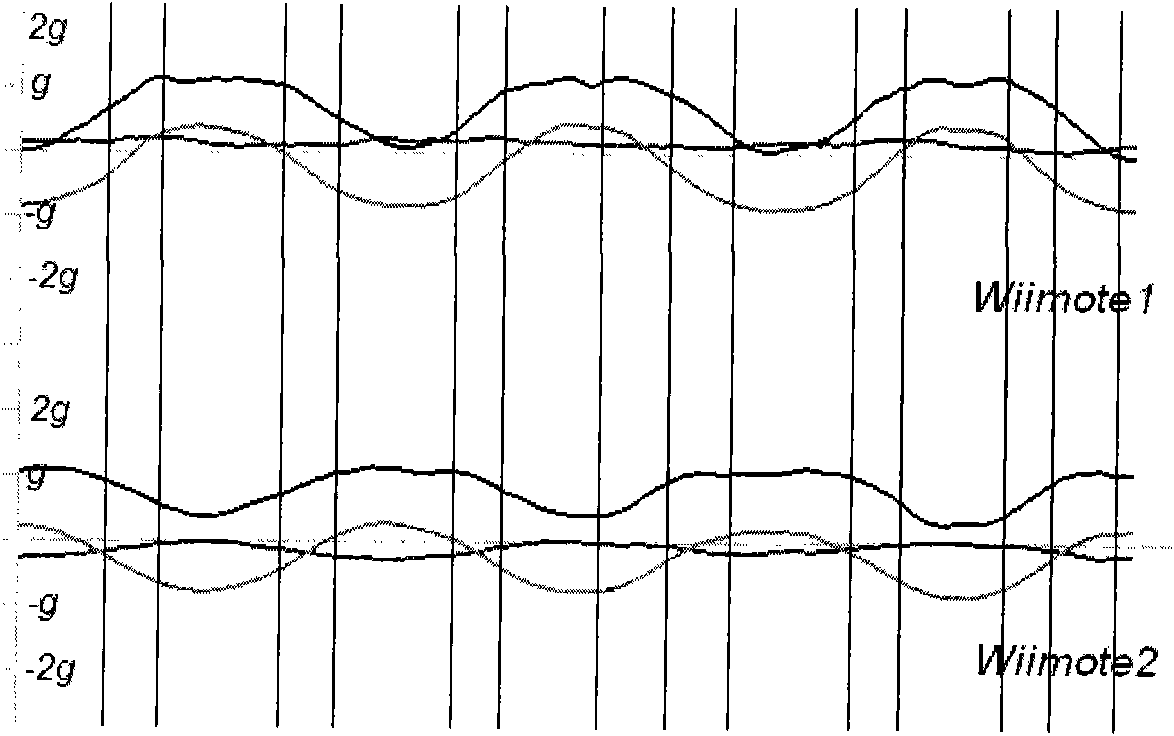

[0043] As shown in Figure 1, the implementation process of the present invention includes four main steps: analysis of key joint points, feature extraction based on physical meaning, matching between low-dimensional acceleration sensor signals and high-dimensional bone motion data, and dynamic adjustment of the timing attributes of the resulting motion data.

[0044] Step 1, that is, the analysis of key joint points, is mainly divided into two stages:

[0045] The first stage: principal component analysis on the covariance matrix of raw motion data

[0046] PCA is a common method of dimension reduction analysis, but the disadvantage of using PCA to perform dimension reduction analysis on the number of joints is that it disrupts the original joint semantics, making it difficult to select key joint points. To this end, we first use PCA to perform dimension reductio...
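As an illustration of the first stage only, the following is a minimal sketch of PCA on the covariance matrix of motion data. It is not the patent's implementation: the function name `pca_on_covariance`, the frames-by-channels data layout, and the 95% retained-variance threshold are all assumptions introduced for this example, and the second stage is not covered because the source text is truncated.

```python
import numpy as np


def pca_on_covariance(motion, variance_kept=0.95):
    """PCA via eigen-decomposition of the covariance matrix.

    motion: array of shape (n_frames, n_channels); the layout is an
            assumption for this sketch (one row per frame, one column
            per joint channel).
    variance_kept: hypothetical threshold for how much variance the
            retained components must explain.
    Returns (components, explained_ratio), where components has shape
    (n_channels, k) and its columns are orthonormal.
    """
    motion = np.asarray(motion, dtype=float)
    centered = motion - motion.mean(axis=0)

    # Covariance between channels (columns are the variables).
    cov = np.cov(centered, rowvar=False)

    # eigh is suited to symmetric matrices; eigenvalues come back ascending.
    eigvals, eigvecs = np.linalg.eigh(cov)
    order = np.argsort(eigvals)[::-1]
    eigvals = np.clip(eigvals[order], 0.0, None)  # guard tiny negative values
    eigvecs = eigvecs[:, order]

    ratio = eigvals / eigvals.sum()
    # Smallest k whose cumulative explained variance reaches the threshold.
    k = int(np.searchsorted(np.cumsum(ratio), variance_kept)) + 1
    k = min(k, len(ratio))
    return eigvecs[:, :k], ratio[:k]


def make_synthetic_motion(n_frames=200, seed=0):
    """Synthetic stand-in for motion data: 6 channels driven by 2 latent signals."""
    rng = np.random.default_rng(seed)
    latent = rng.standard_normal((n_frames, 2))
    mixing = np.array([[1.0, 1.0, 1.0, 0.0, 0.0, 0.0],
                       [0.0, 0.0, 0.0, 1.0, 1.0, 1.0]])
    noise = 0.01 * rng.standard_normal((n_frames, 6))
    return latent @ mixing + noise


if __name__ == "__main__":
    components, explained = pca_on_covariance(make_synthetic_motion())
    print("retained components:", components.shape[1])
    print("explained variance ratios:", explained)
```

Each retained component is a linear combination of all input channels, which illustrates the drawback noted above: the principal axes no longer correspond to individual joints, so key joints cannot be read off the components directly.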

