Method and system of caching management in cluster file system

A technology for cache management in a cluster file system, applied in the fields of electrical digital data processing, special data processing applications, and memory address allocation/relocation. It addresses problems such as a low hit rate in the lower-level cache, low cache space utilization, a low storage server cache hit rate, and duplicate caching of sequentially prefetched data. Its stated effects include increasing the ratio and granularity, reducing the ratio, and avoiding data overlap.


Embodiment Construction

[0045] The present invention will be described in further detail below in conjunction with the accompanying drawings.

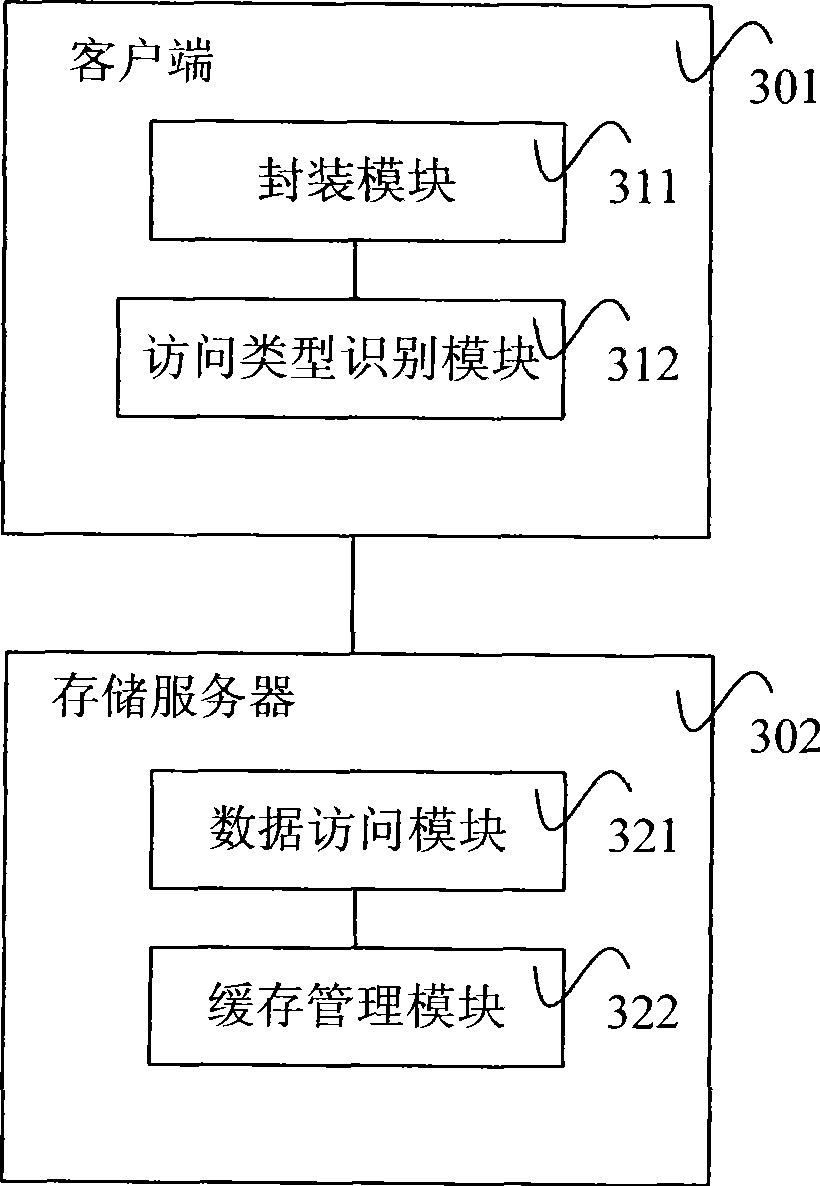

[0046] The system structure diagram of the present invention is shown in Figure 3.

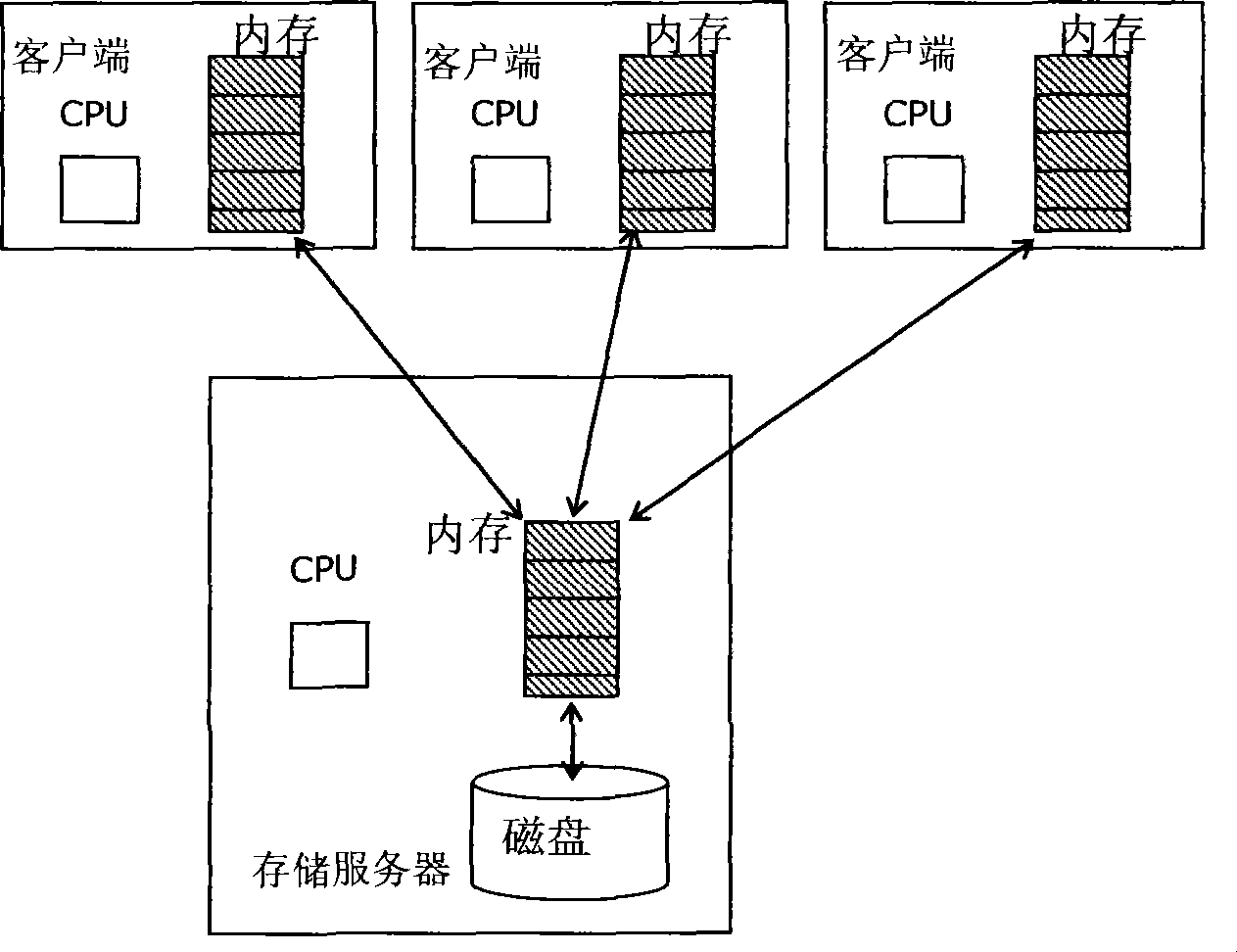

[0047] The system of the present invention includes a client 301 and a storage server 302 having a disk.

[0048] The client 301 includes an encapsulation module 311 and an access type identification module 312.

[0049] The encapsulation module 311 is configured to receive a file access request from the application layer, encapsulate it into a read request message together with the access mode information identified by the access type identification module 312, and send the read request message to the storage server 302.
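To make the encapsulation step concrete, the following is a minimal sketch of a read request message that carries an access-mode hint alongside the read itself. The field names (`file_id`, `offset`, `length`, `access_mode`), the `AccessMode` codes, and the fixed binary layout are all hypothetical assumptions for illustration; the patent text here does not specify the message format.

```python
import struct
from dataclasses import dataclass
from enum import IntEnum


class AccessMode(IntEnum):
    # Hypothetical access-mode codes; the actual encoding is not given in the source.
    UNKNOWN = 0
    SEQUENTIAL = 1
    RANDOM = 2


@dataclass
class ReadRequest:
    file_id: int
    offset: int
    length: int
    access_mode: AccessMode


# Hypothetical wire layout in network byte order:
# 64-bit file id, 64-bit offset, 32-bit length, 8-bit access mode (21 bytes total).
_LAYOUT = struct.Struct("!QQIB")


def encapsulate(file_id: int, offset: int, length: int, mode: AccessMode) -> bytes:
    """Client side: pack an application-layer read plus its access mode into one message."""
    return _LAYOUT.pack(file_id, offset, length, int(mode))


def decapsulate(payload: bytes) -> ReadRequest:
    """Storage-server side: recover the read request and the client's access-mode hint."""
    file_id, offset, length, mode = _LAYOUT.unpack(payload)
    return ReadRequest(file_id, offset, length, AccessMode(mode))


if __name__ == "__main__":
    msg = encapsulate(7, 4096, 65536, AccessMode.SEQUENTIAL)
    print(len(msg), decapsulate(msg))
```

Carrying the mode inside the same message, rather than in a separate control channel, lets the storage server 302 see the hint at the moment it decides how to serve and cache that particular read.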

[0050] The access type identification module 312 is configured to identify the access mode information corresponding to the read request message.
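One common way such a module could classify accesses is to track, per file, where the previous read ended and treat contiguous reads as sequential. The sketch below assumes that heuristic; it is not the identification rule stated in the patent, and the class name `AccessTypeIdentifier` and the `seq_threshold` parameter are hypothetical.

```python
from enum import IntEnum


class AccessMode(IntEnum):
    # Hypothetical access-mode codes; the actual encoding is not given in the source.
    UNKNOWN = 0
    SEQUENTIAL = 1
    RANDOM = 2


class AccessTypeIdentifier:
    """Hypothetical per-file sequential/random detector based on read contiguity."""

    def __init__(self, seq_threshold: int = 2):
        # Consecutive contiguous reads required before a stream is labeled
        # sequential; the default of 2 is an arbitrary illustrative choice.
        self.seq_threshold = seq_threshold
        self._next_offset = {}  # file_id -> offset expected if the stream stays contiguous
        self._run = {}          # file_id -> current count of contiguous reads

    def identify(self, file_id: int, offset: int, length: int) -> AccessMode:
        expected = self._next_offset.get(file_id)
        if expected is None:
            # First read of this file: no history yet.
            run, mode = 0, AccessMode.UNKNOWN
        elif offset == expected:
            # Read starts exactly where the previous one ended.
            run = self._run[file_id] + 1
            mode = AccessMode.SEQUENTIAL if run >= self.seq_threshold else AccessMode.UNKNOWN
        else:
            # Jump to a non-contiguous offset resets the run.
            run, mode = 0, AccessMode.RANDOM
        self._run[file_id] = run
        self._next_offset[file_id] = offset + length
        return mode
```

The mode returned for each read is the kind of information the encapsulation module 311 would place into the read request message described in [0049]. Requiring a short run of contiguous reads before reporting `SEQUENTIAL` avoids labeling a single lucky adjacent read as a sequential stream.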

[0051] The access mode informa...
