A method and system for data caching and synchronization in a cluster environment

A data caching and cache synchronization technology, applied in the field of database applications, that addresses problems such as wasted computer system resources, redundancy, and low performance. It saves network and computer resources, reduces the frequency of message sending, and improves performance.


Embodiment Construction

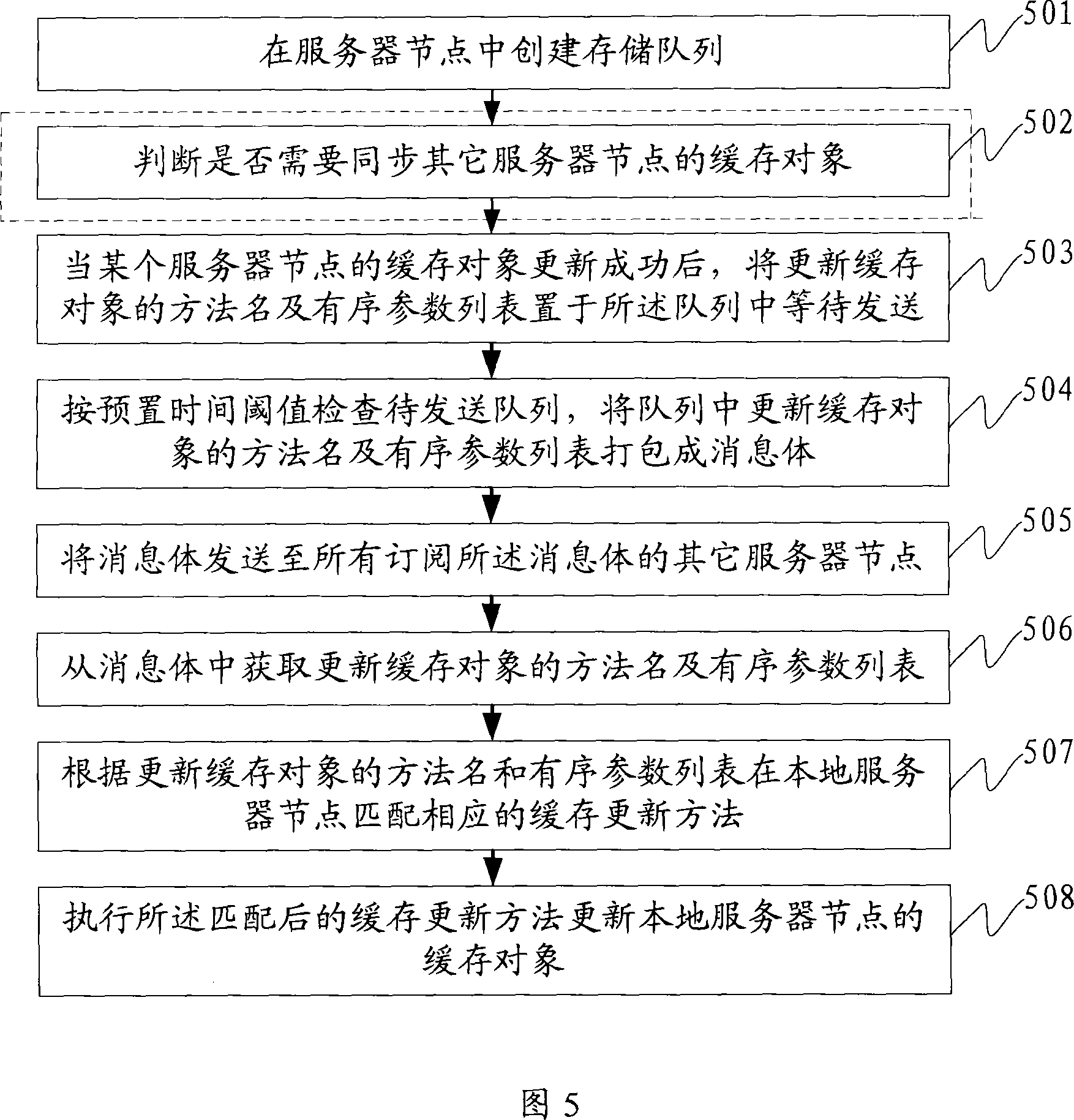

[0085] In a cluster environment, the web application code deployed on each server node is essentially the same, which ensures that the interface for updating the cache, and the code implementing that interface, are identical on every server node. For example, a cache update method that exists on one server node must also exist on the other server nodes.

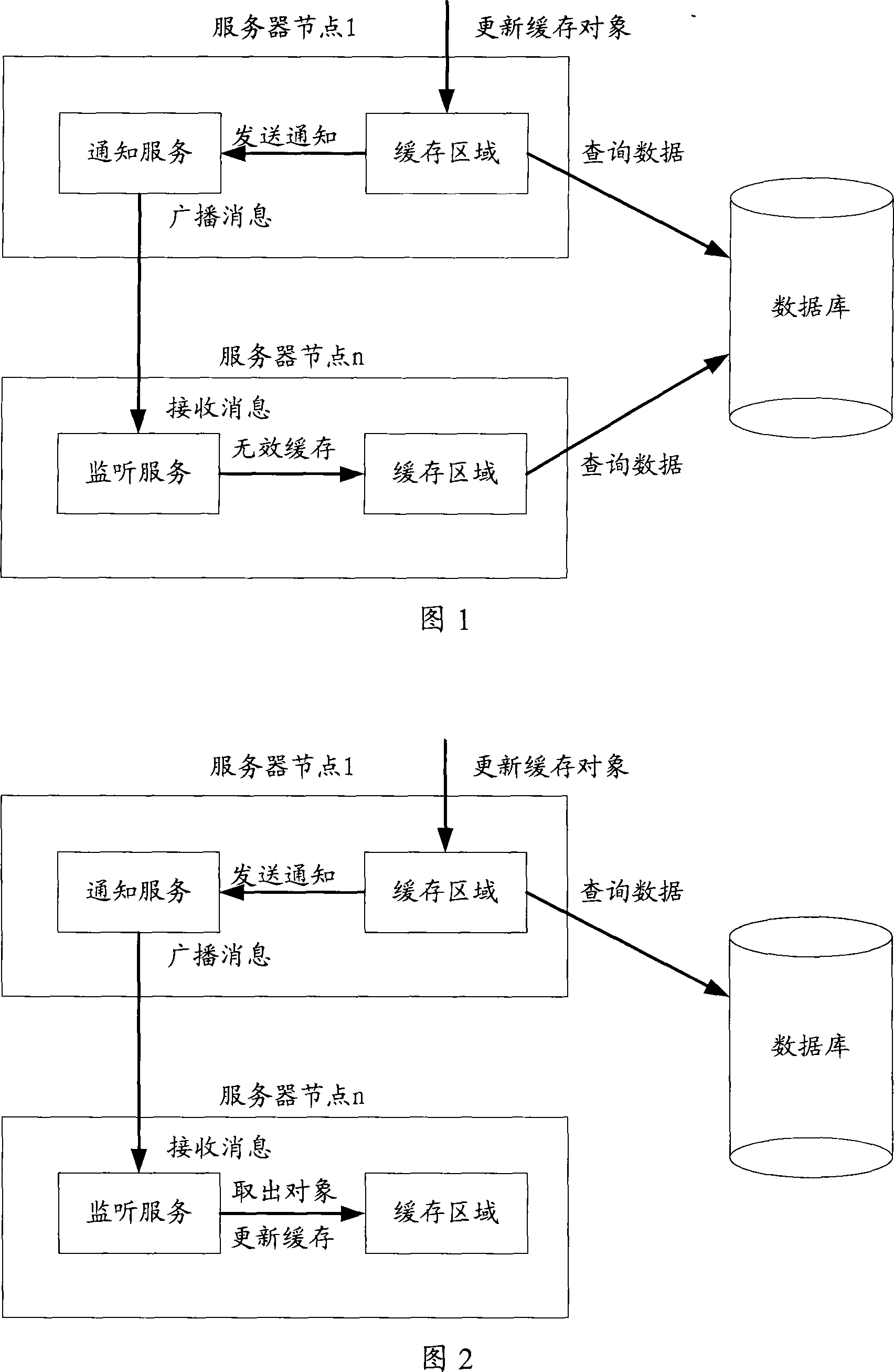

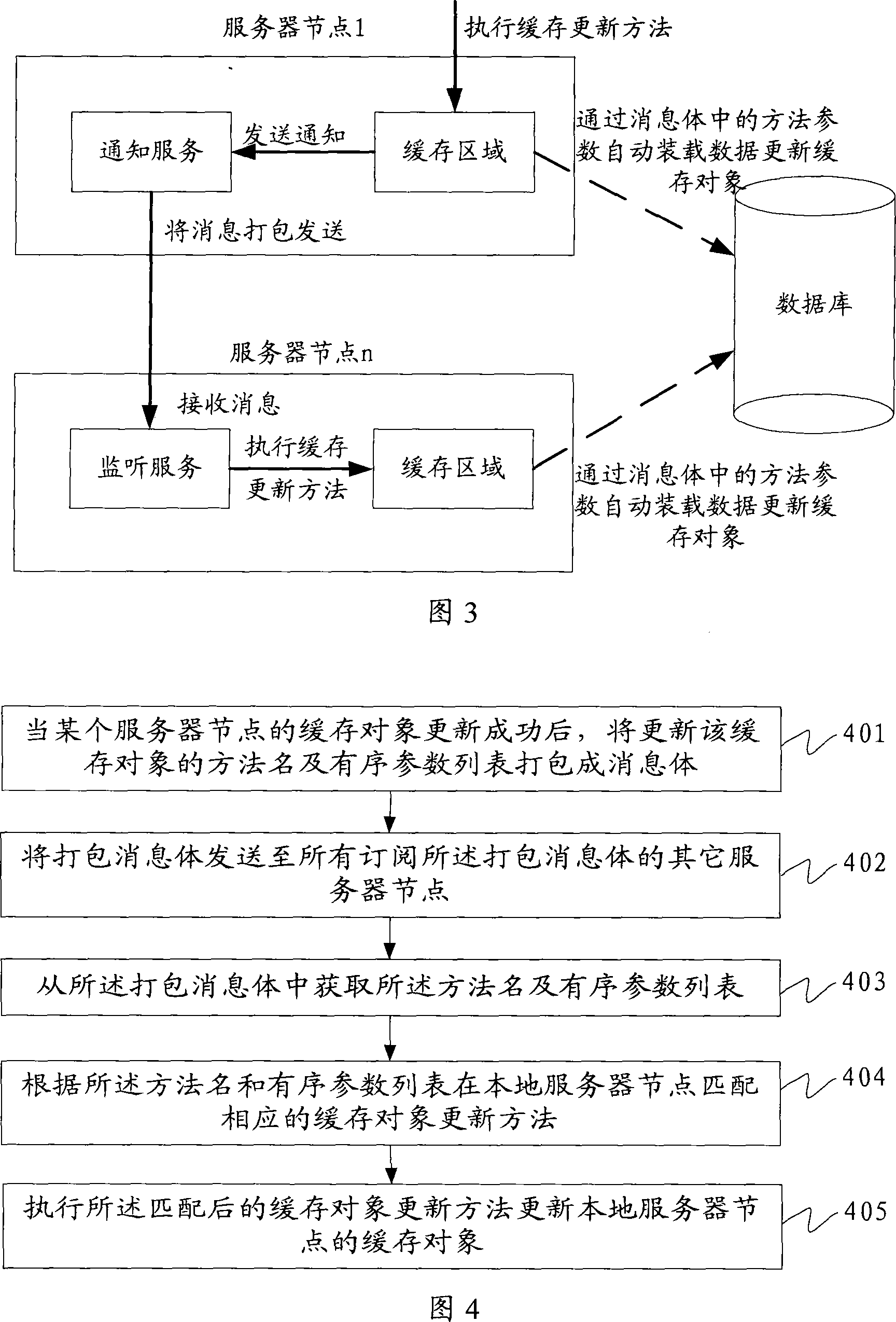

[0086] Based on the above, and referring to FIG. 3, the core idea of the present invention is as follows. When a cache object on a server node changes, the node uses the cache synchronization strategy to decide whether the cache objects on other server nodes must be synchronized. If so, the node packages the name of the method that updates the cache object together with its ordered parameter list, attaches them to a message body, and sends the message body to all other server nodes that subscribe to it. After receiving the message body, the other server nodes obtain the method name and ordered parameter list for updating...
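The flow described in [0086] can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not the patented implementation: the names `CacheNode`, `MessageBus`, `update`, `on_message`, and `UPDATE_METHODS` are hypothetical, the message body is assumed to be JSON, the synchronization strategy is reduced to a boolean `sync` flag, and an in-process list of subscribers stands in for a real messaging system.

```python
import json
from typing import Any, Dict, List

# Hypothetical allowlist of cache-update methods that may be replayed remotely.
UPDATE_METHODS = {"put", "remove"}


class MessageBus:
    """In-process stand-in for a publish/subscribe messaging system (assumption)."""

    def __init__(self) -> None:
        self.subscribers: List["CacheNode"] = []

    def subscribe(self, node: "CacheNode") -> None:
        self.subscribers.append(node)

    def publish(self, sender: "CacheNode", body: str) -> None:
        # Deliver the message body to every subscriber except the sender.
        for node in self.subscribers:
            if node is not sender:
                node.on_message(body)


class CacheNode:
    """One server node with a local cache; every node runs this same code."""

    def __init__(self, name: str, bus: MessageBus) -> None:
        self.name = name
        self.cache: Dict[str, Any] = {}
        self.bus = bus
        bus.subscribe(self)

    # Cache-update methods, present on every node because the code is identical.
    def put(self, key: str, value: Any) -> None:
        self.cache[key] = value

    def remove(self, key: str) -> None:
        self.cache.pop(key, None)

    def update(self, method: str, *args: Any, sync: bool = True) -> None:
        """Apply an update locally, then broadcast it if synchronization is needed."""
        if method not in UPDATE_METHODS:
            raise ValueError(f"unknown cache update method: {method}")
        getattr(self, method)(*args)
        if sync:  # placeholder for the cache synchronization strategy
            # Package the method name and the ordered parameter list.
            body = json.dumps({"method": method, "args": list(args)})
            self.bus.publish(self, body)

    def on_message(self, body: str) -> None:
        """Replay a remote update by looking up the method by name."""
        message = json.loads(body)
        if message["method"] not in UPDATE_METHODS:
            raise ValueError(f"unknown cache update method: {message['method']}")
        getattr(self, message["method"])(*message["args"])


bus = MessageBus()
node_a = CacheNode("a", bus)
node_b = CacheNode("b", bus)
node_a.update("put", "user:1", {"name": "Ann"})  # node_b replays put("user:1", ...)
node_a.update("put", "scratch", 1, sync=False)   # stays local to node_a
```

Only the method name and its arguments travel in the message, so each receiver rebuilds the change by calling its own copy of the update method. The allowlist is a design choice added in this sketch so that a received message cannot invoke arbitrary methods by name.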
