Accelerated gaze-supported manual cursor control

A cursor control and acceleration technology, applied in the field of ubiquitous user interfaces, addresses the difficulty of reliably referring to small, closely positioned targets and of appropriately combining multimodal inputs, both of which affect the user's experience of virtual environments, so as to improve user interaction with virtual environments.

Embodiment Construction

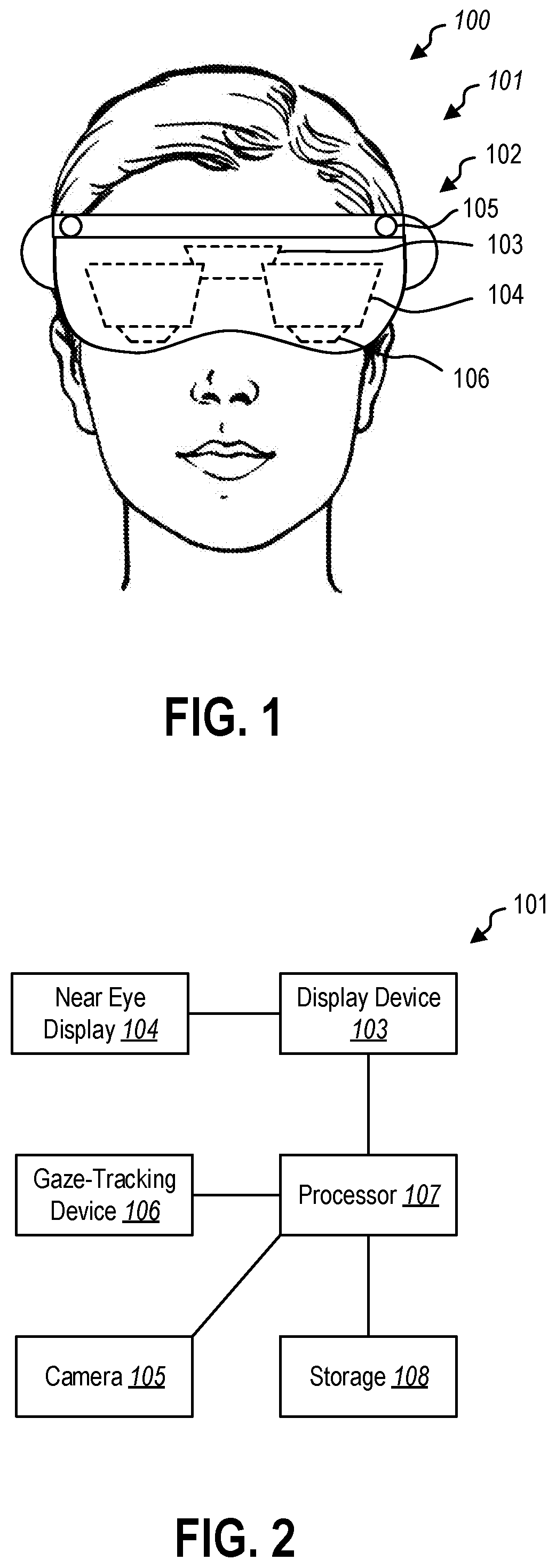

[0026] This disclosure generally relates to devices, systems, and methods for visual user interaction with virtual environments. More specifically, the present disclosure relates to improving interaction with virtual elements using gaze-informed manual cursor control. In some embodiments, visual information may be provided to a user by a near-eye display. A near-eye display may be any display that is positioned near a user's eye, either to supplement the user's view of their surroundings, as in augmented or mixed reality devices, or to replace the user's view of their surroundings, as in virtual reality devices. In some embodiments, an augmented reality or mixed reality device may be a head-mounted display (HMD) that presents visual information to a user overlaid on the user's view of their surroundings. For example, the light from the HMD may be combined with ambient or environment light to overlay visual information, such as text or images, on the user's surroundings.

[...
