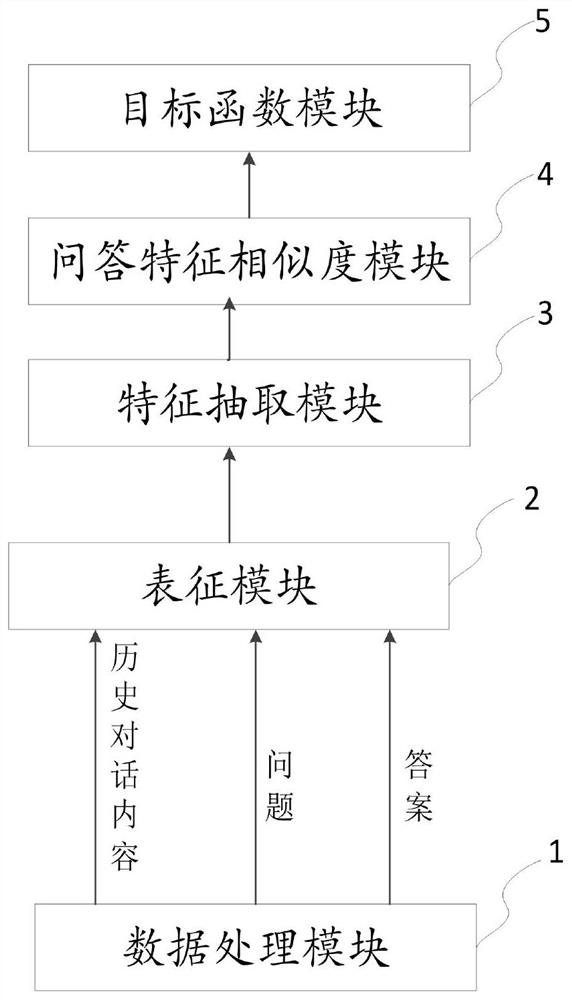

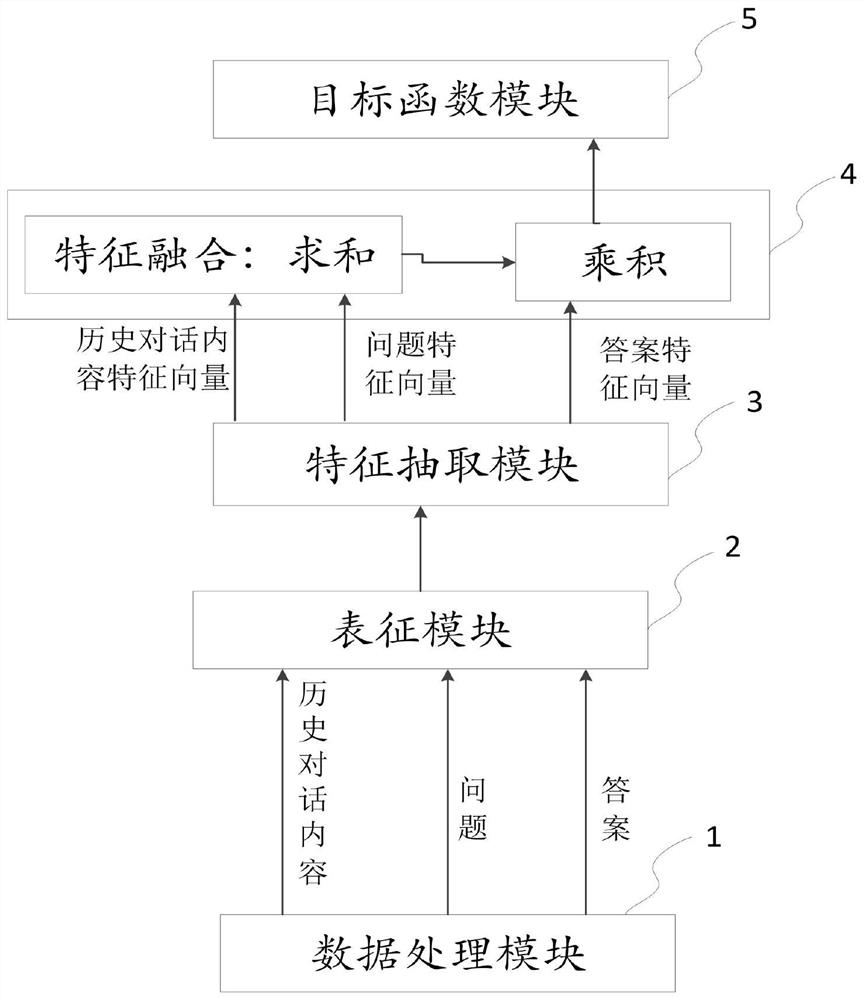

Multi-round dialogue device and method

The technology relates to feature vectors and is applied in semantic analysis, instruments, electronic digital data processing, and related fields. It addresses problems such as slow speed, scarce dialogue data, and semantic features that lack contextual dialogue information, and it achieves accurate prediction, a simple network structure, and improved natural language understanding.

Embodiment approach

[0054] A preferred embodiment is implemented as follows:

[0055] First, each sentence of the dialogue text data, question data, and answer data is segmented into words. The position or address of each word is then represented by an ID, and each ID is mapped to a random N-dimensional vector (for example, 512 dimensions). This produces the sentence vector sets for the dialogue text data, question data, and answer data, which can then be input to the feature extraction module for feature extraction.
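The steps in paragraph [0055] can be illustrated with a minimal Python sketch. It is not the patent's implementation: whitespace splitting stands in for the unspecified word segmenter, the ID is interpreted as a word's index in a vocabulary table, and the function names `build_vocab`, `random_embedding_table`, and `encode`, as well as the uniform initialization range, are hypothetical choices made for illustration.

```python
import random


def build_vocab(sentences):
    """Assign each distinct word an integer ID (assumed here to be its vocabulary index)."""
    vocab = {}
    for sentence in sentences:
        # Whitespace split is a stand-in for the segmenter, which the source does not name.
        for word in sentence.split():
            if word not in vocab:
                vocab[word] = len(vocab)
    return vocab


def random_embedding_table(vocab_size, dim=512, seed=0):
    """Create one random N-dimensional vector per ID (N = 512 as in the example)."""
    rng = random.Random(seed)
    # The range [-0.1, 0.1] is an assumption; the source only says "random vector".
    return [[rng.uniform(-0.1, 0.1) for _ in range(dim)] for _ in range(vocab_size)]


def encode(sentence, vocab, table):
    """Map a sentence to its list of word vectors, ready for a feature extractor."""
    return [table[vocab[word]] for word in sentence.split()]


if __name__ == "__main__":
    dialogue = ["how are you", "you are welcome"]
    vocab = build_vocab(dialogue)
    table = random_embedding_table(len(vocab), dim=512)
    vectors = encode("how are you", vocab, table)
    print(len(vectors), len(vectors[0]))
```

Each sentence becomes a list of 512-dimensional vectors, one per word, and the same word always maps to the same vector because the lookup goes through its ID.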

[0056] In this embodiment, the position or address of each word is represented by an ID so that the multi-round dialogue device of the present application can select words to mask for predictive training during pre-training. In a specific implementation, a probabilistic scheme can be designed in which each ID is selected with a certain probability, or at random, to be replaced by...
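The selection step described in paragraph [0056] might look like the following sketch. Because the source text is truncated before it says what a selected ID is replaced with, the sketch only chooses positions and performs no replacement. The function name `select_mask_positions` and the default probability of 0.15 are assumptions for illustration, not values from the patent.

```python
import random


def select_mask_positions(token_ids, mask_prob=0.15, seed=None):
    """Return the positions chosen for masking.

    Each position is selected independently with probability `mask_prob`.
    The 0.15 default is an assumed value; the source gives no number.
    """
    rng = random.Random(seed)
    return [i for i, _ in enumerate(token_ids) if rng.random() < mask_prob]


if __name__ == "__main__":
    ids = [4, 17, 9, 23, 8, 15, 42, 16]
    print(select_mask_positions(ids, mask_prob=0.15, seed=1))
```

Passing a fixed `seed` makes the selection reproducible, which can help when comparing pre-training runs.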
