Cross-modal semantic clustering method based on bidirectional CNN

A cross-modal clustering method applied in the field of computer vision. It addresses problems of inconsistent efficiency and accuracy, and it strengthens recognition ability, enhances correlation, and improves accuracy and efficiency.


Embodiment Construction

[0047] The purpose and effects of the present invention will become more apparent from the following detailed description, given with reference to the accompanying drawings.

[0048] Step 1: Data preprocessing and pre-training on the text samples of the training set.

[0049] An existing data set is divided into a training set and a test set according to a set ratio, and pre-training is performed on the text samples of the training set.
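The split described in this step can be sketched as follows. This is a minimal illustration only: the function name `split_dataset`, the 0.8 ratio, the fixed seed, and the toy image-caption pairs are all assumptions, since the source states only that the data set is divided "according to the set ratio".

```python
import random

def split_dataset(pairs, train_ratio=0.8, seed=0):
    """Shuffle image-text pairs and split them into training and test sets."""
    if not 0.0 < train_ratio < 1.0:
        raise ValueError("train_ratio must be strictly between 0 and 1")
    shuffled = list(pairs)                 # copy so the input is not mutated
    random.Random(seed).shuffle(shuffled)  # seeded for a reproducible split
    cut = int(len(shuffled) * train_ratio)
    return shuffled[:cut], shuffled[cut:]

# Hypothetical toy data: (image file name, caption) pairs.
pairs = [(f"img_{i}.jpg", f"caption {i}") for i in range(10)]
train_set, test_set = split_dataset(pairs, train_ratio=0.8)

# Only the training-set text would be passed on to pre-training.
train_texts = [caption for _, caption in train_set]
```

Keeping each image with its caption during the shuffle preserves the pairing that cross-modal training relies on.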

[0050] Step 2: Build a cross-modal retrieval network.

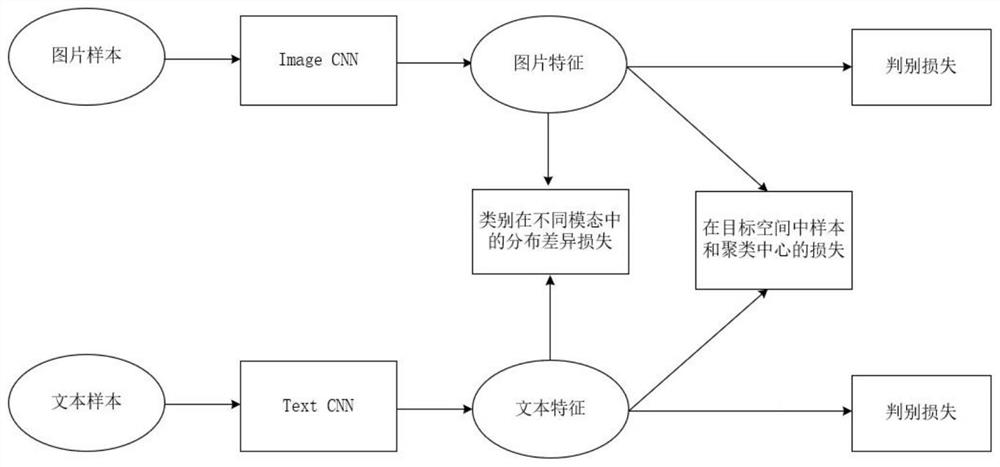

[0051] As shown in Figure 1, the cross-modal retrieval network adopts a dual-CNN structure in which a ResNet-50 network and a text CNN network (TextCNN) operate side by side. The ResNet-50 network extracts the feature vectors of the image samples. For the text samples, word vectors are first pre-trained with Word2Vec, and TextCNN then extracts the text feature vectors.
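The text branch can be illustrated with a small sketch of the usual TextCNN pattern: filters slide over the sequence of word vectors, and max-over-time pooling keeps one value per filter. This is an assumption about the general TextCNN design, not the patent's exact configuration. The helper names, the 2-dimensional word vectors, and the filter weights are hypothetical stand-ins for real Word2Vec embeddings and learned parameters.

```python
def conv1d_max_pool(embeddings, kernel):
    """Slide one filter over the word sequence, apply ReLU, keep the max.

    embeddings: list of word vectors, shape (seq_len, dim)
    kernel:     list of weight rows, shape (window, dim)
    """
    window = len(kernel)
    if len(embeddings) < window:
        raise ValueError("sequence is shorter than the filter window")
    activations = []
    for start in range(len(embeddings) - window + 1):
        total = 0.0
        for offset in range(window):
            for x, w in zip(embeddings[start + offset], kernel[offset]):
                total += x * w
        activations.append(max(0.0, total))  # ReLU
    return max(activations)                  # max-over-time pooling

def text_features(embeddings, kernels):
    """One pooled value per filter gives a fixed-length text feature vector."""
    return [conv1d_max_pool(embeddings, k) for k in kernels]

# Hypothetical 2-dimensional word vectors for a 4-word sentence.
sentence = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0], [0.0, 0.0]]
# Two hypothetical filters with window sizes 2 and 3.
kernels = [
    [[1.0, 0.0], [0.0, 1.0]],
    [[1.0, 1.0], [1.0, 1.0], [1.0, 1.0]],
]
features = text_features(sentence, kernels)
```

Because pooling reduces each filter to a single value, sentences of different lengths map to vectors of the same length, which is what lets the text features be compared with image features of fixed size.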

[0052] The main idea of ResNet-50 is to add a direct connection channel in the network, a...
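The direct connection channel is commonly called a skip or shortcut connection: the block's input is added back to the block's output. The sketch below shows only that idea. It uses small linear maps in place of the convolutional layers and batch normalization of an actual ResNet-50 block, and all names and weights are hypothetical.

```python
def relu(v):
    """Element-wise ReLU on a list of floats."""
    return [max(0.0, x) for x in v]

def linear(v, weights):
    """Multiply vector v by a square weight matrix given as a list of rows."""
    return [sum(w * x for w, x in zip(row, v)) for row in weights]

def residual_block(x, w1, w2):
    """Compute relu(F(x) + x), where F is two linear maps with a ReLU between."""
    fx = linear(relu(linear(x, w1)), w2)
    return relu([f + xi for f, xi in zip(fx, x)])  # shortcut adds x back

x = [1.0, 2.0]
zero = [[0.0, 0.0], [0.0, 0.0]]
# With all-zero weights F(x) is zero, so the input passes through unchanged.
out = residual_block(x, zero, zero)
```

The zero-weight case shows why the shortcut helps: a block can fall back to passing its input through, so adding layers does not have to degrade the signal.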
