Pedestrian re-identification method based on two-way mutual promotion disentanglement learning

A technique involving pedestrian re-identification and training samples, applied in the field of pedestrian re-identification based on two-way mutual promotion disentanglement learning. It addresses low recognition performance and the degradation of recognition performance across data sets. The method is simple and effective and achieves excellent performance.


Embodiment Construction

[0065] The technical solutions of the present invention will be clearly and completely described below in conjunction with the accompanying drawings and specific embodiments.

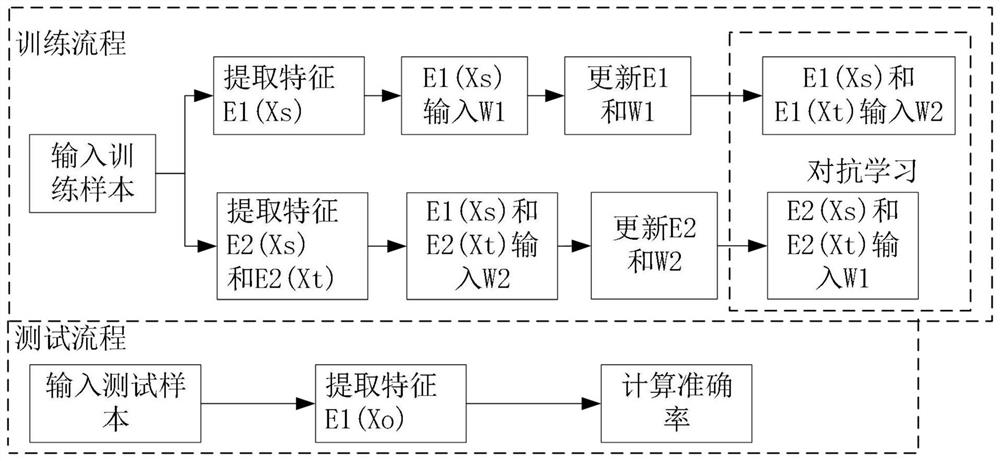

[0066] Figure 1 is a flowchart of a pedestrian re-identification method based on two-way mutual promotion disentanglement learning in an example of the present invention. As Figure 1 shows, the method comprises a training process, which yields a content encoder E₁ that extracts domain-invariant features, and a testing process, which uses E₁ to re-identify pedestrians in the test samples of the target domain. The training process includes a content encoding branch and a camera style encoding branch. The content encoding branch includes a content encoder E₁ and an identity classifier W₁; the camera style encoding branch includes a style en...
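The split between training and testing described above can be illustrated with a minimal Python sketch. It is not the patented implementation: the random weight matrices stand in for trained parameters, the names `ContentBranch` and `rank_gallery` are hypothetical, and ranking gallery images by cosine similarity is an assumption, since the excerpt does not say how matching is performed. The camera style encoding branch is omitted because its description is cut off in the text.

```python
import math
import random


def make_linear(in_dim, out_dim, seed):
    """Random (out_dim x in_dim) matrix; a placeholder for trained weights."""
    rng = random.Random(seed)
    return [[rng.uniform(-1.0, 1.0) for _ in range(in_dim)] for _ in range(out_dim)]


def apply_linear(weights, x):
    """Multiply the weight matrix by the input vector x."""
    return [sum(w * v for w, v in zip(row, x)) for row in weights]


def l2_normalize(v):
    """Scale v to unit length so dot products equal cosine similarity."""
    norm = math.sqrt(sum(c * c for c in v)) or 1.0
    return [c / norm for c in v]


class ContentBranch:
    """Hypothetical content branch: encoder E1 plus identity classifier W1."""

    def __init__(self, in_dim, feat_dim, num_ids):
        self.E1 = make_linear(in_dim, feat_dim, seed=0)   # content encoder
        self.W1 = make_linear(feat_dim, num_ids, seed=1)  # identity classifier

    def encode(self, image):
        """Content feature from E1; the only part used at test time."""
        return l2_normalize(apply_linear(self.E1, image))

    def classify(self, image):
        """Identity logits from W1; used only during training."""
        return apply_linear(self.W1, self.encode(image))


def rank_gallery(branch, query, gallery):
    """Return gallery indices sorted by cosine similarity to the query (assumed metric)."""
    q = branch.encode(query)
    scores = [sum(a * b for a, b in zip(q, branch.encode(g))) for g in gallery]
    return sorted(range(len(gallery)), key=lambda i: scores[i], reverse=True)


if __name__ == "__main__":
    branch = ContentBranch(in_dim=4, feat_dim=3, num_ids=5)
    gallery = [[0.0, 1.0, 0.0, 0.0], [0.0, 0.0, 1.0, 0.0], [1.0, 0.0, 0.0, 0.0]]
    print(rank_gallery(branch, [1.0, 0.0, 0.0, 0.0], gallery))
```

In this sketch the classifier W₁ is never called by `rank_gallery`, which mirrors the text: only the content encoder E₁ is carried over to the testing process.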
