Panoramic video coding optimization algorithm based on user field of view

A panoramic video coding optimization technique, applied in the field of panoramic video coding, that addresses problems such as insufficient consideration of the characteristics of panoramic video and residual spatial and temporal redundancy.


Embodiment Construction

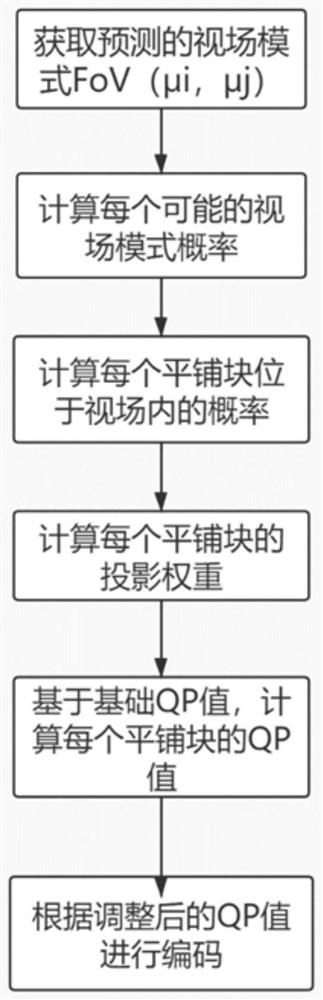

[0046] The panoramic video encoding optimization algorithm based on the user's field of view (FoV) describes the weighted FoV distortion of each tile according to two factors: the stretching characteristics of the panoramic video projection transformation, and the probability that the FoV region containing the tile is observed.
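The paragraph above does not give the exact weighting formula, so the following is only a minimal sketch under stated assumptions. It assumes an equirectangular projection (ERP), where the stretching is modelled with the cos(latitude) factor used by WS-PSNR, and evaluates that factor at each tile row's center. It then multiplies each tile's distortion by its stretch weight and its observation probability. The functions `erp_row_weights` and `weighted_fov_distortion` and their inputs are hypothetical names chosen for illustration, not the patent's notation.

```python
import math
from typing import List


def erp_row_weights(rows: int) -> List[float]:
    """Assumed stretch weight per tile row of an equirectangular (ERP) frame.

    ERP oversamples content near the poles; WS-PSNR-style weighting scales
    samples by cos(latitude). Here the latitude of each tile row's center is used.
    """
    weights = []
    for i in range(rows):
        # Latitude of the row center, running from near +pi/2 (top) to near -pi/2 (bottom).
        lat = math.pi / 2 - (i + 0.5) * math.pi / rows
        weights.append(math.cos(lat))
    return weights


def weighted_fov_distortion(tile_mse: List[List[float]],
                            observe_prob: List[List[float]]) -> float:
    """Sum over tiles of stretch weight * observation probability * distortion.

    tile_mse and observe_prob are hypothetical rows x cols grids of per-tile
    distortion (e.g. MSE) and per-tile probability of being observed.
    """
    w = erp_row_weights(len(tile_mse))
    total = 0.0
    for i, row in enumerate(tile_mse):
        for j, mse in enumerate(row):
            total += w[i] * observe_prob[i][j] * mse
    return total
```

Tiles near the poles receive small weights because their pixels cover little of the sphere, and tiles that are unlikely to be viewed contribute little regardless of their distortion.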

[0047] First, an existing saliency analysis algorithm is used to obtain the predicted field of view. Because the field of view has a fixed size, it can be represented by its center. The center of the predicted field of view is approximated by the nearest tile center, so the field of view can be denoted FoV(i, j), where i and j are the row and column indices of that tile.
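The snapping step can be sketched as follows, assuming the predicted FoV center is given in pixel coordinates (x, y) of a width × height frame split into a uniform rows × cols tile grid. The function name `nearest_tile` and the pixel-coordinate input are illustrative assumptions. On a uniform rectangular grid, the nearest tile center is the center of the tile that contains the point, so integer division is enough.

```python
from typing import Tuple


def nearest_tile(x: float, y: float, width: int, height: int,
                 rows: int, cols: int) -> Tuple[int, int]:
    """Return (i, j), the row and column of the tile center nearest to (x, y).

    Assumes 0 <= x <= width and 0 <= y <= height on a uniform tile grid.
    """
    tile_w = width / cols
    tile_h = height / rows
    # Clamp so points on the right or bottom border map to the last tile.
    i = min(int(y // tile_h), rows - 1)
    j = min(int(x // tile_w), cols - 1)
    return i, j
```

For example, on a 3840 × 1920 frame with a 4 × 6 grid (640 × 480 tiles), a predicted center at (1920, 960) is recorded as FoV(2, 3).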

[0048] Because viewpoint prediction may be inaccurate, all field-of-view modes are assumed to follow a two-dimensional Gaussian distribution centered on the predicted field of view, and the probability of each field-of-view mode is calculated from this distribution.
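A minimal sketch of this step, under assumptions the source does not state: the Gaussian is evaluated on the discrete tile grid of FoV modes FoV(i, j) and normalized so the probabilities sum to 1. The spreads `sigma_r` and `sigma_c` are hypothetical parameters. The column distance optionally wraps around because longitude is periodic in 360-degree video. The function name `fov_mode_probabilities` is illustrative only.

```python
import math
from typing import List


def fov_mode_probabilities(i0: int, j0: int, rows: int, cols: int,
                           sigma_r: float = 1.0, sigma_c: float = 1.0,
                           wrap_columns: bool = True) -> List[List[float]]:
    """Probability of each FoV mode FoV(i, j) under an assumed 2-D Gaussian
    centered on the predicted mode FoV(i0, j0), normalized over the grid."""
    weights = [[0.0] * cols for _ in range(rows)]
    total = 0.0
    for i in range(rows):
        for j in range(cols):
            di = i - i0
            dj = abs(j - j0)
            if wrap_columns:
                # Assumed: longitude wraps around, so take the shorter way round.
                dj = min(dj, cols - dj)
            g = math.exp(-(di * di) / (2 * sigma_r ** 2)
                         - (dj * dj) / (2 * sigma_c ** 2))
            weights[i][j] = g
            total += g
    # Normalize so that the mode probabilities sum to 1.
    return [[g / total for g in row] for row in weights]
```

The predicted mode receives the highest probability, and the probability decays with tile distance from it. Larger sigma values express less confidence in the viewpoint prediction.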

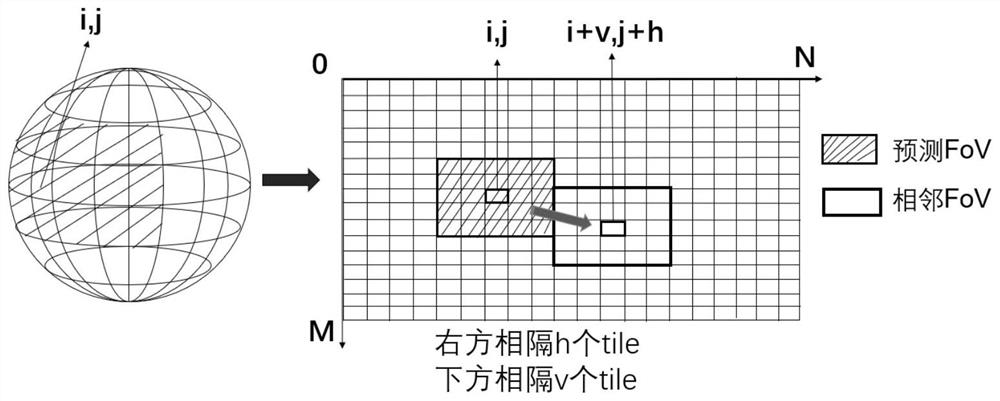

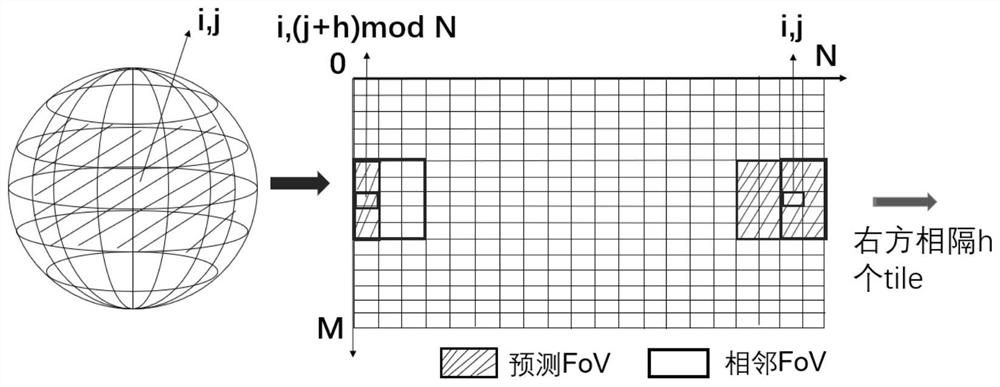

[0049] The vector tile...
