Federated learning method based on dynamically adjusting model aggregation weights

A federated learning method that dynamically adjusts model aggregation weights, applied in computing models, machine learning, and related computing fields. It addresses the problem that existing approaches do not offer a universal, effective solution for heterogeneous data. The intended effects are a higher-quality global model, a more rational distribution of aggregation weights, and faster convergence.
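As a minimal sketch of what weighted model aggregation looks like in general, the Python below averages client parameter vectors using per-client weights. The source text available here does not state how the weights are computed, so the `scores` input, the function names `normalize_weights` and `aggregate`, and the uniform fallback are all assumptions for illustration and are not the patented rule.

```python
from typing import List, Sequence


def normalize_weights(scores: Sequence[float]) -> List[float]:
    """Turn non-negative per-client scores into weights that sum to 1.

    The scores are hypothetical inputs; how they would be derived in each
    round is not specified in the source text.
    """
    if not scores:
        raise ValueError("need at least one client score")
    if any(s < 0 for s in scores):
        raise ValueError("scores must be non-negative")
    total = sum(scores)
    if total == 0:
        # Assumed fallback: treat all clients equally when every score is zero.
        return [1.0 / len(scores)] * len(scores)
    return [s / total for s in scores]


def aggregate(client_params: Sequence[Sequence[float]],
              scores: Sequence[float]) -> List[float]:
    """Weighted average of flat client parameter vectors."""
    if len(client_params) != len(scores):
        raise ValueError("one score is required per client")
    weights = normalize_weights(scores)
    dim = len(client_params[0])
    if any(len(p) != dim for p in client_params):
        raise ValueError("all clients must report the same number of parameters")
    # Each global parameter is the weight-scaled sum of the client values.
    return [
        sum(w * p[i] for w, p in zip(weights, client_params))
        for i in range(dim)
    ]


if __name__ == "__main__":
    # Two clients, two parameters each; the second client gets three times the weight.
    print(aggregate([[1.0, 2.0], [3.0, 4.0]], [1.0, 3.0]))
```

Recomputing the scores each round and passing them to `aggregate` is one way to make the weights "dynamic"; setting every score to the client's sample count would reduce this to ordinary sample-weighted averaging.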

Embodiment Construction

[0017] It should be understood that all combinations of the foregoing concepts as well as additional concepts described in more detail below may be considered part of the inventive subject matter, provided such concepts are not mutually inconsistent. Additionally, all combinations of claimed subject matter are considered part of the inventive subject matter.

[0018] The foregoing and other aspects, embodiments, and features of the present teachings can be more fully understood from the following description when taken in conjunction with the accompanying drawings. Additional aspects of the invention, such as the features and/or advantages of the exemplary embodiments, will be apparent from the description below or may be learned by practicing specific embodiments in accordance with the teachings of the invention.

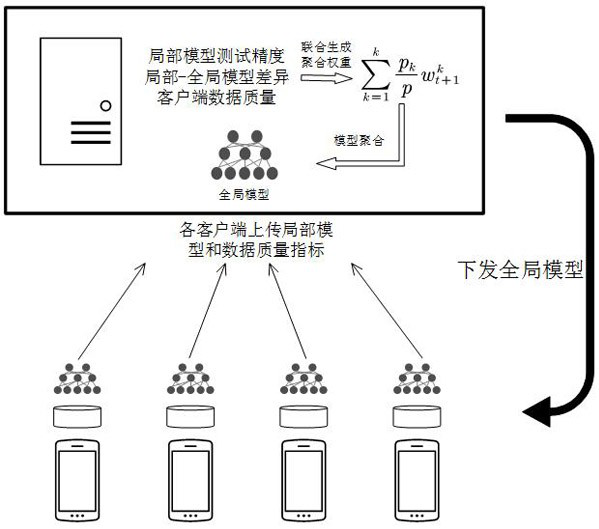

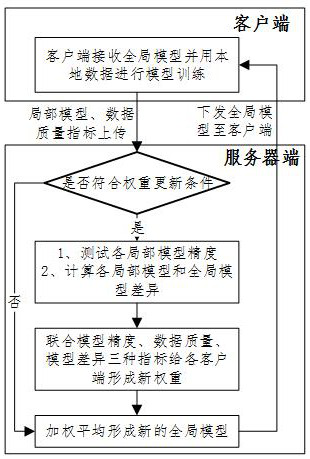

[0019] The federated learning method based on dynamically adjusting model aggregation weights of the present invention is applicable to federated learning includin...
