Image-recipe retrieval method based on intra-modal and inter-modal hybrid fusion

An image-recipe retrieval technique, applied in the field of cross-modal retrieval, that addresses problems such as poor cross-modal retrieval performance.

Embodiment 1

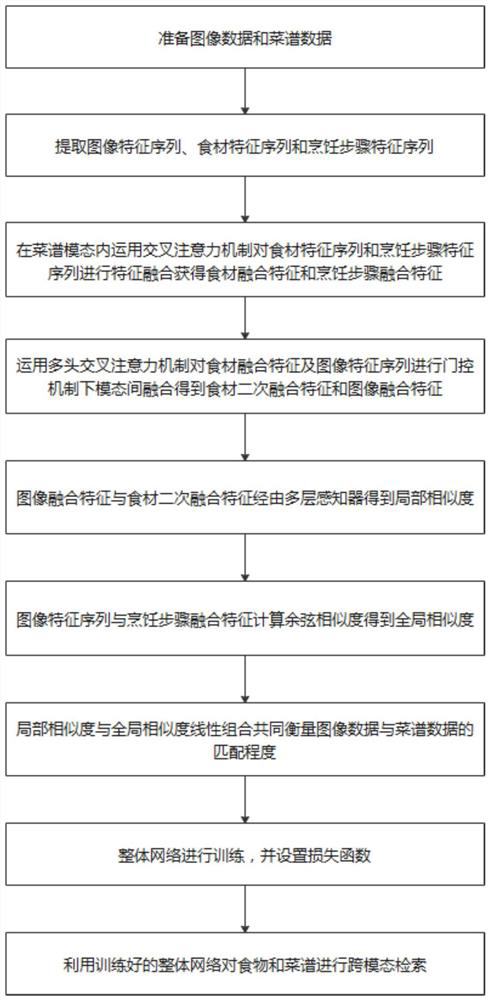

[0049] As shown in Figure 1, the image-recipe retrieval method based on intra-modal and inter-modal hybrid fusion includes the following steps:

[0050] Step 1. Prepare image data and recipe data;

[0051] Step 2. Build the overall network;

[0052] Step 3. Train the overall network of step 2, and set the loss function;

[0053] Step 4. Use the trained overall network for cross-modal retrieval between food images and recipes.
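
Step 4 can be illustrated with a minimal sketch, assuming the trained network maps each food image and each recipe to a fixed-length embedding in a shared space and that candidates are ranked by cosine similarity. The source does not state the similarity measure; cosine similarity, the function names `cosine_similarity` and `rank_recipes`, and the toy vectors below are assumptions made for illustration only.

```python
import math


def cosine_similarity(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0  # treat a zero vector as matching nothing
    return dot / (norm_a * norm_b)


def rank_recipes(image_embedding, recipe_embeddings):
    """Return recipe indices ordered from most to least similar to the image."""
    scores = [cosine_similarity(image_embedding, r) for r in recipe_embeddings]
    return sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)


# Toy embeddings (hypothetical; a real system would use the network's outputs).
image_embedding = [1.0, 0.0, 1.0]
recipe_embeddings = [
    [0.0, 1.0, 0.0],  # orthogonal to the image embedding
    [2.0, 0.0, 2.0],  # same direction as the image embedding
    [1.0, 1.0, 0.0],  # partially aligned
]

ranking = rank_recipes(image_embedding, recipe_embeddings)
```

The same ranking routine works in the opposite direction (recipe to image) by swapping the roles of the query and the candidate set.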

[0054] In the above method, the image data prepared in step 1 includes food images, and the recipe data includes ingredients and cooking steps;
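
One way to picture the data prepared in step 1 is a paired record holding a food image together with its recipe text. The sketch below is an assumption about how such a pair might be stored; the class name `RecipeSample`, its field names, the file path, and the sample contents are hypothetical and do not come from the source.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class RecipeSample:
    """One image-recipe pair (hypothetical layout for illustration)."""
    image_path: str           # location of the food image
    ingredients: List[str]    # one entry per ingredient
    cooking_steps: List[str]  # one entry per cooking step, in order


# Made-up example pair.
sample = RecipeSample(
    image_path="images/example.jpg",
    ingredients=["2 eggs", "1 tomato"],
    cooking_steps=["Beat the eggs.", "Stir-fry the tomato, then add the eggs."],
)
```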

[0055] In this embodiment, step 2 specifically includes the following steps:

[0056] Step 21. Extract the image feature sequence, the ingredient feature sequence and the cooking step feature sequence;

[0057] Step 22. Use the cross-attention mechanism within the recipe modality to fuse the ingredient feature sequence and the cooking step feature sequence, obtaining the ingredient fusion feature and the cook...
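
The intra-modal fusion described in step 22 can be sketched as follows, assuming plain scaled dot-product cross-attention in which each sequence queries the other. The source names the cross-attention mechanism but, in the text available here, does not give its exact form; the absence of learned projection matrices, the function names, and the toy feature values are all simplifying assumptions.

```python
import math


def softmax(values):
    """Numerically stable softmax over a list of floats."""
    peak = max(values)
    exps = [math.exp(v - peak) for v in values]
    total = sum(exps)
    return [e / total for e in exps]


def cross_attention(queries, keys_values):
    """Let each query vector attend over the other sequence.

    Assumption: keys and values are the same vectors and no learned
    projections are applied, which a real network would normally add.
    """
    dim = len(queries[0])
    fused = []
    for q in queries:
        scores = [
            sum(a * b for a, b in zip(q, k)) / math.sqrt(dim)
            for k in keys_values
        ]
        weights = softmax(scores)
        fused.append(
            [sum(w * kv[j] for w, kv in zip(weights, keys_values)) for j in range(dim)]
        )
    return fused


# Toy feature sequences (hypothetical values, dimension 2).
ingredient_seq = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
step_seq = [[0.5, 0.5], [1.0, 0.0]]

# Ingredients attend to cooking steps, and cooking steps attend to ingredients.
ingredient_fused = cross_attention(ingredient_seq, step_seq)
step_fused = cross_attention(step_seq, ingredient_seq)
```

Each output sequence keeps the length of its query sequence, so the ingredient side still has one fused vector per ingredient and the cooking step side one per step.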
