Multi-camera combined large-scene crowd counting method

A crowd counting technology for large scenes, applied in the field of computer vision, that addresses problems such as the use of multiple cameras in large-scale scenes, overlapping monitoring areas, and the complex distribution of monitoring cameras.



Examples

Embodiment 1

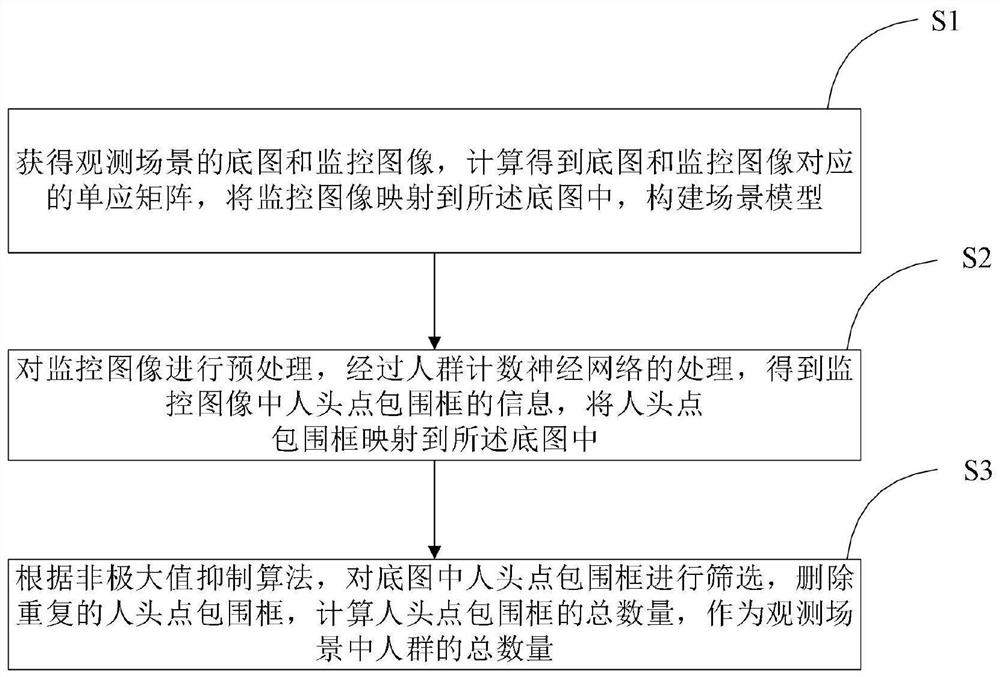

[0022] As shown in Figure 1, a multi-camera combined large-scene crowd counting method provided by an embodiment of the present invention includes the following steps:

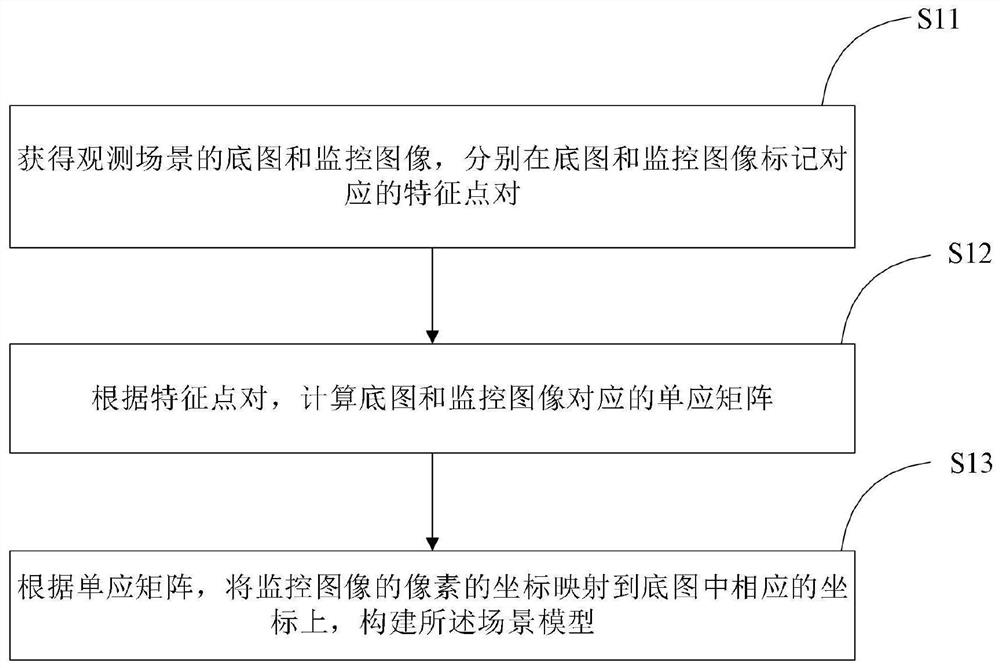

[0023] Step S1: Obtain the base map and the monitoring image of the observed scene, calculate the homography matrix between the monitoring image and the base map, use it to map the monitoring image onto the base map, and construct a scene model;

[0024] Step S2: Preprocess the monitoring image, process it with the crowd counting neural network to obtain the head-point bounding boxes in the monitoring image, and map these head-point bounding boxes onto the base map;

[0025] Step S3: Filter the head-point bounding boxes on the base map with the non-maximum suppression (NMS) algorithm to delete duplicate head-point bounding boxes, and take the total number of remaining head-point bounding boxes as the total number of people in the observation sc...
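The three steps can be sketched end to end as follows. This is a minimal illustration under stated assumptions, not the patented implementation. The homographies are estimated with a plain direct linear transform (DLT) from four or more point correspondences per camera, because the source does not say how they are computed. Each head bounding box is mapped onto the base map by projecting its four corners and taking their axis-aligned envelope. Each detection also carries a confidence score that orders the NMS pass. The crowd counting neural network itself is out of scope, so detections are passed in directly. All function names (`estimate_homography`, `map_box`, `nms`, `count_people`) and the toy data are hypothetical.

```python
import numpy as np


def estimate_homography(src_pts, dst_pts):
    """Estimate H (3x3) with dst ~ H @ src via the direct linear transform.

    src_pts: (N, 2) pixel coordinates in a monitoring image, N >= 4.
    dst_pts: (N, 2) matching coordinates on the base map.
    (Assumption: correspondences are given; no coordinate normalization.)
    """
    rows = []
    for (x, y), (u, v) in zip(np.asarray(src_pts, float), np.asarray(dst_pts, float)):
        rows.append([-x, -y, -1, 0, 0, 0, u * x, u * y, u])
        rows.append([0, 0, 0, -x, -y, -1, v * x, v * y, v])
    # The solution is the right singular vector of the smallest singular value.
    _, _, vt = np.linalg.svd(np.asarray(rows))
    h = vt[-1].reshape(3, 3)
    return h / h[2, 2]


def project_points(h, pts):
    """Apply homography h to (N, 2) points, returning (N, 2) points."""
    pts = np.asarray(pts, float)
    homog = np.hstack([pts, np.ones((len(pts), 1))]) @ h.T
    return homog[:, :2] / homog[:, 2:3]


def map_box(h, box):
    """Map (x1, y1, x2, y2) to the base map as the envelope of its projected corners."""
    x1, y1, x2, y2 = box
    corners = project_points(h, [(x1, y1), (x2, y1), (x2, y2), (x1, y2)])
    return (*corners.min(axis=0), *corners.max(axis=0))


def iou(a, b):
    """Intersection over union of two (x1, y1, x2, y2) boxes."""
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def nms(boxes, scores, iou_thresh=0.5):
    """Greedy NMS: keep boxes in descending score order unless they overlap a kept box."""
    order = sorted(range(len(boxes)), key=lambda i: scores[i], reverse=True)
    keep = []
    for i in order:
        if all(iou(boxes[i], boxes[j]) <= iou_thresh for j in keep):
            keep.append(i)
    return keep


def count_people(cameras, iou_thresh=0.5):
    """cameras: list of (h, boxes, scores); boxes are in that camera's pixels."""
    mapped, scores = [], []
    for h, boxes, cam_scores in cameras:
        mapped.extend(map_box(h, b) for b in boxes)
        scores.extend(cam_scores)
    return len(nms(mapped, scores, iou_thresh))


# Toy example: camera B's view is shifted 50 base-map units to the right of camera A's.
SQUARE = [(0, 0), (100, 0), (100, 100), (0, 100)]
SHIFTED = [(50, 0), (150, 0), (150, 100), (50, 100)]
H_A = estimate_homography(SQUARE, SQUARE)
H_B = estimate_homography(SQUARE, SHIFTED)

cameras = [
    (H_A, [(60, 10, 70, 20), (10, 10, 20, 20)], [0.9, 0.8]),
    (H_B, [(10, 10, 20, 20), (80, 50, 90, 60)], [0.85, 0.7]),  # first box = same person as A's first
]
total = count_people(cameras)
print(total)  # 3: four detections, one cross-camera duplicate removed
```

The overlapping region seen by both cameras produces two detections of the same person. After both are projected onto the base map they coincide, and NMS keeps only the higher-scoring one. If the homography is fitted to ground-plane correspondences, head points that sit above that plane will not align exactly across cameras. The IoU threshold (0.5 here, an arbitrary choice) would therefore need tuning on real footage.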

