Method and device for obtaining pre-trained model

A pre-training and model technology, applied in the field of natural language processing and deep learning technology, to achieve good semantic understanding and generalizable representation

- Summary

- Abstract

- Description

- Claims

- Application Information

AI Technical Summary

Problems solved by technology

Method used

Image

Examples

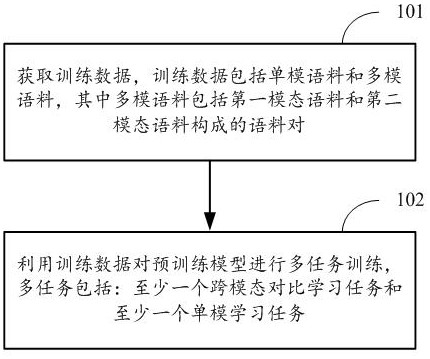

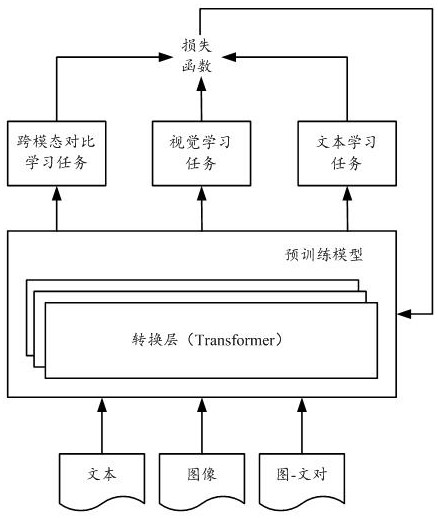

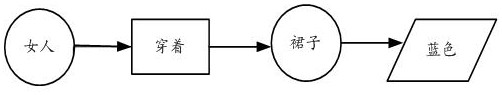

Embodiment Construction

[0029] Exemplary embodiments of the present disclosure are described below in conjunction with the accompanying drawings, which include various details of the embodiments of the present disclosure to facilitate understanding, and they should be regarded as exemplary only. Accordingly, those of ordinary skill in the art will recognize that various changes and modifications of the embodiments described herein can be made without departing from the scope and spirit of the disclosure. Also, descriptions of well-known functions and constructions are omitted in the following description for clarity and conciseness.

[0030] Most of the existing pre-training models can only process single-mode data. For example, the BERT (BidirectionalEncoder Representation from Transformers, bidirectional encoding representation from the converter) model can only learn and process text data. The SimCLR (Simple Framework for Contrastive Learning of Visual Representations) model can only learn and pro...

PUM

Login to View More

Login to View More Abstract

Description

Claims

Application Information

Login to View More

Login to View More - Generate Ideas

- Intellectual Property

- Life Sciences

- Materials

- Tech Scout

- Unparalleled Data Quality

- Higher Quality Content

- 60% Fewer Hallucinations

Browse by: Latest US Patents, China's latest patents, Technical Efficacy Thesaurus, Application Domain, Technology Topic, Popular Technical Reports.

© 2025 PatSnap. All rights reserved.Legal|Privacy policy|Modern Slavery Act Transparency Statement|Sitemap|About US| Contact US: help@patsnap.com