Text-to-image generation method based on a long-range correlation attention generative adversarial network

A technology involving image generation and attention, applied in fields such as biological neural network models, image data processing, and 2D image generation. It can solve problems such as the inability to judge whether an output result is real.

Detailed Description of Embodiments

[0055] To make the above objects, features, and advantages of the present invention clearer and easier to understand, the technical solutions of the present invention are described below with reference to its embodiments. The specific implementation process of the present invention is as follows:

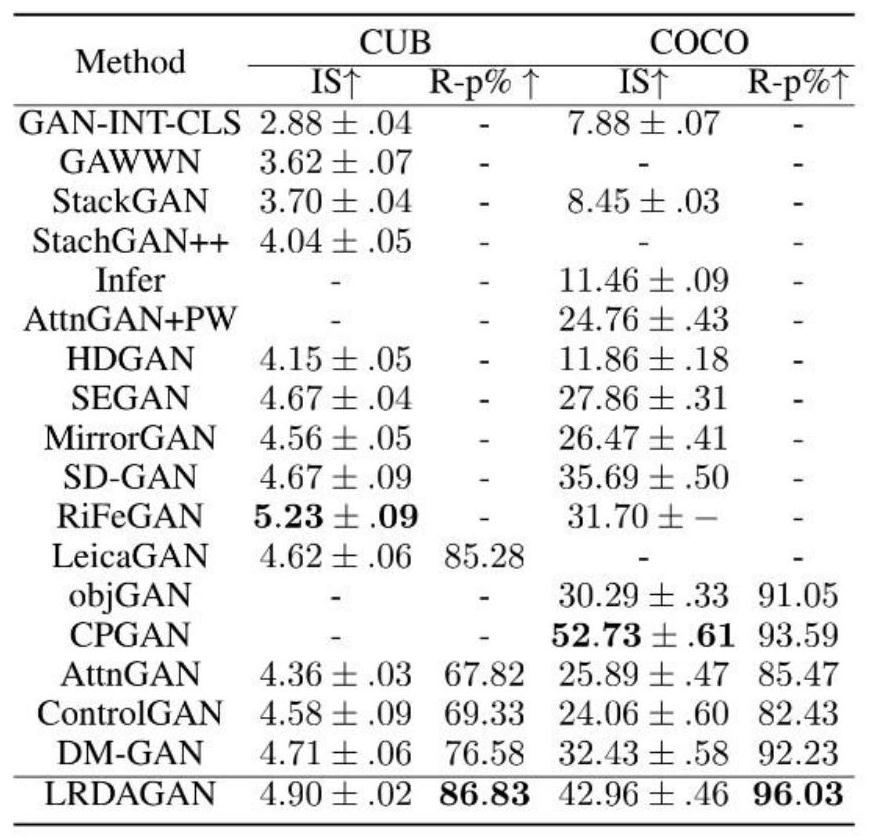

[0056] Step 1: Divide the dataset into a training set and a test set. Following previous text-to-image methods, our approach is evaluated on the Caltech-UCSD Birds 200 dataset (CUB) and the Microsoft Common Objects in Context dataset (COCO). The CUB dataset contains 11,788 bird images belonging to 200 categories, and each image has 10 visual description sentences. For text-to-image synthesis, the COCO dataset is more diverse and challenging; it has 80K training images and 40K test images, and each image has 5 visual description sentences.
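Step 1 can be illustrated with a minimal Python sketch that splits at the image level, so every description sentence stays with its image. Both benchmarks come with their own conventional splits, and the text does not state a split ratio, so the `split_dataset` and `count_pairs` helpers, the `test_fraction` parameter, and the seeded shuffle are hypothetical choices made only for illustration.

```python
import random
from typing import Dict, List, Tuple

# Hypothetical record: image identifier -> list of its visual description sentences.
Dataset = Dict[str, List[str]]


def split_dataset(
    data: Dataset, test_fraction: float = 0.25, seed: int = 0
) -> Tuple[Dataset, Dataset]:
    """Split by image (not by sentence) so no image appears in both sets.

    test_fraction and seed are illustrative assumptions, not values from the source.
    """
    if not 0.0 < test_fraction < 1.0:
        raise ValueError("test_fraction must be in (0, 1)")
    image_ids = sorted(data)                 # deterministic order before shuffling
    random.Random(seed).shuffle(image_ids)   # reproducible shuffle
    n_test = int(round(len(image_ids) * test_fraction))
    test_ids = set(image_ids[:n_test])
    train = {i: data[i] for i in image_ids if i not in test_ids}
    test = {i: data[i] for i in image_ids if i in test_ids}
    return train, test


def count_pairs(data: Dataset) -> int:
    """Number of (image, sentence) pairs in a split."""
    return sum(len(sentences) for sentences in data.values())


if __name__ == "__main__":
    # Toy data: 8 images with 10 sentences each, mirroring CUB's 10 sentences per image.
    toy = {f"img_{k}": [f"sentence {j} of image {k}" for j in range(10)] for k in range(8)}
    train, test = split_dataset(toy, test_fraction=0.25)
    print(len(train), len(test), count_pairs(train))  # 6 2 60
```

Splitting by image rather than by sentence prevents a test image from leaking into training through one of its other descriptions. At full scale, CUB's 11,788 images with 10 sentences each give 117,880 image–sentence pairs, and COCO's roughly 80K training images with 5 sentences each give roughly 400K training pairs.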

[0057] Step 2: Data preprocessing. The preprocessing steps are: build a dictionary and add NULL to it; build text vectors, using a one-dimensional vector of length 18, the ...
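The visible part of Step 2 (a dictionary that contains a NULL entry, and one-dimensional text vectors of length 18) can be sketched in Python as below. The source text is truncated right after the vector length, so everything beyond that is an assumption: lowercase whitespace tokenization, NULL taking index 0, NULL also standing in for padding and for unknown words, and truncation of sentences longer than 18 tokens. The names `build_dictionary`, `encode_caption`, and the `<NULL>` spelling are hypothetical.

```python
from typing import Dict, List

NULL = "<NULL>"   # assumed spelling of the NULL entry
MAX_LEN = 18      # text vector length stated in the source


def tokenize(sentence: str) -> List[str]:
    # Assumption: lowercase and split on whitespace; the source does not specify tokenization.
    return sentence.lower().split()


def build_dictionary(captions: List[str]) -> Dict[str, int]:
    """Map each word to an integer id; NULL is added first, so it gets id 0 (assumption)."""
    vocab: Dict[str, int] = {NULL: 0}
    for caption in captions:
        for word in tokenize(caption):
            if word not in vocab:
                vocab[word] = len(vocab)
    return vocab


def encode_caption(caption: str, vocab: Dict[str, int], max_len: int = MAX_LEN) -> List[int]:
    """Return a 1-D vector of exactly max_len word ids.

    Assumptions: unknown words map to NULL, long sentences are truncated,
    and short sentences are right-padded with the NULL id.
    """
    ids = [vocab.get(word, vocab[NULL]) for word in tokenize(caption)][:max_len]
    return ids + [vocab[NULL]] * (max_len - len(ids))


if __name__ == "__main__":
    vocab = build_dictionary(["this bird has a red crown", "a small bird with white wings"])
    print(encode_caption("a red bird", vocab))
```

With the two example sentences above, `a red bird` encodes to `[4, 5, 2]` followed by 15 NULL ids (0), giving a vector of length 18. Reserving a single NULL id for both padding and unknown words keeps the sketch small; a real implementation might separate the two.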
