Quantifying perceptual quality model uncertainty via bootstrapping

A technique relating to perceptual quality models, applied in the fields of computer science and video technology, that addresses the problem that the accuracy of BD-rate values is unknown, enabling more reliable testing and optimized encoding operations.

Embodiment Construction

[0021] In the following description, numerous specific details are set forth to provide a more thorough understanding of the present invention. However, those skilled in the art will appreciate that the present invention may be practiced without one or more of these specific details.

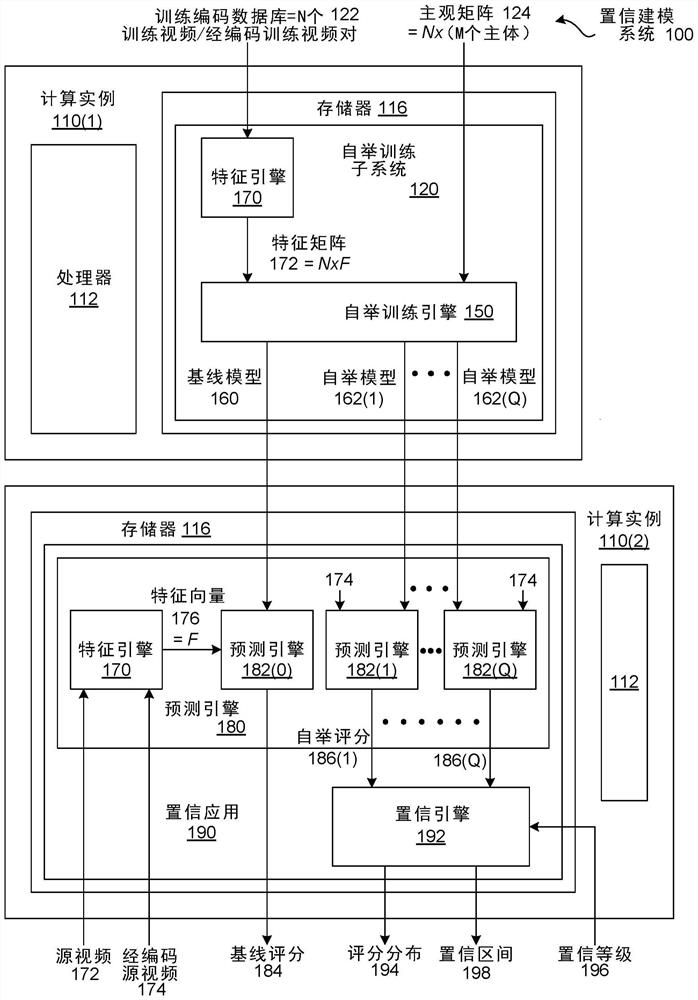

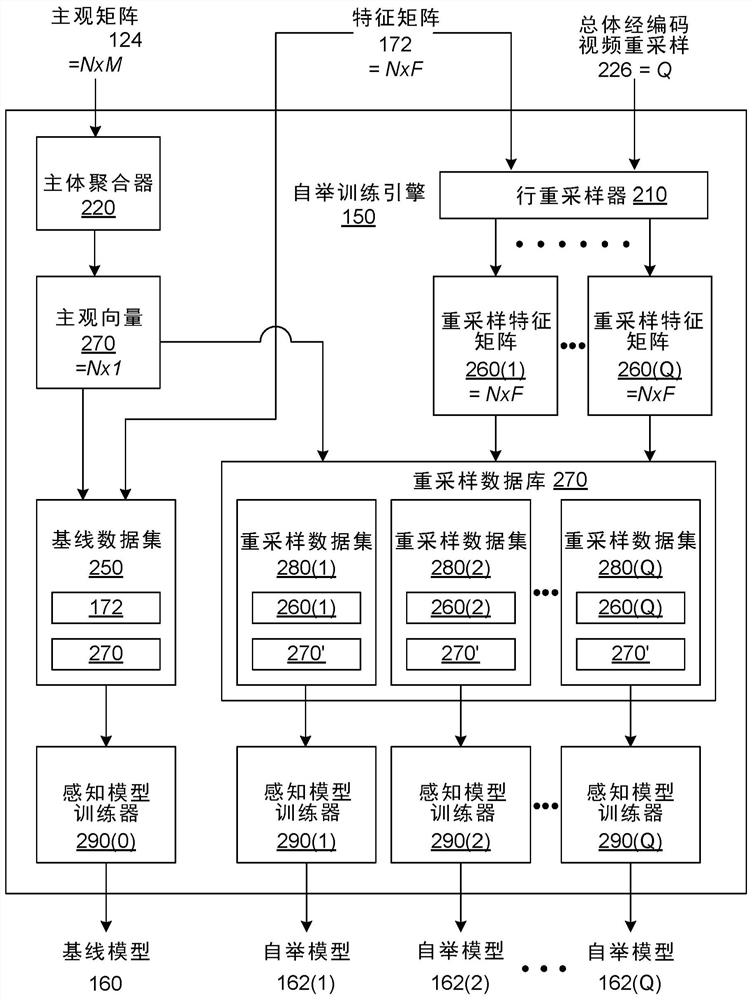

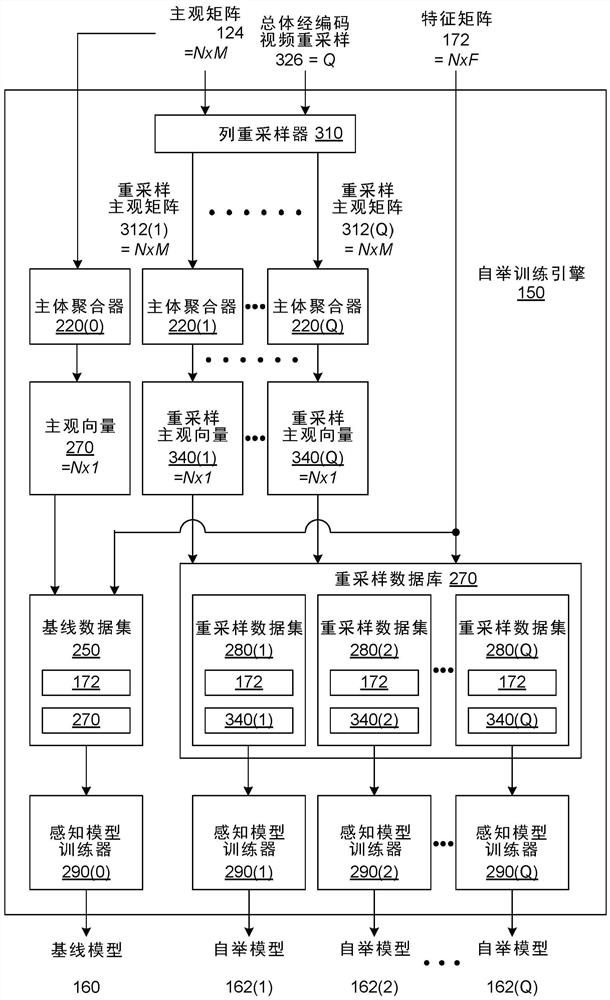

[0022] To optimize the overall visual experience that a media service provides to viewers, media service providers often implement automated encoded video quality prediction as part of their encoding and streaming infrastructure. For example, a media service provider can use automated encoded video quality prediction to evaluate encoders/decoders (codecs) and/or to fine-tune streaming bitrates, thereby optimizing the quality of the encoded video. In a typical prior-art technique for assessing the quality of encoded video, a training application performs machine learning operations based on raw opinion scores associated with a set of encoded training videos to generate a perceptual quality model. The origi...
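The excerpt breaks off before the bootstrapping procedure named in the title is described, so the following is only a minimal sketch of the general idea, not the patent's method. It assumes a single hypothetical objective feature per encoded video, a one-feature least-squares line as a stand-in for the perceptual quality model, and invented toy opinion scores. The training set is resampled with replacement, one model is fitted per resample, and the spread of the resulting predictions is reported as a percentile interval.

```python
import random
import statistics


def fit_line(xs, ys):
    """Ordinary least squares for y = a * x + b with a single feature."""
    mean_x = statistics.fmean(xs)
    mean_y = statistics.fmean(ys)
    sxx = sum((x - mean_x) ** 2 for x in xs)
    if sxx == 0.0:
        # Degenerate resample (all x identical): fall back to a constant model.
        return 0.0, mean_y
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    a = sxy / sxx
    return a, mean_y - a * mean_x


def bootstrap_predictions(features, scores, query, n_models=200, seed=0):
    """Fit one model per bootstrap resample and predict the query's score."""
    rng = random.Random(seed)
    n = len(features)
    preds = []
    for _ in range(n_models):
        # Resample training items with replacement (the bootstrap step).
        idx = [rng.randrange(n) for _ in range(n)]
        a, b = fit_line([features[i] for i in idx], [scores[i] for i in idx])
        preds.append(a * query + b)
    return preds


def percentile_interval(values, level=0.95):
    """Simple percentile interval over the bootstrap predictions."""
    ordered = sorted(values)
    lo_idx = int((1 - level) / 2 * (len(ordered) - 1))
    hi_idx = int((1 + level) / 2 * (len(ordered) - 1))
    return ordered[lo_idx], ordered[hi_idx]


# Hypothetical toy data: one objective feature and one opinion score per video.
features = [0.1, 0.2, 0.35, 0.5, 0.6, 0.75, 0.9]
scores = [1.2, 1.9, 2.4, 3.1, 3.4, 4.2, 4.6]

preds = bootstrap_predictions(features, scores, query=0.55)
low, high = percentile_interval(preds)
print(f"mean prediction: {statistics.fmean(preds):.2f}")
print(f"95% percentile interval: [{low:.2f}, {high:.2f}]")
```

A wide interval would indicate that the predicted score depends strongly on which training videos happened to be sampled. That is the kind of uncertainty a single trained model cannot report on its own.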
