Method for semantic completion of single depth map point cloud scene

A depth map completion technique, applied in image enhancement, image analysis, image data processing, and related fields, that addresses problems such as the inability to obtain a complete point cloud.

Embodiment Construction

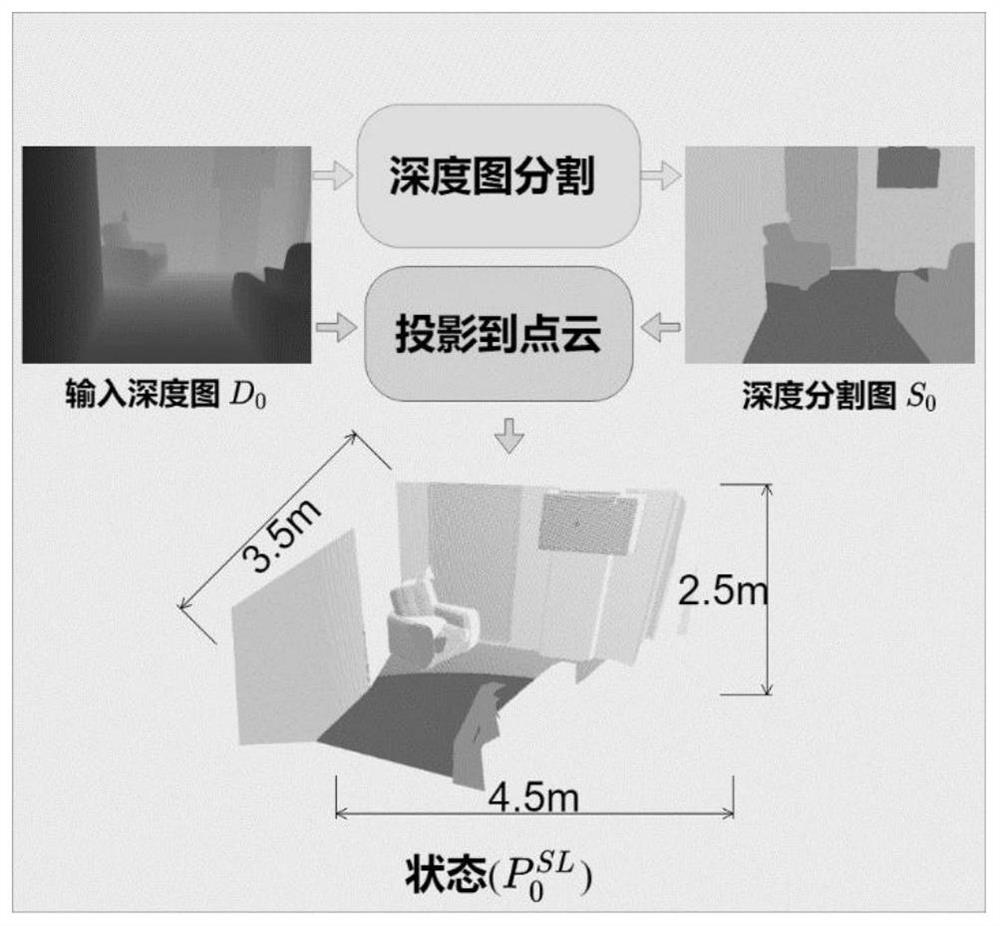

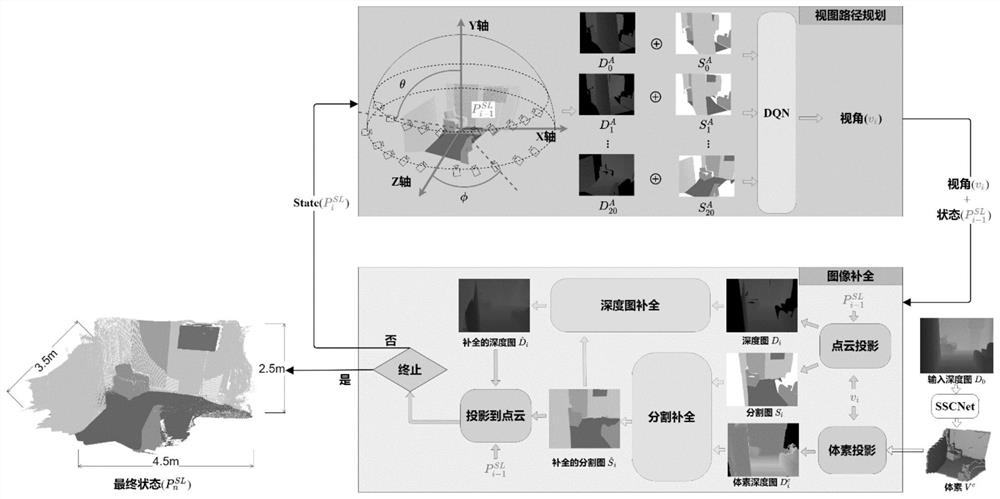

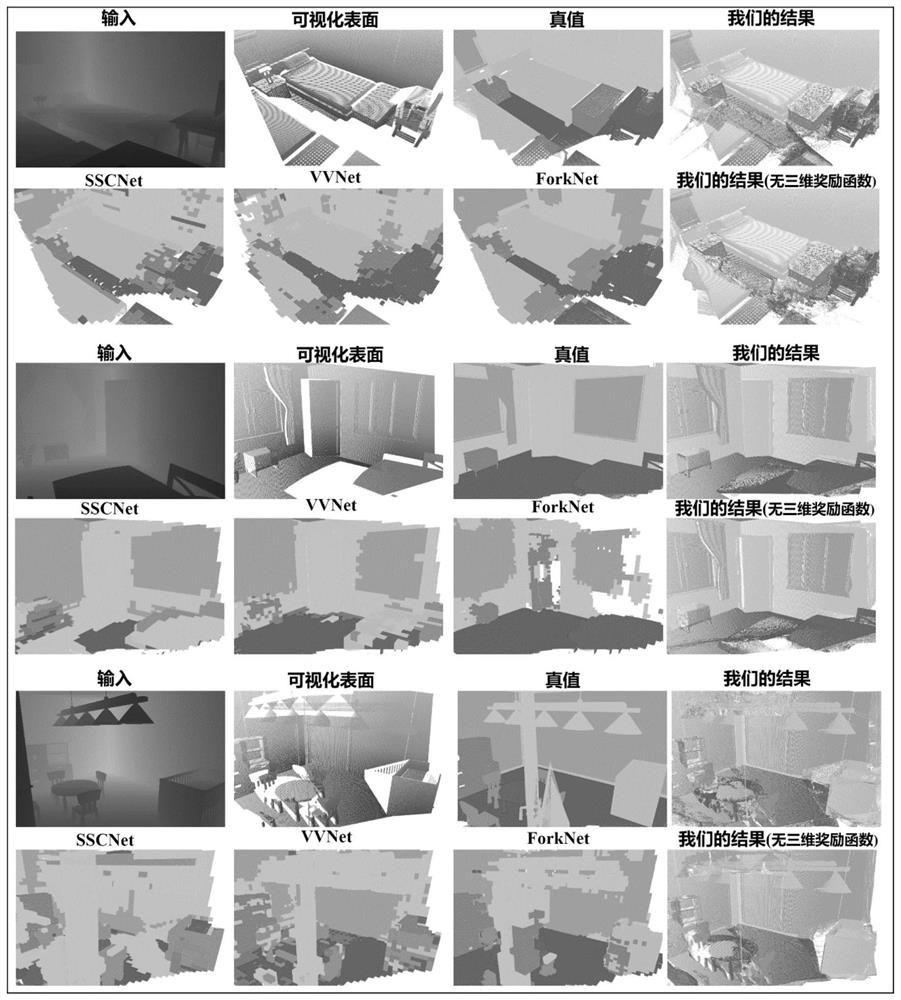

[0051] Specific embodiments of the present invention are further described below with reference to the drawings and technical solutions.

[0052] In this embodiment, a training set and a test set are generated from the SUNCG dataset. A total of 1590 scenes are randomly selected for rendering; 1439 of them are used for DQN training and the remaining 151 for DQN testing. To train the segmentation completion and depth map completion networks, 5 or 6 viewpoints defined in the action space are randomly selected and applied to the above 1590 scenes to render more than 10,000 sets of depth maps and semantic segmentation ground-truth values. Of these, one thousand sets are used to test the present invention.
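The scene split and viewpoint selection described above can be sketched as follows. This is only an illustrative sketch, not the patent's implementation: the function names, the fixed seed, and the action-space size of 20 are hypothetical, and the source does not say whether viewpoints are drawn once or per scene (the sketch assumes per scene). Only the counts 1590, 1439, and "5 or 6" come from the text.

```python
import random

NUM_SCENES = 1590  # scenes selected for rendering (from the text)
NUM_TRAIN = 1439   # scenes used for DQN training (from the text)


def split_scenes(scene_ids, num_train=NUM_TRAIN, seed=0):
    """Shuffle scene IDs and split them into DQN training and testing subsets."""
    rng = random.Random(seed)  # fixed seed is an assumption, for reproducibility
    shuffled = list(scene_ids)
    rng.shuffle(shuffled)
    return shuffled[:num_train], shuffled[num_train:]


def sample_viewpoints(action_space, rng):
    """Randomly pick 5 or 6 distinct viewpoints from the action space."""
    k = rng.choice([5, 6])
    return rng.sample(action_space, k)


# Hypothetical stand-ins: integer scene IDs and a 20-viewpoint action space.
scene_ids = list(range(NUM_SCENES))
action_space = list(range(20))  # assumed size; not stated in the source

train_scenes, test_scenes = split_scenes(scene_ids)

rng = random.Random(1)
# Assumption: viewpoints are sampled independently for each scene.
views_per_scene = {sid: sample_viewpoints(action_space, rng) for sid in scene_ids}
```

With these numbers the held-out DQN test subset contains 1590 − 1439 = 151 scenes. Each selected viewpoint would then be passed to a renderer to produce a depth map and its semantic segmentation ground truth; that rendering step is outside this sketch.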

[0053] The present invention includes four main components: a depth map semantic segmentation network, a voxel completion network, a segmentation completion network, and a depth map completion network. All required DCNN networks are impleme...
