A saliency-based multimodal few-shot learning method

A learning method using multimodal technology, applied in character and pattern recognition, biological neural network models, instruments, and related fields. It addresses problems such as data difficulties, the high cost in manpower and financial resources, and limited model applicability, with the effect of enhancing classification ability, strengthening availability, and enriching feature representation.


Detailed Description of the Embodiments

[0023] The present invention will be described in further detail below in conjunction with the accompanying drawings and specific embodiments. It should be understood that the specific embodiments described here are only used to explain the present invention, not to limit the present invention.

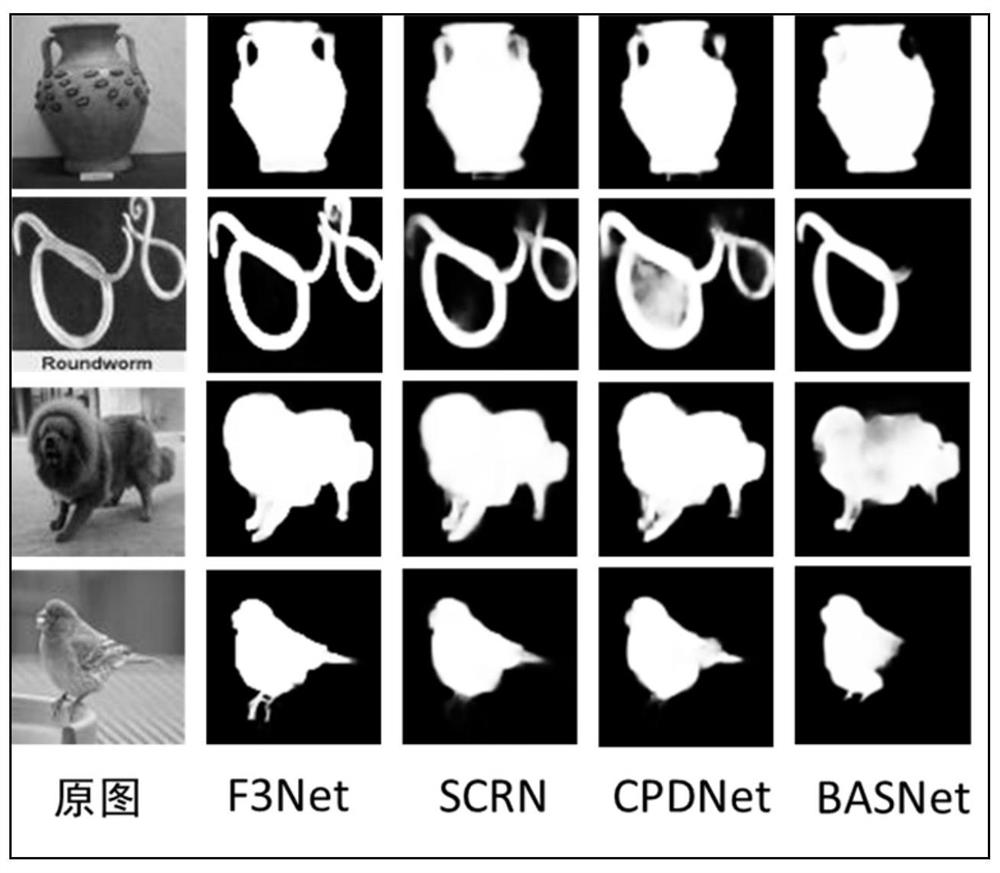

[0024] The saliency-based multimodal few-shot learning method provided by the present invention adds three operations to traditional few-shot classification: saliency image extraction, multimodal information combination, and label propagation. First, a saliency detection network produces the saliency map of the image, from which the foreground and background regions of the image are obtained. Next, a multimodal hybrid model combines the semantic information with the visual information, so that the semantic information assists the visual information in classification. Finally, using the data, the manifold structure of the data samples is construct...
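The last two stages named in paragraph [0024] can be illustrated with a minimal sketch. The source text is truncated and gives no formulas, so everything here is an assumption: the convex combination in `mix_modalities`, the k-nearest-neighbour Gaussian graph, the closed-form propagation `F = (I - alpha * S)^(-1) Y`, and all function and parameter names (`lam`, `k`, `alpha`, `sigma`) are hypothetical choices, not the patent's actual method. The saliency detection network is omitted entirely.

```python
import numpy as np


def mix_modalities(visual, semantic, lam):
    """Assumed fusion rule: convex combination of visual and semantic vectors."""
    return lam * visual + (1.0 - lam) * semantic


def propagate_labels(features, support_labels, n_classes, k=3, alpha=0.5, sigma=1.0):
    """Label propagation over a kNN graph (illustrative, not the patent's formula).

    The first len(support_labels) rows of `features` are the labeled support
    samples; the remaining rows are unlabeled queries.
    """
    n = features.shape[0]
    # Pairwise squared Euclidean distances, turned into Gaussian affinities.
    d2 = ((features[:, None, :] - features[None, :, :]) ** 2).sum(-1)
    w = np.exp(-d2 / (2.0 * sigma ** 2))
    np.fill_diagonal(w, 0.0)
    # Keep the k strongest neighbours of each row, then symmetrise the graph.
    idx = np.argsort(-w, axis=1)[:, :k]
    mask = np.zeros((n, n), dtype=bool)
    mask[np.arange(n)[:, None], idx] = True
    w = np.where(mask | mask.T, w, 0.0)
    # Symmetric normalisation: S = D^(-1/2) W D^(-1/2).
    d_inv_sqrt = 1.0 / np.sqrt(w.sum(axis=1) + 1e-12)
    s = d_inv_sqrt[:, None] * w * d_inv_sqrt[None, :]
    # One-hot labels for the support rows, zeros for the queries.
    y = np.zeros((n, n_classes))
    y[np.arange(len(support_labels)), support_labels] = 1.0
    # Closed-form propagation: solve (I - alpha * S) F = Y.
    f = np.linalg.solve(np.eye(n) - alpha * s, y)
    return f.argmax(axis=1)


# Toy episode: two classes, one labeled sample each, three queries per class.
features = np.array([
    [0.0, 0.0], [10.0, 10.0],                   # support: class 0, class 1
    [0.5, 0.0], [0.0, 0.5], [0.5, 0.5],         # queries near class 0
    [10.5, 10.0], [10.0, 10.5], [10.5, 10.5],   # queries near class 1
])
predictions = propagate_labels(features, np.array([0, 1]), n_classes=2)
```

In this toy episode the two clusters form separate components of the graph, so each query inherits the label of the support sample in its own cluster. In a full pipeline, the rows of `features` would presumably be the fused vectors produced by a step such as `mix_modalities`, but how the patent connects these stages is not stated in the visible text.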
