Remote sensing scene classification method and device, terminal equipment and storage medium

A remote sensing scene classification technology, applied in the field of remote sensing images, that addresses the loss of shallow convolutional features.

Example 1

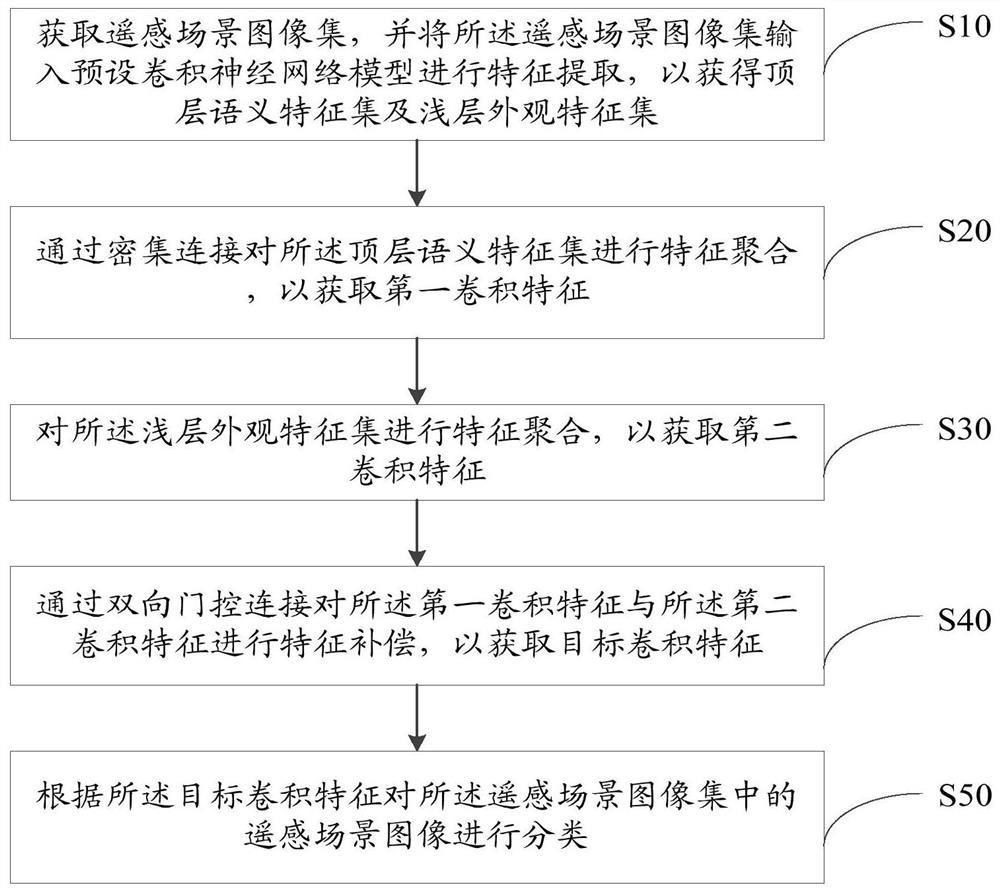

[0098] Refer to Figure 4, which is a schematic flowchart of the third embodiment of the remote sensing scene classification method of the present invention. Based on the first embodiment above, step S50 of the remote sensing scene classification method in this embodiment specifically includes:

[0099] Step S51: Perform feature merging of the target convolutional features and the global features output by the preset convolutional neural network model to obtain target classification features.

[0100] The remote sensing image set is input into the preset convolutional neural network model to obtain the output global features. The global features are then merged with the target convolutional features, i.e., the target convolutional features compensate the global features.
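
The merging in step S51 can be illustrated with a minimal sketch. The text does not state how the two feature sets are combined, so this example assumes channel-wise concatenation after global average pooling and L2 normalisation; the function name `merge_features`, the array shapes, and the normalisation step are all hypothetical and chosen only for illustration.

```python
import numpy as np

def merge_features(global_feat, conv_feat):
    """Merge a global feature vector with a convolutional feature map.

    Assumption: merging is done by concatenation; the source only says
    the features are "merged".
    """
    # conv_feat has shape (batch, channels, height, width);
    # global average pooling reduces it to (batch, channels).
    pooled = conv_feat.mean(axis=(2, 3))

    def l2norm(x):
        # Scale each row to unit length so neither part dominates.
        return x / (np.linalg.norm(x, axis=1, keepdims=True) + 1e-12)

    # Concatenate along the feature axis to form the classification feature.
    return np.concatenate([l2norm(global_feat), l2norm(pooled)], axis=1)

rng = np.random.default_rng(0)
global_feat = rng.standard_normal((4, 512))     # hypothetical global features
conv_feat = rng.standard_normal((4, 256, 7, 7))  # hypothetical target convolutional features
merged = merge_features(global_feat, conv_feat)
print(merged.shape)  # (4, 768)
```

Concatenation keeps both feature sets intact, which is one plausible way for shallower convolutional information to compensate the global features; element-wise addition or a learned fusion layer would be alternatives if the dimensions match.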

[0101] Step S52: Obtain a feature vector of the target classification features, and obtain the number of target categories according to the feature vector.

[0102] In...
