Target tracking method based on deep learning and discriminant model training and memory

A target tracking and model training technology, applied in the fields of computer vision and pattern recognition. It addresses problems that hinder improvement in the accuracy of target tracking algorithms, and achieves the effects of alleviating the imbalance between positive and negative samples, efficient offline network training, and an efficient solution.

Embodiment Construction

[0051] Preferred embodiments of the present invention are described below with reference to the accompanying drawings. Those skilled in the art should understand that these embodiments are only used to explain the technical principle of the present invention, and are not intended to limit the protection scope of the present invention.

[0052] It should be noted that, in the description of the present invention, the terms "first" and "second" are used only for convenience of description and do not indicate or imply the relative importance of the devices, elements, or parameters. They therefore should not be construed as limiting the present invention.

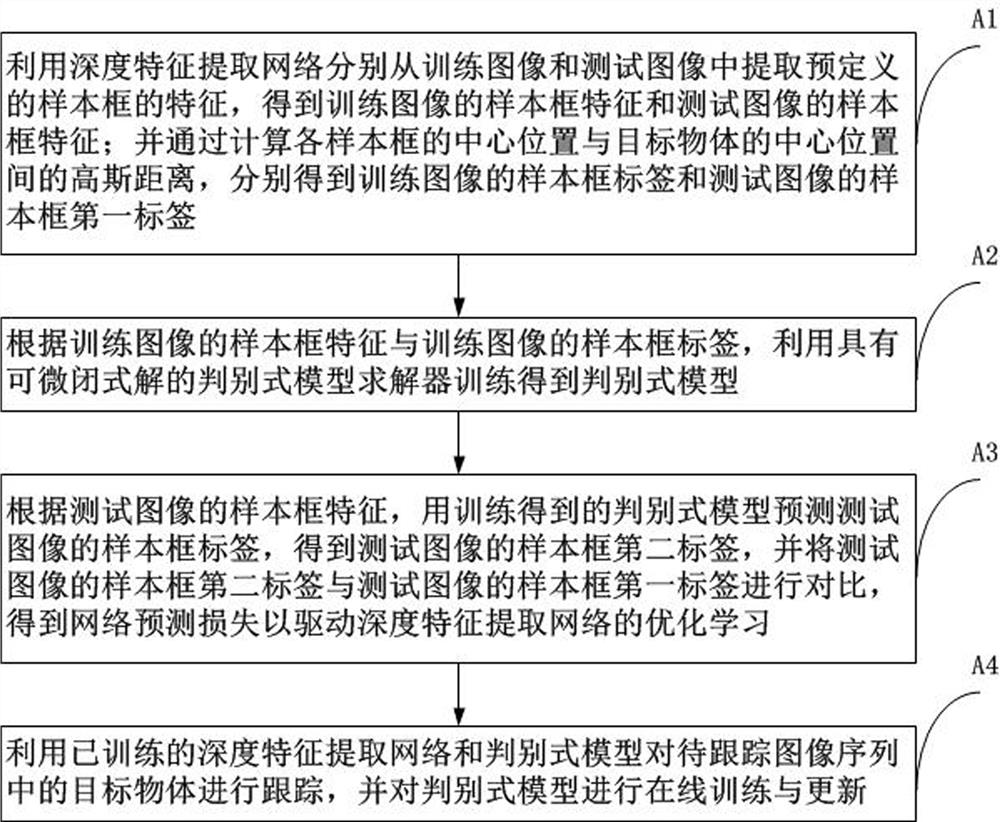

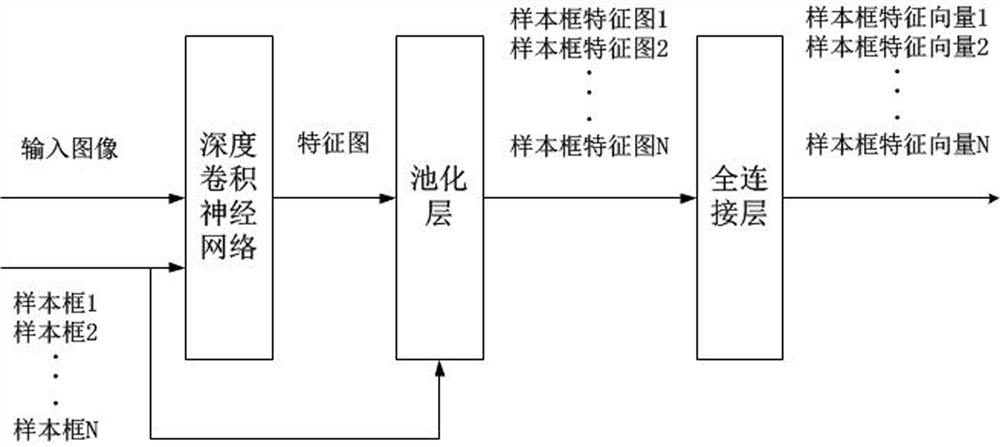

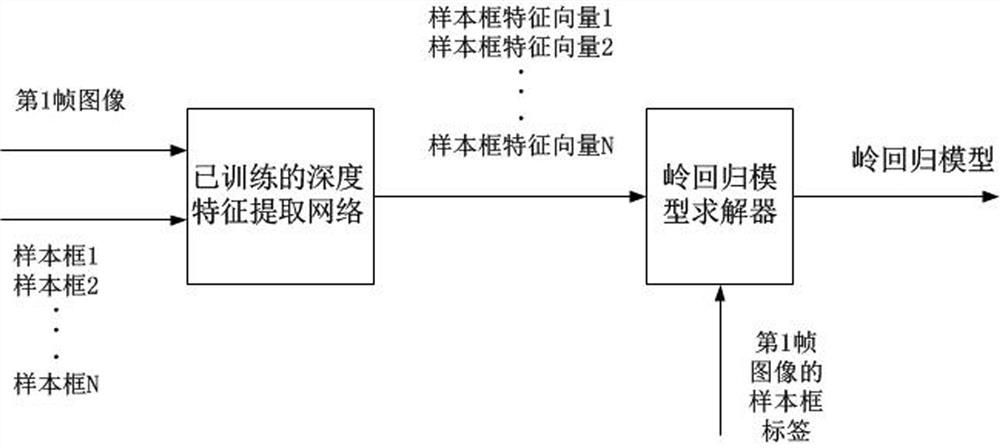

[0053] Figure 1 is a schematic diagram of the main steps of an embodiment of the target tracking method based on deep learning and discriminant model training of the present invention. As shown in Figure 1, the target tracking method of this embodiment includes: an offline training phase an...
