Allocation of memory

A memory allocation technique in the fields of memory architecture access/allocation, resource allocation, and memory systems. It addresses conflicts that degrade the performance of a processing system.

Embodiment Construction

[0042] The following description is presented by way of example to enable any person skilled in the art to make and use the invention. The invention is not limited to the embodiments described herein, and various modifications to the disclosed embodiments will be apparent to those skilled in the art.

[0043] Embodiments will now be described by way of example only.

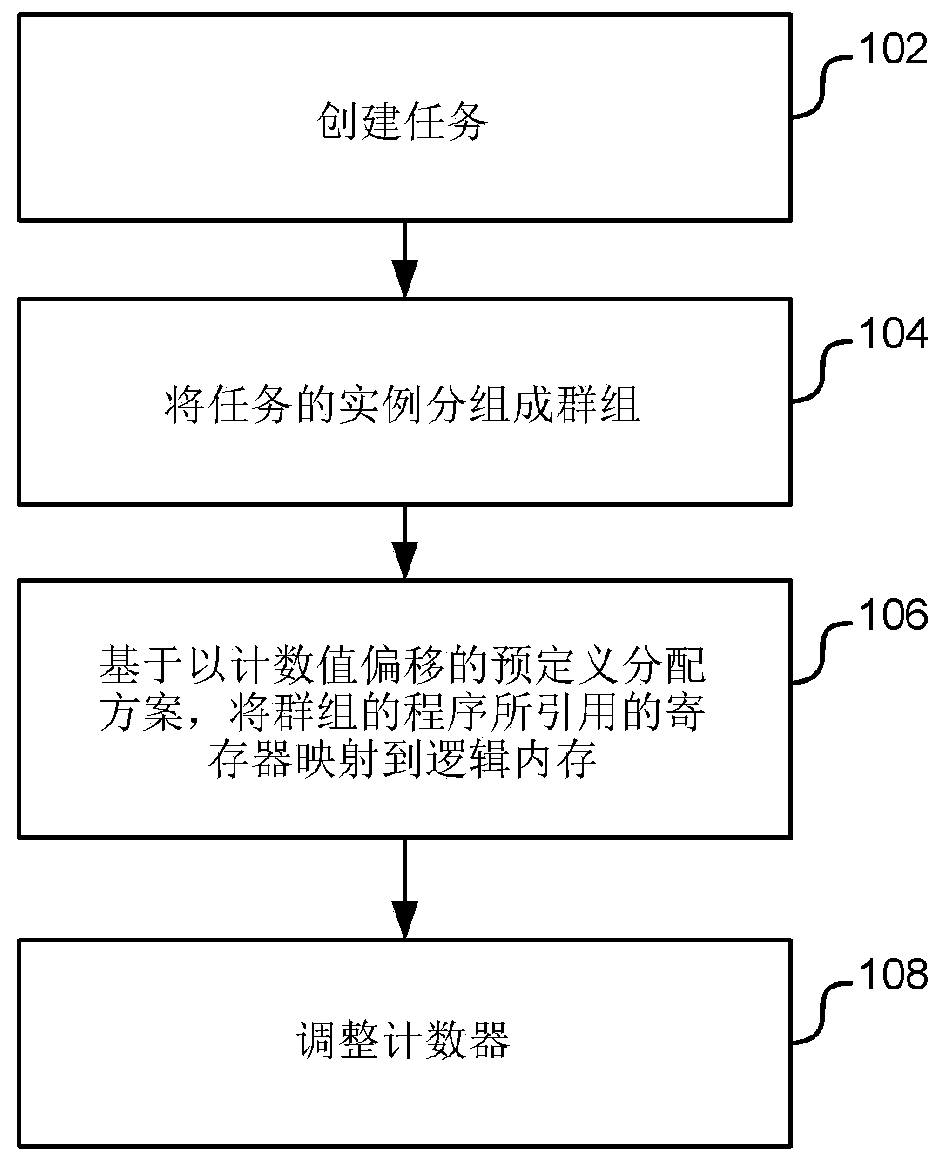

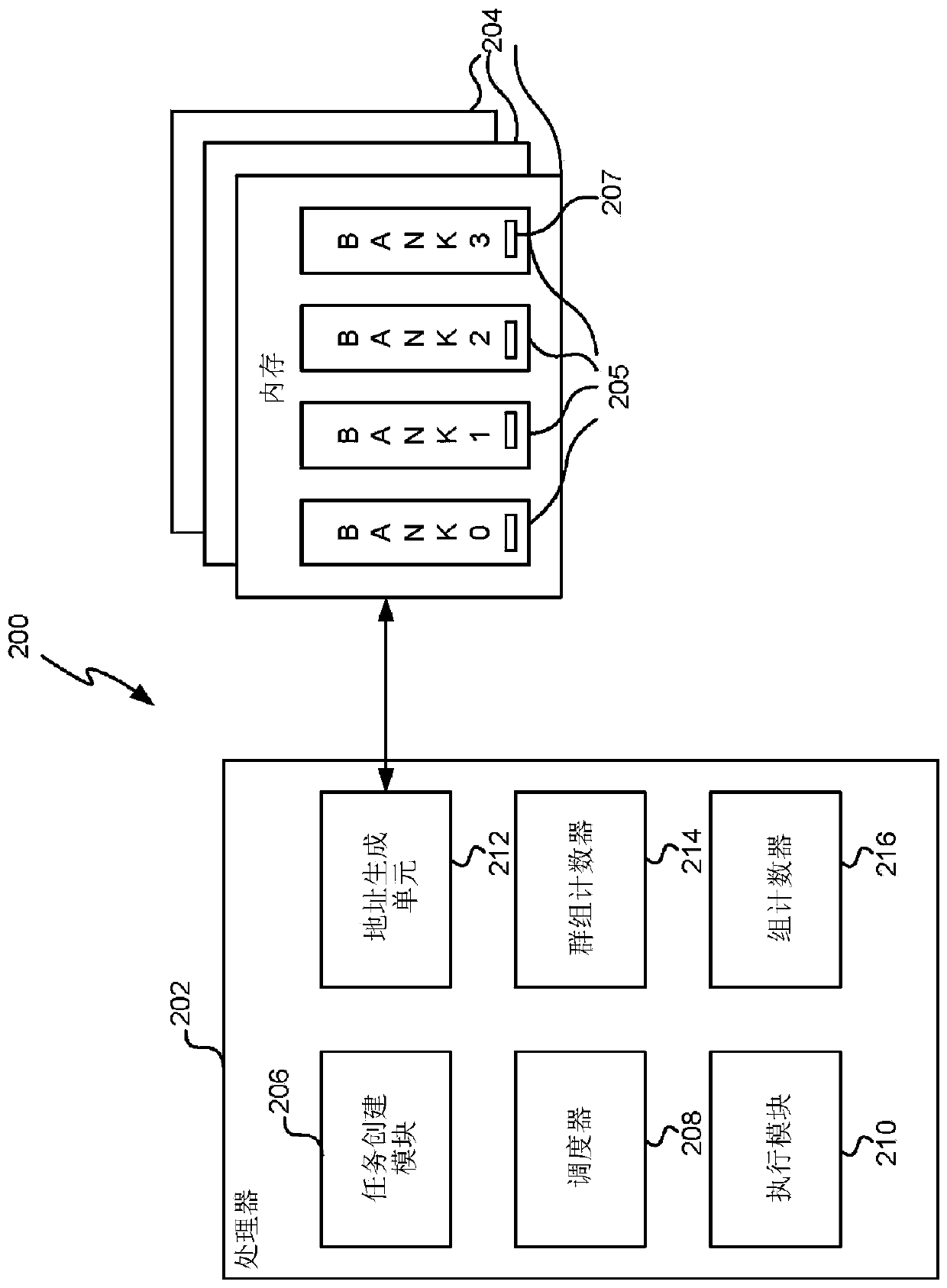

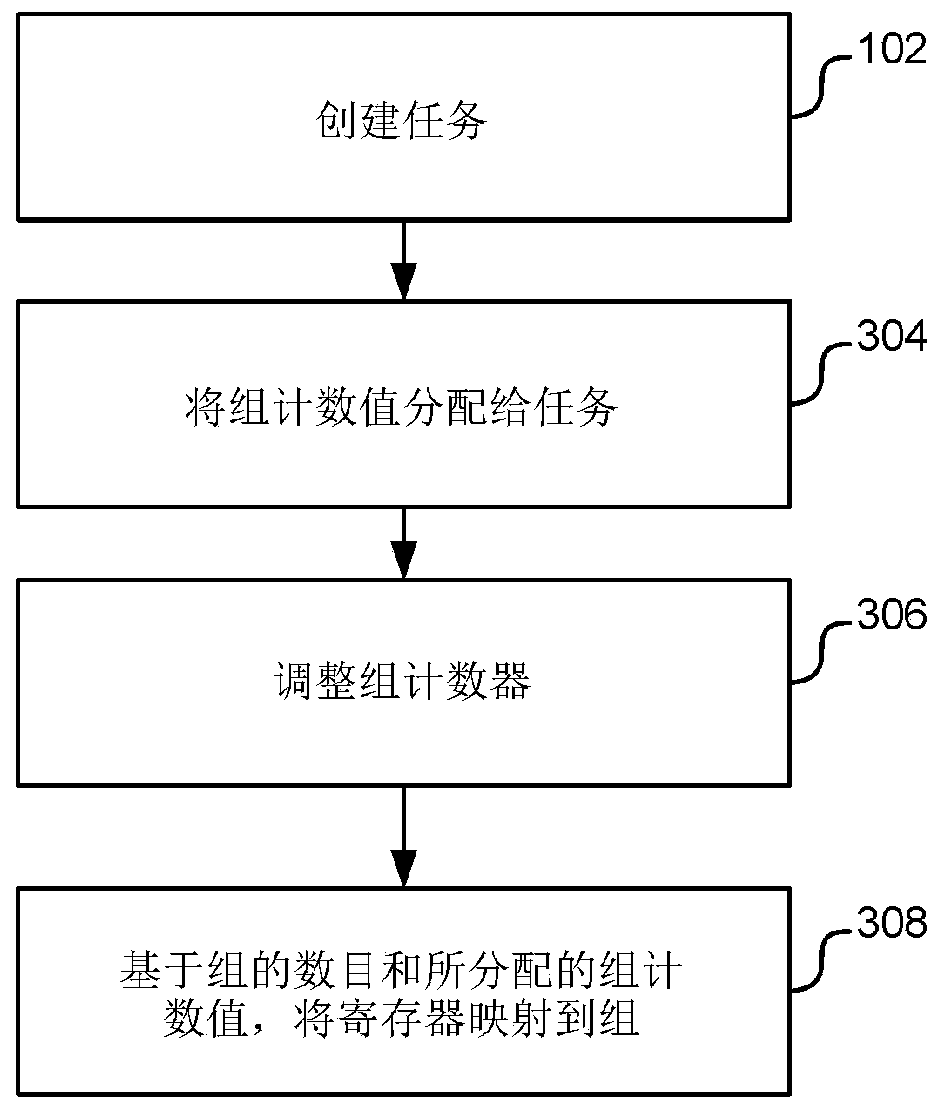

[0044] As described above, a processing system (e.g., a system including a CPU or GPU and memory) may include multiple banks within the memory. An executed instruction (e.g., a read or write instruction) typically does not refer to any particular bank (called a group below), but only to a register number, e.g., read r0, where r0 refers to register 0. In known processing systems, an address generation unit maps register numbers to groups within memory based on a defined formula (or relation) such as:

[0045] (group number) = (register number) mod (number of groups)

[0046] (Equation 1)
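
By way of illustration only, the mapping of Equation 1 can be sketched in a few lines of Python. The function names `group_for_register` and `registers_by_group` are hypothetical and do not appear in the description. The sketch also assumes that a conflict arises when two registers used together map to the same group, which is an interpretation rather than a statement taken from the text.

```python
from collections import defaultdict


def group_for_register(register_number: int, num_groups: int) -> int:
    """Apply Equation 1: (group number) = (register number) mod (number of groups)."""
    if num_groups <= 0:
        raise ValueError("num_groups must be positive")
    if register_number < 0:
        raise ValueError("register_number must be non-negative")
    return register_number % num_groups


def registers_by_group(register_numbers, num_groups):
    """Collect register numbers under the group that Equation 1 assigns them to.

    Assumption for illustration: a group holding more than one of the
    listed registers indicates a potential conflict.
    """
    groups = defaultdict(list)
    for reg in register_numbers:
        groups[group_for_register(reg, num_groups)].append(reg)
    return dict(groups)


if __name__ == "__main__":
    # With 4 groups, r0 and r4 land in the same group, while r1 does not.
    print(registers_by_group([0, 1, 4], num_groups=4))
```

In this sketch, registers whose numbers differ by a multiple of the number of groups always share a group, which is the kind of fixed mapping the known systems described above rely on.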

[0047] And the address decoding logic within each grou...
