Semantic segmentation-based method for detecting scene text of arbitrary shape

A technique for detecting text of arbitrary shape, applied in the field of computer vision. It addresses the inability of existing methods to accurately locate text of arbitrary shape and their low detection accuracy, and achieves fast speed, strong generalization ability, and high detection accuracy.


Embodiment approach

[0046] As a preferred embodiment of the present invention, the scene text detection network model in step S1 is constructed by the following steps:

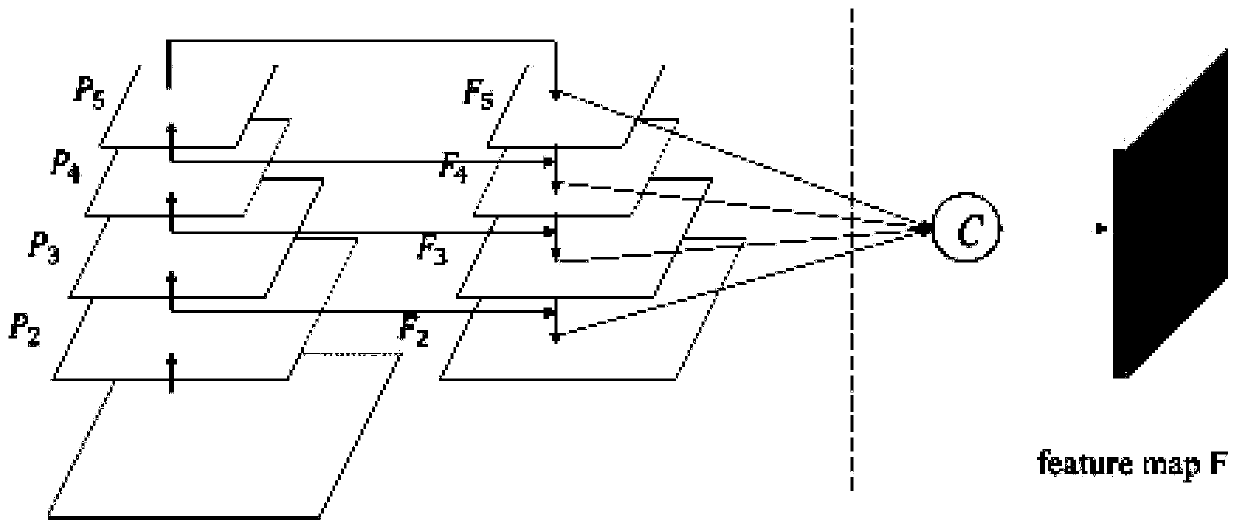

[0047] S101: a feature pyramid network is used for feature extraction and multi-feature fusion. Referring to Figure 2, the feature pyramid network is built on a deep residual convolutional neural network and consists of a bottom-up pathway, a top-down pathway, and lateral connections. The feature pyramid network model extracts and fuses low-level, high-resolution features and high-level, semantically rich features from the input dataset images. First, the training dataset images are input into the bottom-up structure of the feature pyramid network, that is, the forward pass of the network. During the forward pass, the size of the feature map changes after passing through some layers but remains unchanged when passing through others. The convolutional...
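The three structures named in step S101 (bottom-up pathway, top-down pathway, lateral connections) can be illustrated with a small dependency-free sketch. This is not the network of the present invention, whose remaining details are not given in the text above. It assumes the generic feature pyramid design: each coarser map is upsampled by a factor of 2 and added element-wise to the finer map at the same resolution. The function names `downsample2x`, `upsample2x`, `add_maps` and `build_pyramid` are hypothetical, feature maps are single-channel 2-D lists, 2x2 average pooling stands in for a residual stage, and the learned convolutions of a real network are omitted.

```python
# Illustrative sketch only: single-channel maps as 2-D lists, no learned weights.
# All function names are hypothetical and chosen for this example.

def downsample2x(fmap):
    """Bottom-up step: 2x2 average pooling (stand-in for a strided residual stage)."""
    h, w = len(fmap), len(fmap[0])
    return [
        [
            (fmap[2 * i][2 * j] + fmap[2 * i][2 * j + 1]
             + fmap[2 * i + 1][2 * j] + fmap[2 * i + 1][2 * j + 1]) / 4.0
            for j in range(w // 2)
        ]
        for i in range(h // 2)
    ]


def upsample2x(fmap):
    """Top-down step: nearest-neighbour upsampling by a factor of 2."""
    out = []
    for row in fmap:
        wide = [v for v in row for _ in range(2)]  # repeat each column twice
        out.append(wide)
        out.append(list(wide))                     # repeat each row twice
    return out


def add_maps(a, b):
    """Lateral connection: element-wise sum of two maps of equal size."""
    return [[x + y for x, y in zip(ra, rb)] for ra, rb in zip(a, b)]


def build_pyramid(image, levels=3):
    """Return (bottom_up, fused), each ordered from finest to coarsest level."""
    # Bottom-up pathway: resolution halves at each stage.
    bottom_up = [image]
    for _ in range(levels - 1):
        bottom_up.append(downsample2x(bottom_up[-1]))

    # Top-down pathway: start at the coarsest map and merge finer maps in turn.
    fused = [bottom_up[-1]]
    for lateral in reversed(bottom_up[:-1]):
        fused.append(add_maps(upsample2x(fused[-1]), lateral))
    fused.reverse()
    return bottom_up, fused


# Toy 8x8 input whose pixel value encodes its position.
image = [[float(r * 8 + c) for c in range(8)] for r in range(8)]
bottom_up, fused = build_pyramid(image, levels=3)
shapes = [(len(m), len(m[0])) for m in fused]
print(shapes)
```

Each fused level keeps the resolution of its bottom-up counterpart while also carrying information propagated down from the coarser levels, which is the sense in which low-level high-resolution features and high-level semantic features are combined.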
