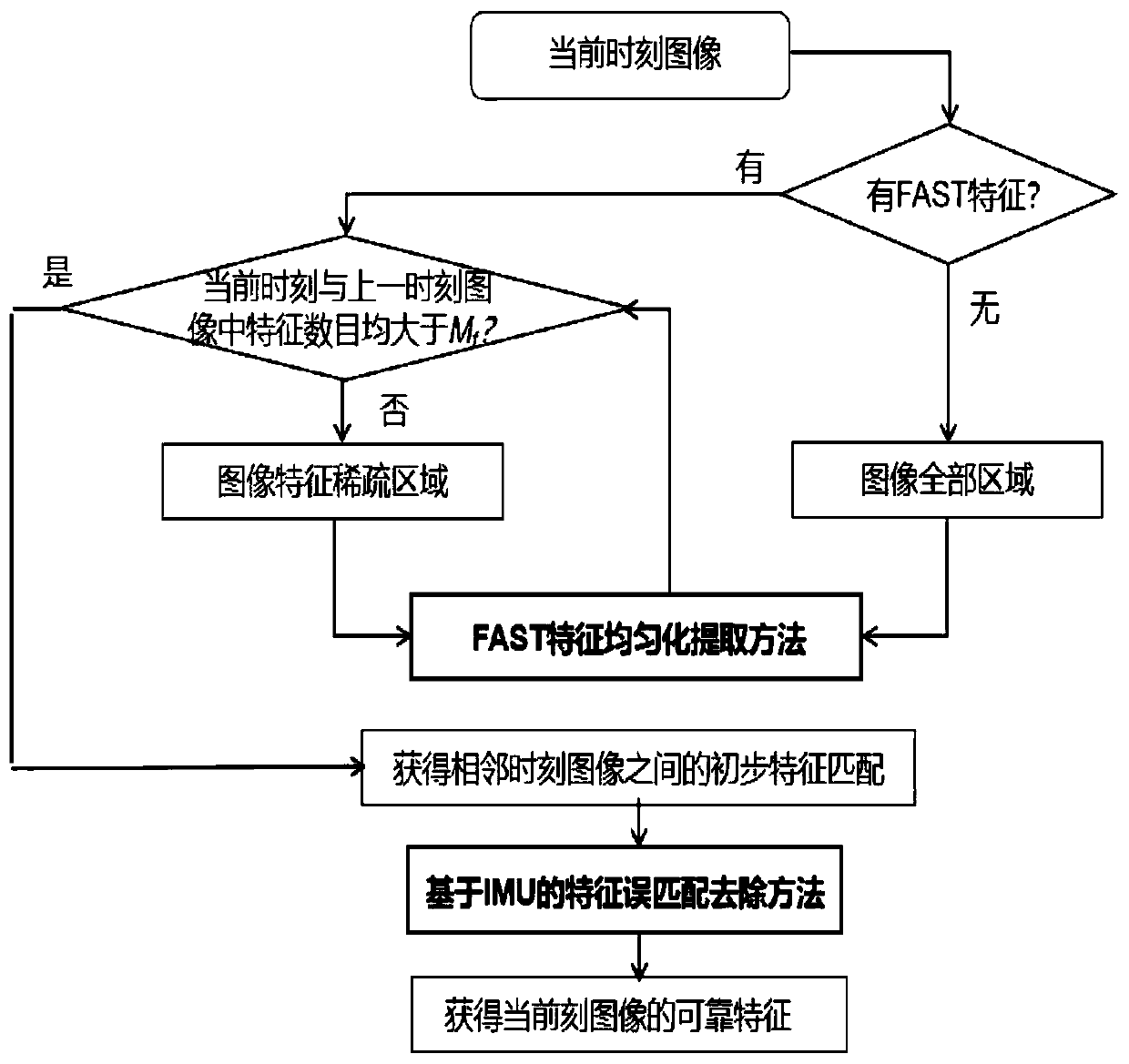

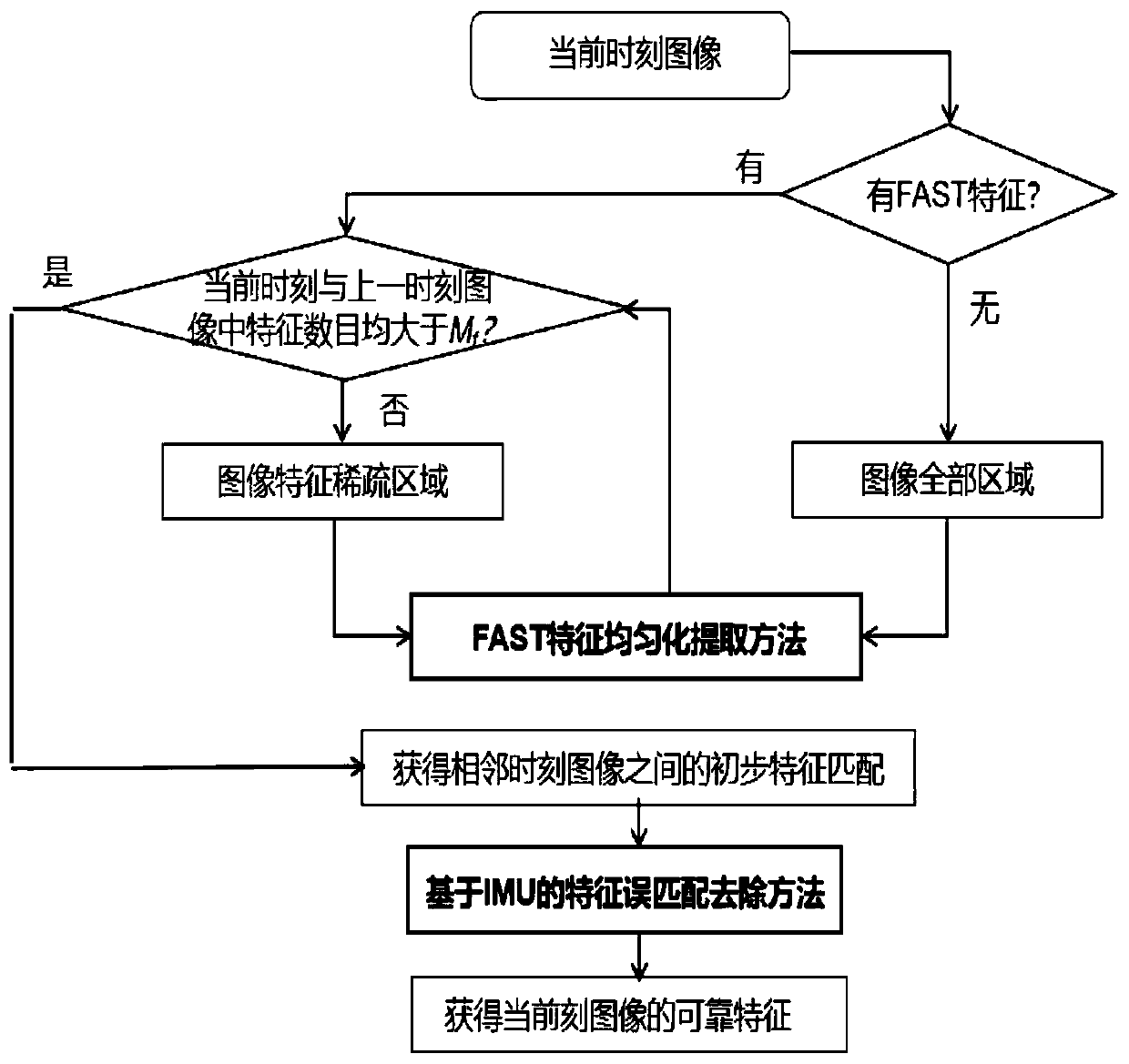

FAST feature homogenization extraction and IMU-based inter-frame feature mismatching removal method

An extraction method and feature extraction technology, applied in the fields of image processing and computer vision. It addresses problems such as poor usability of the extracted features, the inability to accurately identify feature mismatches, and the resulting loss of feature-matching accuracy.
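The title names homogenized FAST feature extraction, but this page does not describe the procedure. As a minimal sketch, assume corners have already been detected by some FAST detector and are given as hypothetical `(x, y, response)` tuples. Grid bucketing, one common homogenization technique, then keeps only the strongest corners in each cell so that features spread evenly across the image. The function name `homogenize_keypoints` and the `cell_size`/`per_cell` parameters are illustrative assumptions, not the patented method.

```python
from typing import Dict, List, Tuple

# Hypothetical corner format: (x, y, response) as a FAST detector might report it.
Keypoint = Tuple[float, float, float]


def homogenize_keypoints(
    keypoints: List[Keypoint],
    width: int,
    height: int,
    cell_size: int = 32,
    per_cell: int = 1,
) -> List[Keypoint]:
    """Keep at most `per_cell` strongest corners in each grid cell.

    Grid bucketing is an illustrative stand-in for homogenization,
    not the procedure claimed in the patent.
    """
    buckets: Dict[Tuple[int, int], List[Keypoint]] = {}
    for kp in keypoints:
        x, y, _ = kp
        if not (0 <= x < width and 0 <= y < height):
            continue  # ignore corners that fall outside the image
        cell = (int(x) // cell_size, int(y) // cell_size)
        buckets.setdefault(cell, []).append(kp)

    kept: List[Keypoint] = []
    for cell in sorted(buckets):  # deterministic row-major-by-cell order
        # Strongest FAST response first within each cell.
        best = sorted(buckets[cell], key=lambda kp: kp[2], reverse=True)
        kept.extend(best[:per_cell])
    return kept


# Usage: two corners share the top-left cell, so only the stronger one survives;
# the corner at (70, 70) lies outside a 64x64 image and is dropped.
corners = [(5.0, 5.0, 10.0), (10.0, 12.0, 30.0), (40.0, 8.0, 5.0), (70.0, 70.0, 2.0)]
print(homogenize_keypoints(corners, 64, 64, cell_size=32))
# [(10.0, 12.0, 30.0), (40.0, 8.0, 5.0)]
```

Capping the count per cell trades some strong but clustered corners for better spatial coverage, which tends to make subsequent pose estimation better conditioned.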

Embodiment

[0100] An important application field of the method of the present invention includes, but is not limited to, monocular visual-inertial SLAM. Its working principle is as follows: the system takes the images acquired by the monocular camera and the acceleration and angular velocity measured by the IMU sensor, performs a series of calculations, and outputs in real time the translation and rotation of the system in world coordinates. As an important part of image data processing, the method of the present invention is responsible for extracting feature points from each image and outputting the feature matches between adjacent images for subsequent calculations.
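The IMU-based mismatch removal is not detailed on this page. One common way to use an IMU for this, assumed here purely for illustration: integrate the gyroscope's angular rates between two frames into a rotation $R_{12}$, predict where each previous-frame feature should appear under that rotation via $p_2 \sim K R_{21} K^{-1} p_1$ with $R_{21} = R_{12}^\top$, and reject matches that land too far from the prediction. The sketch assumes a pinhole camera whose axes coincide with the IMU axes (identity extrinsics), a fixed gyro sample spacing `dt`, no gyro bias, and inter-frame translation that is negligible relative to scene depth. All function names and the `max_err_px` threshold are hypothetical.

```python
import math
from typing import List, Optional, Sequence, Tuple

Mat3 = List[List[float]]
Vec3 = Tuple[float, float, float]
Pixel = Tuple[float, float]
Intrinsics = Tuple[float, float, float, float]  # (fx, fy, cx, cy), pinhole model (assumed)


def identity3() -> Mat3:
    return [[1.0 if i == j else 0.0 for j in range(3)] for i in range(3)]


def matmul3(a: Mat3, b: Mat3) -> Mat3:
    return [[sum(a[i][k] * b[k][j] for k in range(3)) for j in range(3)] for i in range(3)]


def transpose3(a: Mat3) -> Mat3:
    return [[a[j][i] for j in range(3)] for i in range(3)]


def so3_exp(w: Vec3) -> Mat3:
    """Rodrigues' formula: rotation matrix for the rotation vector w (radians)."""
    theta = math.sqrt(w[0] ** 2 + w[1] ** 2 + w[2] ** 2)
    if theta < 1e-12:
        return identity3()
    kx, ky, kz = w[0] / theta, w[1] / theta, w[2] / theta
    k = [[0.0, -kz, ky], [kz, 0.0, -kx], [-ky, kx, 0.0]]  # skew matrix of the unit axis
    k2 = matmul3(k, k)
    s, c = math.sin(theta), 1.0 - math.cos(theta)
    eye = identity3()
    return [[eye[i][j] + s * k[i][j] + c * k2[i][j] for j in range(3)] for i in range(3)]


def integrate_gyro(samples: Sequence[Vec3], dt: float) -> Mat3:
    """R_12 (frame-2 axes expressed in frame 1) from body-frame angular rates, bias ignored."""
    r = identity3()
    for wx, wy, wz in samples:
        r = matmul3(r, so3_exp((wx * dt, wy * dt, wz * dt)))
    return r


def predict_pixel(p: Pixel, r21: Mat3, intr: Intrinsics) -> Optional[Pixel]:
    """Rotation-only prediction p2 ~ K R_21 K^-1 p1; None if the ray ends up behind the camera."""
    fx, fy, cx, cy = intr
    f1 = ((p[0] - cx) / fx, (p[1] - cy) / fy, 1.0)  # back-projected bearing in frame 1
    f2 = [sum(r21[i][k] * f1[k] for k in range(3)) for i in range(3)]
    if f2[2] <= 1e-9:
        return None
    return (fx * f2[0] / f2[2] + cx, fy * f2[1] / f2[2] + cy)


def filter_matches(
    matches: Sequence[Tuple[Pixel, Pixel]],
    gyro_samples: Sequence[Vec3],
    dt: float,
    intr: Intrinsics,
    max_err_px: float = 10.0,
) -> List[bool]:
    """Flag each (p1, p2) match as kept (True) or rejected as a likely mismatch (False)."""
    r21 = transpose3(integrate_gyro(gyro_samples, dt))  # R_21 = R_12^T
    keep: List[bool] = []
    for p1, p2 in matches:
        pred = predict_pixel(p1, r21, intr)
        if pred is None:
            keep.append(False)
            continue
        keep.append(math.hypot(pred[0] - p2[0], pred[1] - p2[1]) <= max_err_px)
    return keep


# Usage: 10 gyro samples at 100 Hz give a 0.01 rad rotation about the camera y-axis,
# which shifts the image centre by about 500 * tan(0.01) = 5 px to the left.
intr = (500.0, 500.0, 320.0, 240.0)
gyro = [(0.0, 0.1, 0.0)] * 10
matches = [((320.0, 240.0), (315.0, 240.0)), ((320.0, 240.0), (340.0, 240.0))]
print(filter_matches(matches, gyro, 0.01, intr, max_err_px=3.0))  # [True, False]
```

Because only rotation is predicted, the threshold must absorb parallax from translation and gyro noise; a real system would tune it or add a translation prior from the accelerometer.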

© 2025 PatSnap. All rights reserved.