Visual/inertial integrated navigation method based on online calibration of camera internal parameters

A visual/inertial integrated navigation technique, applied to navigation and surveying by means of speed/acceleration measurement, that addresses the problem of reduced navigation system accuracy.


Embodiment Construction

[0058] The technical solutions of the present invention will be described in detail below in conjunction with the accompanying drawings.

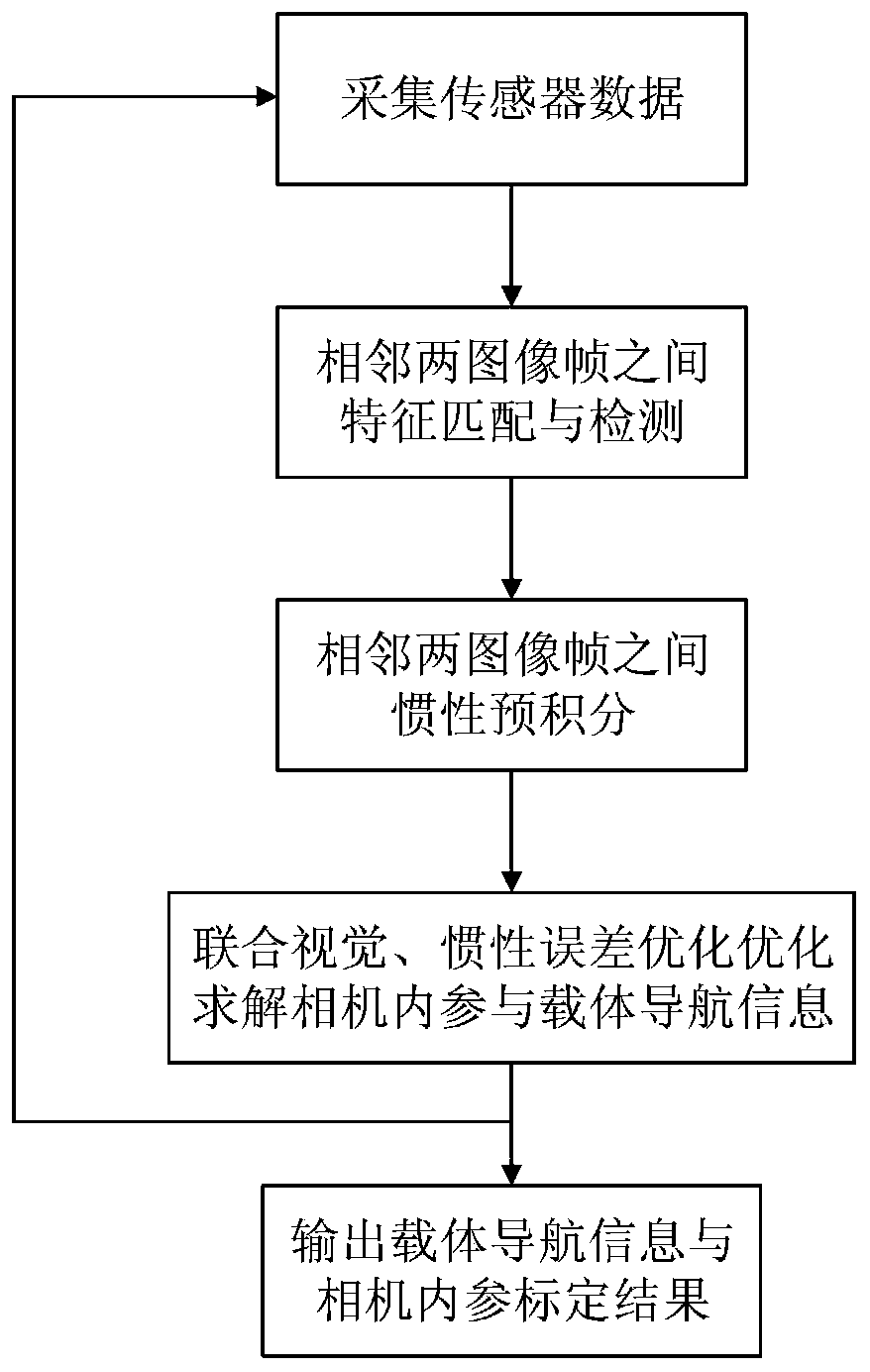

[0059] The present invention provides a visual/inertial integrated navigation method based on online calibration of the camera internal parameters. As shown in Figure 1, the steps are as follows:

[0060] Step 1: Collect the visual sensor data S(k), the accelerometer data, and the gyroscope data at time k;

[0061] Step 2: Use the visual sensor data S(k) to perform feature detection and matching between two adjacent image frames;

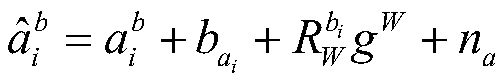

[0062] Step 3: Use the inertial sensor data (accelerometer and gyroscope) to perform pre-integration between two adjacent image frames;

[0063] Step 4: Combine the visual reprojection error and the inertial pre-integration error in a joint optimization to solve for the carrier navigation information and the camera internal parameters;

[0064] Step 5: Output carrier navigation information and camera internal parameters, and return to step 1.
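
The two error sources named in Steps 3 and 4 can be illustrated with a minimal sketch. It is not the formulation used in this patent, whose equations are not reproduced here. It assumes a distortion-free pinhole camera whose internal parameters are `(fx, fy, cx, cy)`, a fixed IMU sample interval `dt`, and a standard discrete pre-integration of rotation, velocity, and position increments. All function and variable names are hypothetical.

```python
import numpy as np

def skew(w):
    """Skew-symmetric matrix of a 3-vector."""
    return np.array([[0.0, -w[2], w[1]],
                     [w[2], 0.0, -w[0]],
                     [-w[1], w[0], 0.0]])

def so3_exp(phi):
    """Rodrigues formula: rotation vector -> rotation matrix."""
    theta = np.linalg.norm(phi)
    K = skew(phi)
    if theta < 1e-8:
        return np.eye(3) + K  # first-order approximation for tiny angles
    return (np.eye(3) + np.sin(theta) / theta * K
            + (1.0 - np.cos(theta)) / theta**2 * (K @ K))

def preintegrate(acc, gyro, dt, ba=np.zeros(3), bg=np.zeros(3)):
    """Accumulate IMU samples between two image frames (Step 3).

    Returns the relative rotation dR, velocity increment dv, and position
    increment dp, all expressed in the body frame of the first image frame.
    Gravity is not included; it would enter the residual in the optimizer.
    """
    dR, dv, dp = np.eye(3), np.zeros(3), np.zeros(3)
    for a, w in zip(acc, gyro):
        a_c = a - ba                       # bias-corrected specific force
        dp = dp + dv * dt + 0.5 * (dR @ a_c) * dt**2
        dv = dv + (dR @ a_c) * dt
        dR = dR @ so3_exp((w - bg) * dt)   # bias-corrected angular rate
    return dR, dv, dp

def reprojection_residual(intr, R_cw, t_cw, point_w, pixel):
    """Pixel error of one landmark (Step 4).

    intr = (fx, fy, cx, cy) is treated as an unknown, so the optimizer can
    refine the camera internal parameters together with the pose.
    """
    fx, fy, cx, cy = intr
    pc = R_cw @ point_w + t_cw             # landmark in the camera frame
    u = fx * pc[0] / pc[2] + cx
    v = fy * pc[1] / pc[2] + cy
    return np.array([u, v]) - pixel
```

In a full system, both residuals would be stacked into one nonlinear least-squares cost and minimized over the carrier states and `intr` at each new frame; the solver, weighting, and any distortion terms are left out of this sketch.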

[0065] In this embodiment, ...
