Multi-appearance model fusion target tracking method and device based on sparse representation

An appearance model and target tracking technology, applied in the field of target tracking, that addresses problems such as tracking failure while achieving high update efficiency, improving adaptability, and reducing the amount of computation.



Examples

Embodiment 1

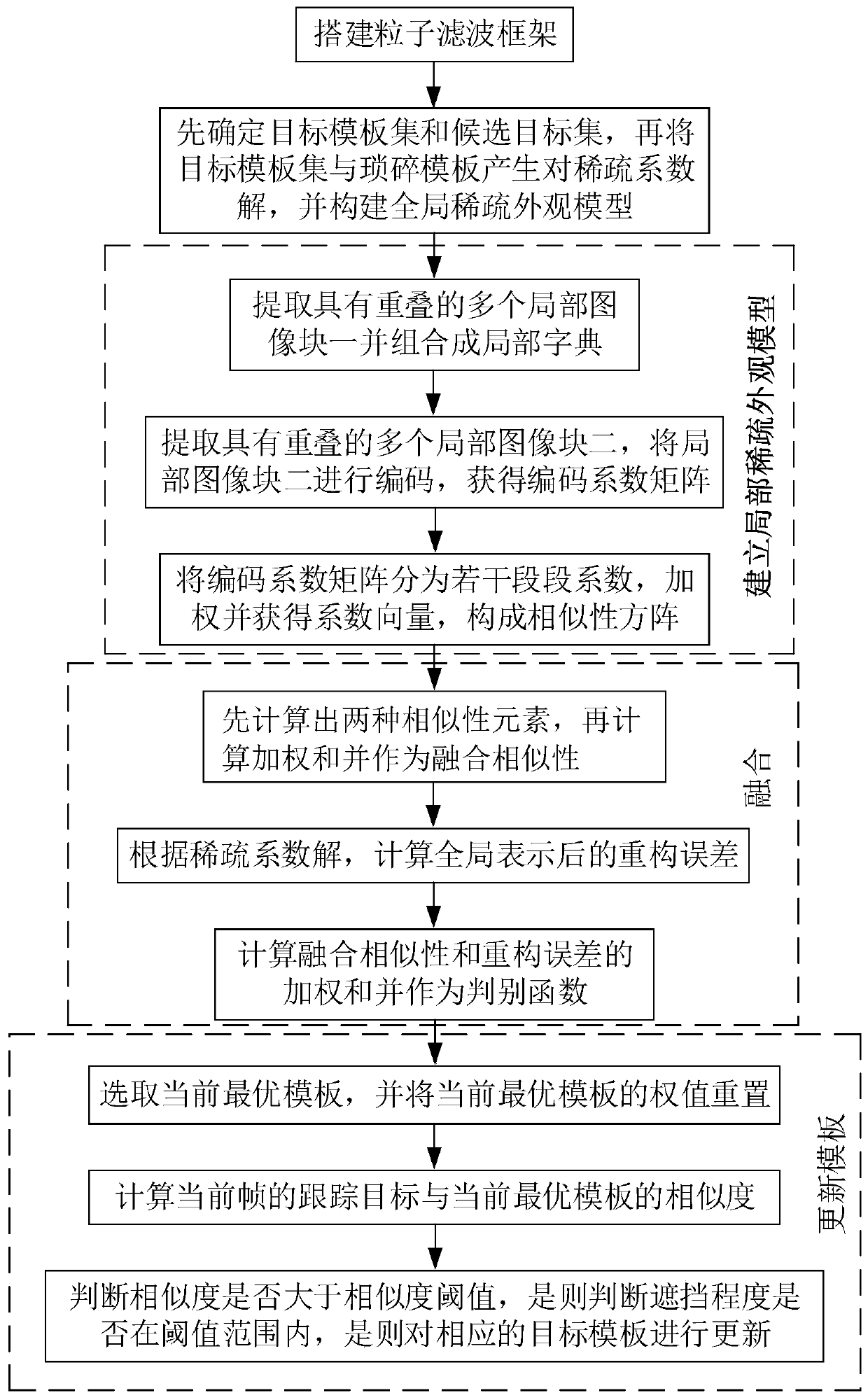

[0071] Referring to Figure 1, the present invention provides a multi-appearance model fusion target tracking method based on sparse representation. The method is used to track the tracking target in the tracking image. The target tracking method of this embodiment includes the following steps (1)-(5).

[0072] (1) Build a particle filter framework, and determine the state of the tracking target through multiple affine parameters so as to build a motion model for the state transition of the tracking target. In this embodiment, the particle filter in the particle filter framework essentially implements recursive Bayesian estimation through non-parametric Monte Carlo simulation; that is, a set of random samples with corresponding weights is used to approximate the posterior probability density of the system state. Particle filtering generally proceeds as follows: ① Initial state: simulate X(t) with a large number of particles, and the particles are uniformly ...
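The recursion described above (propagate weighted samples, reweight them, and resample) can be illustrated with a minimal sketch. It is not the patented implementation: the six-element state vector, the Gaussian random-walk motion model, the multinomial resampling, and all function names are assumptions made for illustration, and the `likelihood` callable stands in for whatever appearance model scores a candidate state.

```python
import random


def propagate(particles, sigmas, rng):
    """Assumed motion model: add zero-mean Gaussian noise to each affine parameter."""
    return [[p + rng.gauss(0.0, s) for p, s in zip(state, sigmas)]
            for state in particles]


def normalize(weights):
    """Scale weights to sum to 1; fall back to uniform if every weight is zero."""
    total = sum(weights)
    if total <= 0.0:
        return [1.0 / len(weights)] * len(weights)
    return [w / total for w in weights]


def resample(particles, weights, rng):
    """Multinomial resampling: draw particles with probability equal to their weight."""
    drawn = rng.choices(particles, weights=weights, k=len(particles))
    return [list(state) for state in drawn]


def estimate(particles, weights):
    """Weighted mean of the particle set, one value per state dimension."""
    dim = len(particles[0])
    return [sum(w * state[i] for state, w in zip(particles, weights))
            for i in range(dim)]


def step(particles, sigmas, likelihood, rng):
    """One filter iteration: propagate, weight, estimate, resample."""
    moved = propagate(particles, sigmas, rng)
    weights = normalize([likelihood(state) for state in moved])
    return estimate(moved, weights), resample(moved, weights, rng)
```

A caller would seed `rng = random.Random(0)`, create an initial particle list, and call `step` once per frame with a likelihood derived from the appearance models. The weighted mean is only one possible state estimate; taking the highest-weight particle is a common alternative.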

Embodiment 2

[0122] This embodiment provides a multi-appearance model fusion target tracking method based on sparse representation. Building on Embodiment 1, the method is evaluated through simulation experiments. (In other embodiments, simulation experiments may be omitted, and other experimental schemes may be used to determine the relevant parameters and the target tracking performance.)

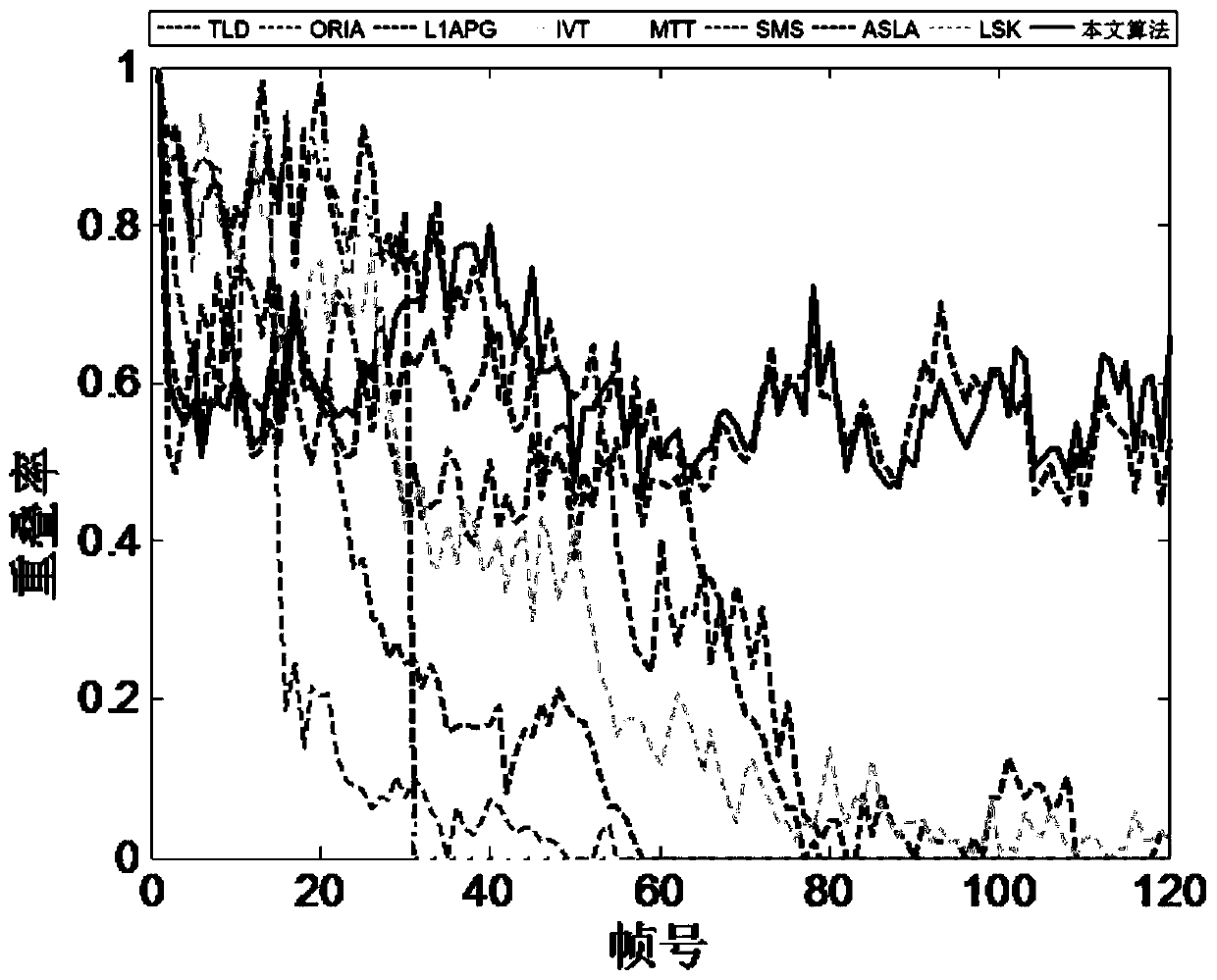

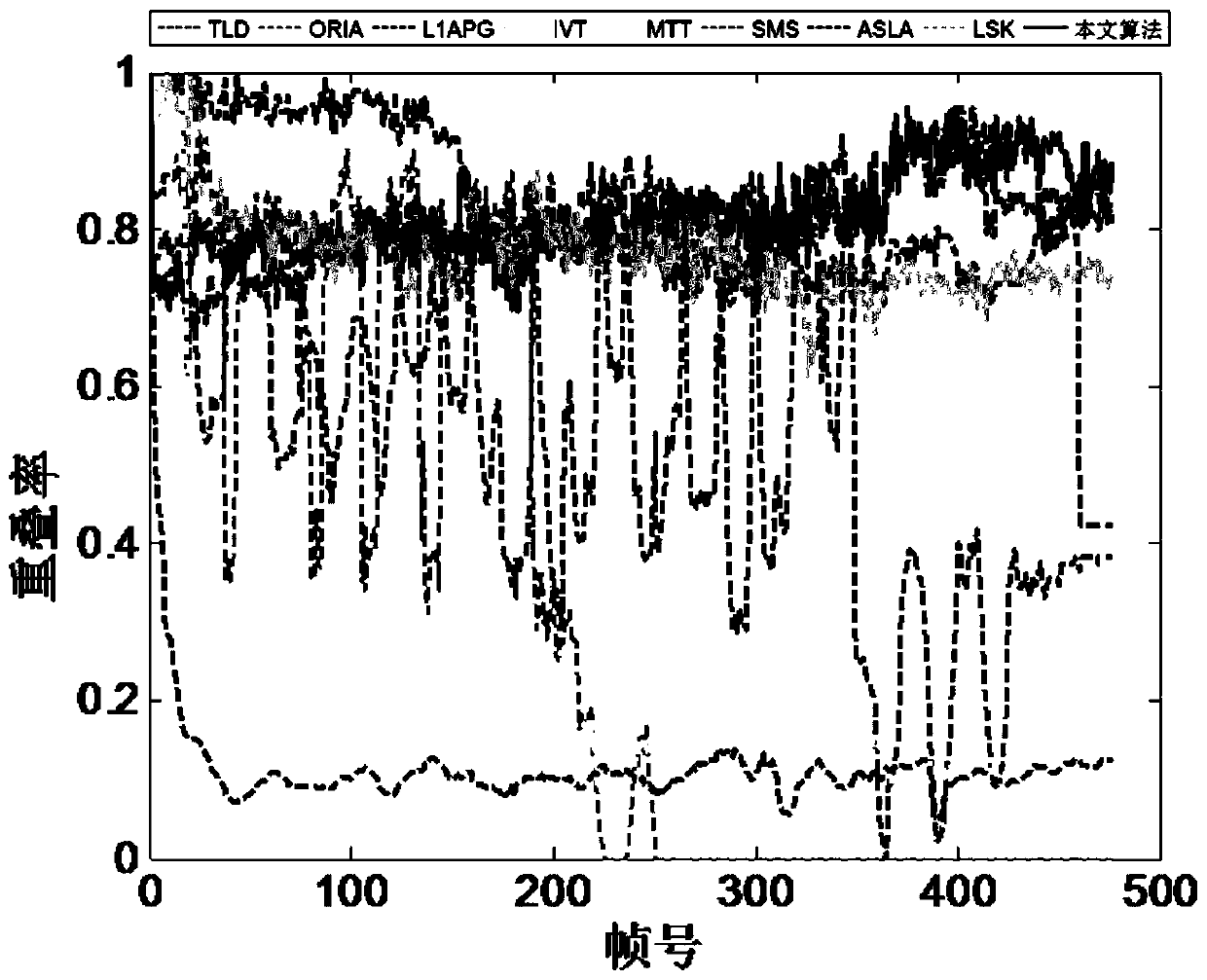

[0123] In this embodiment, simulation experiments on the tracking method are carried out on an Intel Core 3.2 GHz processor with 4 GB of memory, using the MATLAB 2010b platform. The tracking method runs under the particle filter framework with 600 particles. Each target image block is 32*32 pixels, and the local image blocks extracted from the target area are 16*16 pixels and 8*8 pixels. η1 is the weight coefficient of the 16*16 local blocks, and η2 is the weight coefficient of the 8*8 local blocks. We select 10 representative videos for experim...
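To make the block sizes above concrete, the following sketch cuts a 32*32 target block into 16*16 and 8*8 local patches. The source does not state the sampling stride, so the `stride` parameter and the function name `extract_patches` are assumptions; the weight coefficients η1 and η2 are not modelled here.

```python
def extract_patches(block, size, stride):
    """Slide a size x size window over a 2-D list and collect the patches.

    `stride` is a hypothetical parameter: the source gives patch sizes only.
    """
    height = len(block)
    width = len(block[0])
    patches = []
    for top in range(0, height - size + 1, stride):
        for left in range(0, width - size + 1, stride):
            patch = [row[left:left + size] for row in block[top:top + size]]
            patches.append(patch)
    return patches


# A dummy 32x32 target block filled with zeros, standing in for pixel data.
target_block = [[0] * 32 for _ in range(32)]

# Non-overlapping tiling (stride equal to patch size) is one possible choice.
coarse = extract_patches(target_block, size=16, stride=16)
fine = extract_patches(target_block, size=8, stride=8)
```

With non-overlapping tiling, a 32*32 block yields 4 patches of 16*16 and 16 patches of 8*8. A smaller stride gives overlapping patches and a larger local dictionary, at a higher computational cost.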

Embodiment 3

[0150] This embodiment provides a multi-appearance model fusion target tracking device based on sparse representation, which applies the sparse representation-based multi-appearance model fusion target tracking method of Embodiment 1 or Embodiment 2. The target tracking device of this embodiment includes a particle filter framework building module, a global sparse appearance model building module, a local sparse appearance model building module, a fusion module, and a template updating module.

[0151] The particle filter framework building module is used to build a particle filter framework, and to determine the state of the tracking target through multiple affine parameters so as to build a motion model for the state transition of the tracking target.

[0152] The global sparse appearance model building module is used in the particle filter framework to first determine the target template set and candidate target set of the tracking image, and then use the target template set and th...
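One way to picture how the five modules listed in [0150] could be wired together is the sketch below. The class name `TrackerDevice`, its field names, the call order, and the choice of the highest-scoring candidate are all assumptions for illustration; the source text shown here does not specify the interfaces between modules or the fusion rule.

```python
from dataclasses import dataclass
from typing import Callable, List

State = List[float]  # hypothetical: a vector of affine parameters


@dataclass
class TrackerDevice:
    """Hypothetical composition of the five modules named in the text."""
    propose: Callable[[State], List[State]]    # particle filter framework module
    global_score: Callable[[State], float]     # global sparse appearance model module
    local_score: Callable[[State], float]      # local sparse appearance model module
    fuse: Callable[[float, float], float]      # fusion module
    update_templates: Callable[[State], None]  # template updating module

    def step(self, previous: State) -> State:
        """Process one frame: propose candidates, score, fuse, pick, update."""
        candidates = self.propose(previous)
        scores = [self.fuse(self.global_score(c), self.local_score(c))
                  for c in candidates]
        # Assumption: the candidate with the highest fused score is the result.
        best = candidates[scores.index(max(scores))]
        self.update_templates(best)
        return best
```

Passing each module as a callable keeps the sketch independent of how the sparse coding is actually solved, so any of the five parts can be replaced without touching the others.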
