Robot rapid repositioning method and system based on visual dictionary

The invention relates to visual dictionary and robot technology, applied to radio wave measurement systems, instruments, computer components, and similar fields. It addresses the problem that a robot which loses its position cannot relocate itself, which makes the robot inconvenient to use. The method avoids manual intervention, is simple, and is robust.


Examples

Embodiment 1

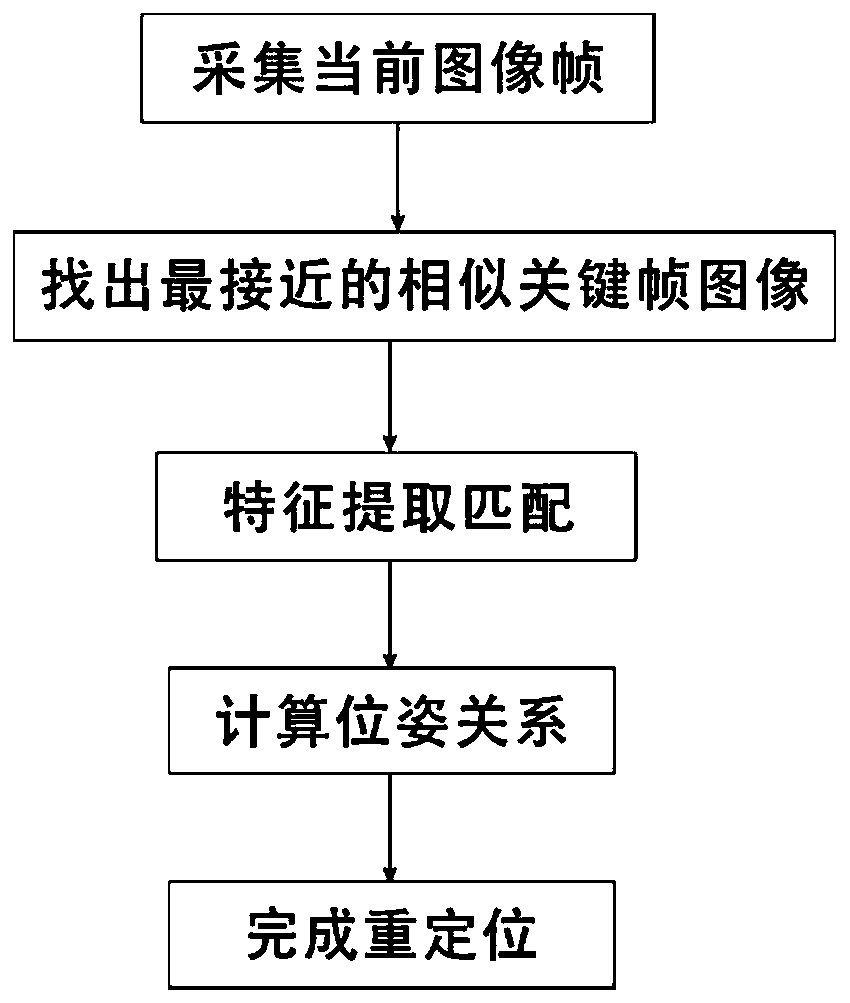

[0028] The first embodiment of the present invention provides a fast relocation method based on a visual dictionary. When localization with the laser radar sensor 200 fails and the robot loses its position, the image acquired by the image acquisition module 100 can be used to relocate the robot quickly. Specifically, referring to Figure 1, the method includes the following steps.

[0029] Step 1: Use the image acquisition module 100 to obtain the current image frame, compare it with the key frames stored in the visual map, and find the most similar key frame. In this step, the visual map is built with a visual SLAM algorithm. The image acquisition module 100 can be a camera that captures single-frame image information; it is mounted on the robot and moves with it. When the robot fails to locate, the image acquisition module 100 collects the current image frame, compares the current image fr...
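The key-frame comparison in Step 1 can be illustrated with a minimal bag-of-visual-words sketch. The source text is truncated before it states how similarity is scored, so everything here is an assumption made for illustration: each frame is taken to be already quantized into a list of visual-word IDs from the dictionary, and the score is a plain cosine similarity between word histograms. The names `bow_vector`, `similarity`, and `closest_keyframe` are hypothetical.

```python
from collections import Counter
from math import sqrt


def bow_vector(word_ids):
    """Build an L2-normalized histogram of visual-word IDs (assumed representation)."""
    counts = Counter(word_ids)
    norm = sqrt(sum(c * c for c in counts.values()))
    return {w: c / norm for w, c in counts.items()} if norm else {}


def similarity(a, b):
    """Cosine similarity between two normalized sparse histograms."""
    return sum(v * b.get(w, 0.0) for w, v in a.items())


def closest_keyframe(current_words, keyframes):
    """Return (key frame id, score) of the key frame most similar to the current frame."""
    query = bow_vector(current_words)
    best_id, best_score = None, -1.0
    for kf_id, kf_words in keyframes.items():
        score = similarity(query, bow_vector(kf_words))
        if score > best_score:
            best_id, best_score = kf_id, score
    return best_id, best_score


# Toy visual map: key frame id -> visual-word IDs seen in that key frame.
keyframes = {
    "kf_0": [1, 1, 2, 7],
    "kf_1": [3, 4, 4, 9],
    "kf_2": [3, 4, 5, 9],
}
best_id, best_score = closest_keyframe([3, 4, 4, 8], keyframes)
```

In a real system the word IDs would come from quantizing local feature descriptors against the trained dictionary, and a weighted score such as TF-IDF would usually replace the raw counts.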

Embodiment 2

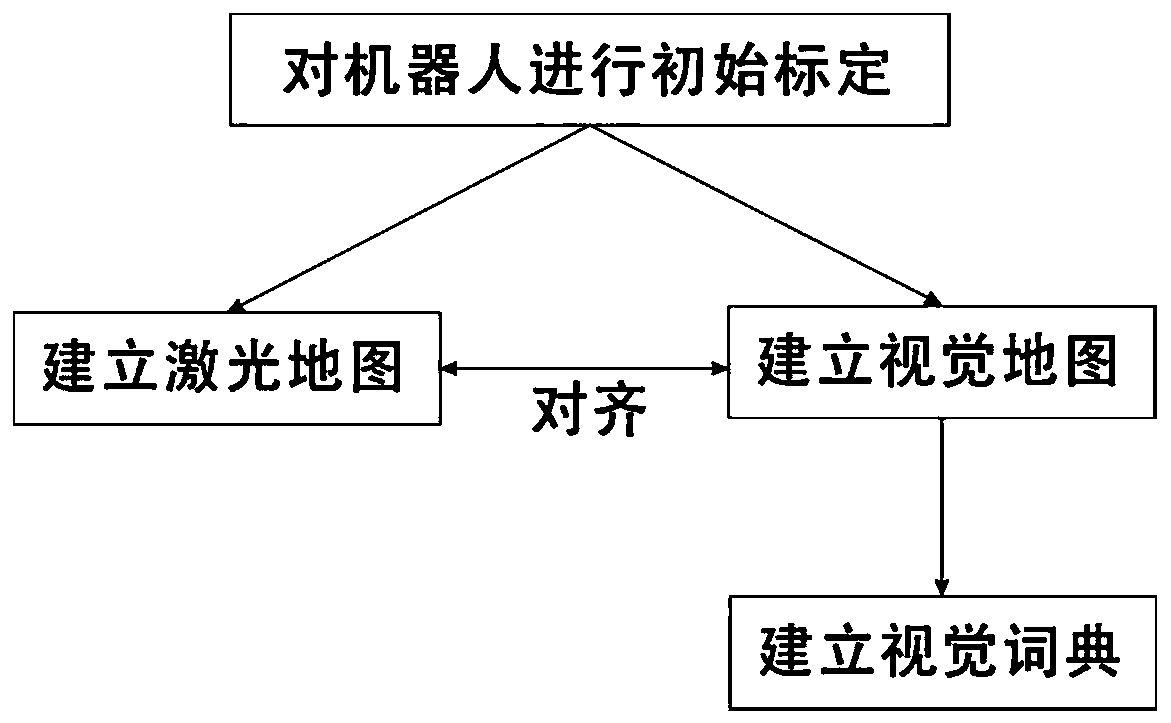

[0057] Referring to Figure 2, the second embodiment of the present invention differs from the previous embodiment in that it also includes the following steps.

[0058] Step 1: Perform initial calibration of the image acquisition module 100 on the robot. Specifically, this step performs calibration using a checkerboard calibration board and Zhang's calibration method to obtain the camera's intrinsic parameters and distortion coefficients. Those skilled in the art will understand that Zhang's calibration method is a camera calibration method based on a single planar checkerboard. The method requires only a printed checkerboard, the calibration procedure is simple, the camera and calibration board can be placed arbitrarily, and the calibration precision is high.
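To make concrete what the calibrated intrinsic parameters and distortion coefficients are used for, the following is a minimal sketch of the standard pinhole projection with two radial distortion terms. It is not the calibration procedure itself, and it is not taken from the patent: the function name `project_point`, the parameter names (`fx`, `fy`, `cx`, `cy`, `k1`, `k2`), and the numeric values are assumptions chosen for illustration.

```python
def project_point(point_cam, fx, fy, cx, cy, k1=0.0, k2=0.0):
    """Project a 3D point in the camera frame to pixel coordinates.

    fx, fy, cx, cy are the intrinsic parameters; k1, k2 are radial
    distortion coefficients (tangential terms are omitted in this sketch).
    """
    x, y, z = point_cam
    if z <= 0:
        raise ValueError("point must lie in front of the camera")
    # Normalized image coordinates.
    xn, yn = x / z, y / z
    # Radial distortion factor applied to the normalized coordinates.
    r2 = xn * xn + yn * yn
    radial = 1.0 + k1 * r2 + k2 * r2 * r2
    # Map to pixels with the intrinsic parameters.
    u = fx * xn * radial + cx
    v = fy * yn * radial + cy
    return u, v


# Hypothetical intrinsics for a 640x480 camera.
undistorted = project_point((1.0, 0.0, 2.0), 500.0, 500.0, 320.0, 240.0)
distorted = project_point((1.0, 0.0, 2.0), 500.0, 500.0, 320.0, 240.0, k1=-0.2)
```

Calibration estimates these parameters by finding the values that best reproduce the observed checkerboard corner positions across several views.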

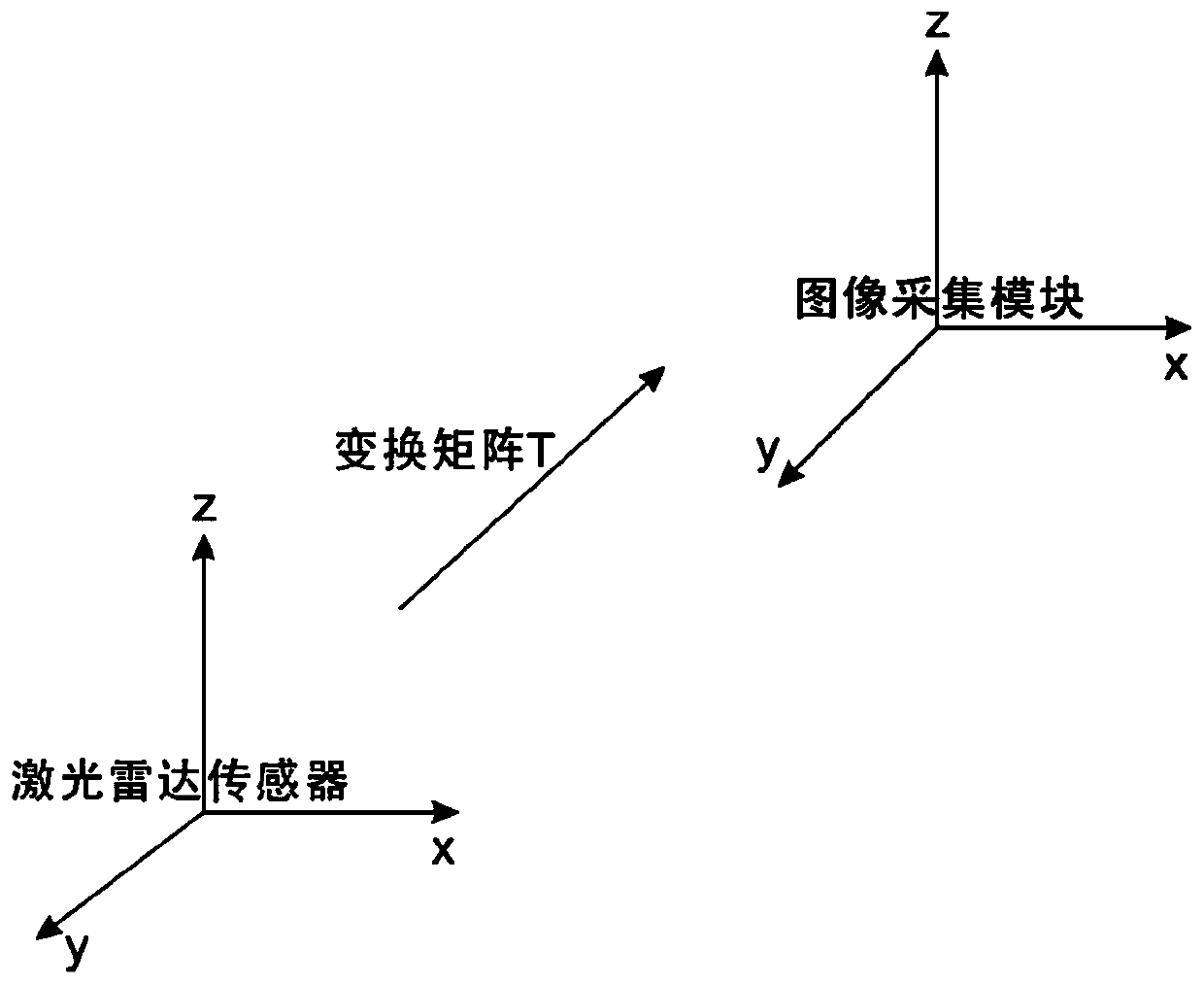

[0059] Referring to Figure 3, measure the coordinate system transformation matrix T between the laser radar sensor 200 and the image acquisition module 100; the transformation matrix ...
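As an illustration of how a transformation matrix between two sensor frames is typically applied, the sketch below treats T as a 4x4 homogeneous rigid transform. The source is truncated before it defines T, so the matrix form, the direction of the mapping (camera frame to laser radar frame), the yaw-only rotation, and the helper names `make_transform`, `apply_transform`, and `invert_transform` are all assumptions.

```python
import math


def make_transform(yaw_rad, translation):
    """Build a 4x4 homogeneous transform from a yaw rotation and a translation."""
    c, s = math.cos(yaw_rad), math.sin(yaw_rad)
    tx, ty, tz = translation
    return [
        [c, -s, 0.0, tx],
        [s, c, 0.0, ty],
        [0.0, 0.0, 1.0, tz],
        [0.0, 0.0, 0.0, 1.0],
    ]


def apply_transform(T, point):
    """Map a 3D point through T using homogeneous coordinates."""
    p = (point[0], point[1], point[2], 1.0)
    return tuple(sum(T[i][j] * p[j] for j in range(4)) for i in range(3))


def invert_transform(T):
    """Invert a rigid transform: rotation becomes R^T, translation becomes -R^T t."""
    Rt = [[T[j][i] for j in range(3)] for i in range(3)]
    t = [T[i][3] for i in range(3)]
    t_inv = [-sum(Rt[i][j] * t[j] for j in range(3)) for i in range(3)]
    return [Rt[i] + [t_inv[i]] for i in range(3)] + [[0.0, 0.0, 0.0, 1.0]]


# Hypothetical extrinsics: camera rotated 90 degrees about z, offset from the laser radar.
T = make_transform(math.pi / 2, (0.1, 0.0, 0.2))
p_lidar = apply_transform(T, (1.0, 0.0, 0.0))
p_back = apply_transform(invert_transform(T), p_lidar)
```

Inverting T gives the mapping in the opposite direction, so a pose estimated in one sensor frame can be expressed in the other.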

Embodiment 3

[0093] Referring to Figure 4, this embodiment proposes a visual dictionary-based fast robot relocation system, to which the visual dictionary-based fast robot relocation method of the above embodiments can be applied. Specifically, the system can be divided into a software module and a hardware module. The hardware module includes an image acquisition module 100 and a laser radar sensor 200: the image acquisition module 100 collects image information around the robot, and the laser radar sensor 200 collects distance information between surrounding objects and the robot. The image acquisition module 100 can use a camera to capture image information. The laser radar sensor 200 is a sensor that uses laser technology for measurement and is composed of a laser, a laser detector and a measurement circuit. It realizes non-contact long-distance measurement, fast speed, high precision, large ...
