Similar case recommendation method based on text content

A content-based technology for recommending similar cases, applied in text database clustering/classification, neural network learning methods, text database querying, etc. It addresses the problems of neglect, inapplicability, and mediocre model performance, thereby improving the model's effectiveness.

Examples

Embodiment 1

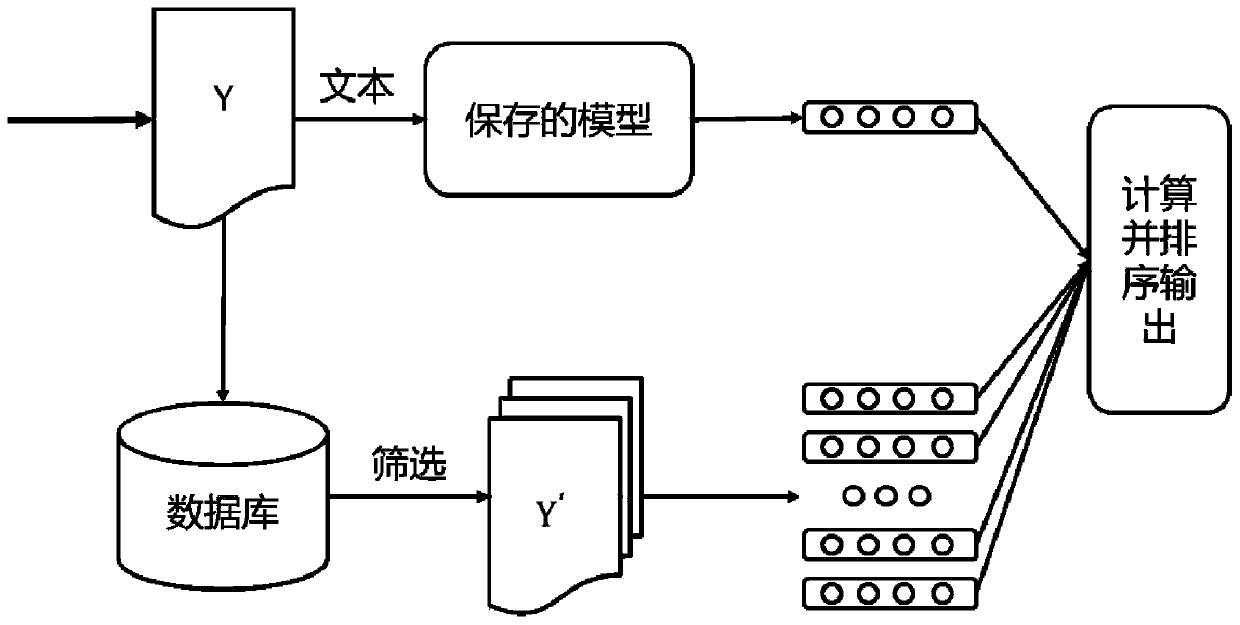

[0080] A method for recommending similar cases based on content includes the following steps:

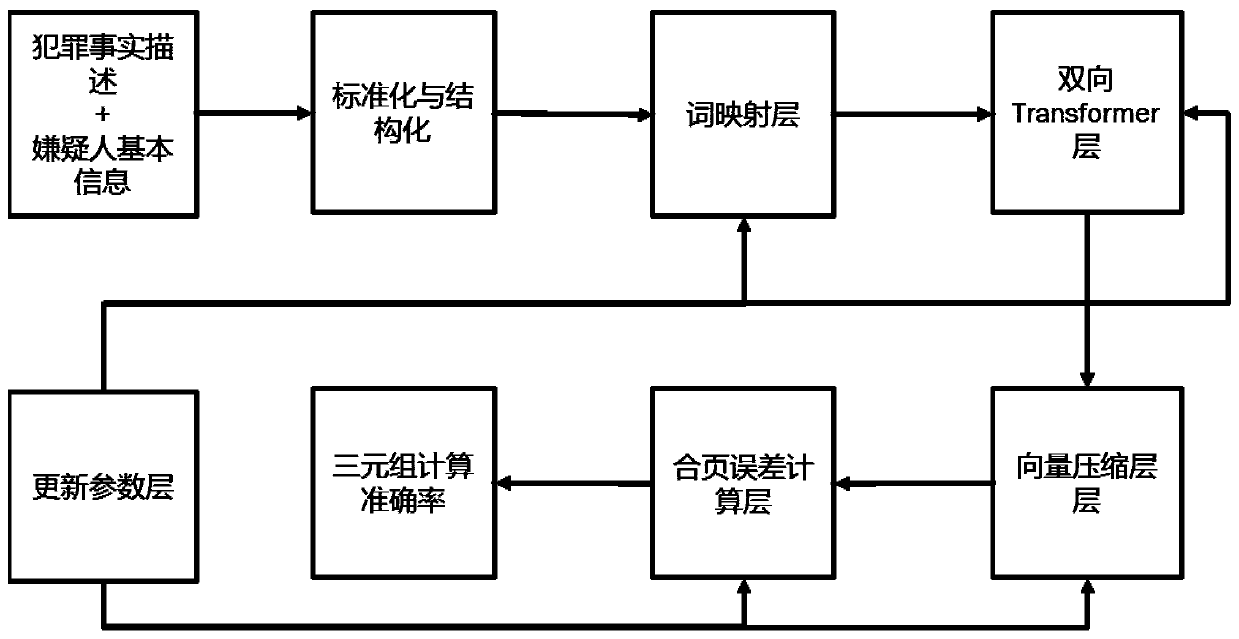

[0081] (1) Construct unstructured data into structured data:

[0082] Use rule matching to extract the required information, such as the description of the criminal facts and the basic information of the criminal suspects, so as to structure the data and construct a structured data set. The required information comprises the description of the criminal facts and the basic information of the suspects; the basic information of the suspects includes age, gender, and occupation before arrest;
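The rule-matching step can be sketched as below. This is a minimal illustration, not the method's actual rules: the source does not publish its patterns, so the regular expressions, the `CaseRecord` field names, and the English sample phrasing ("aged 35", "occupation before arrest:", "It was found that") are all hypothetical. Real case documents would need rules written against their own wording and layout.

```python
import re
from dataclasses import dataclass
from typing import Optional

# Hypothetical matching rules for English-language sample text; a real system
# would need rules written against the layout of its own case documents.
RULES = {
    "age": re.compile(r"\baged?\s+(\d{1,3})\b", re.IGNORECASE),
    "gender": re.compile(r"\b(male|female)\b", re.IGNORECASE),
    "occupation": re.compile(r"occupation before arrest:\s*([^,.;\n]+)", re.IGNORECASE),
    "facts": re.compile(r"it was found that\s*(.+)", re.IGNORECASE | re.DOTALL),
}


@dataclass
class CaseRecord:
    """One row of the structured data set (field names are illustrative)."""
    facts: Optional[str]
    age: Optional[int]
    gender: Optional[str]
    occupation: Optional[str]


def _first_match(field: str, text: str) -> Optional[str]:
    """Return the first captured group for a rule, or None if the rule does not fire."""
    match = RULES[field].search(text)
    return match.group(1).strip() if match else None


def extract(text: str) -> CaseRecord:
    """Turn one unstructured case text into a structured record via rule matching."""
    age = _first_match("age", text)
    gender = _first_match("gender", text)
    return CaseRecord(
        facts=_first_match("facts", text),
        age=int(age) if age is not None else None,
        gender=gender.lower() if gender is not None else None,
        occupation=_first_match("occupation", text),
    )
```

Fields whose rule does not fire are left as `None`, so incomplete documents still yield a record that can be filtered or repaired later.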

[0083] The structured data set is divided into a training data set and a test data set that do not overlap, in a ratio of 7:3; that is, the training data set accounts for 70% of the structured data set and the test data set accounts for the remaining 30%;
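A non-overlapping 7:3 split can be sketched as follows. The source states only the ratio and that the two sets do not overlap; shuffling with a fixed seed before cutting is an assumption added here for reproducibility, and `split_dataset` is a hypothetical helper name.

```python
import random
from typing import List, Sequence, Tuple, TypeVar

T = TypeVar("T")


def split_dataset(
    records: Sequence[T], train_ratio: float = 0.7, seed: int = 0
) -> Tuple[List[T], List[T]]:
    """Split records into disjoint training and test sets (default 7:3).

    Shuffling with a fixed seed is an assumption for reproducibility; the
    source specifies only the ratio and that the sets do not overlap.
    """
    if not 0.0 < train_ratio < 1.0:
        raise ValueError("train_ratio must be between 0 and 1")
    items = list(records)
    random.Random(seed).shuffle(items)
    cut = round(len(items) * train_ratio)
    # Slicing at a single cut point puts every record in exactly one set.
    return items[:cut], items[cut:]
```

With 100 records this yields 70 training and 30 test records.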

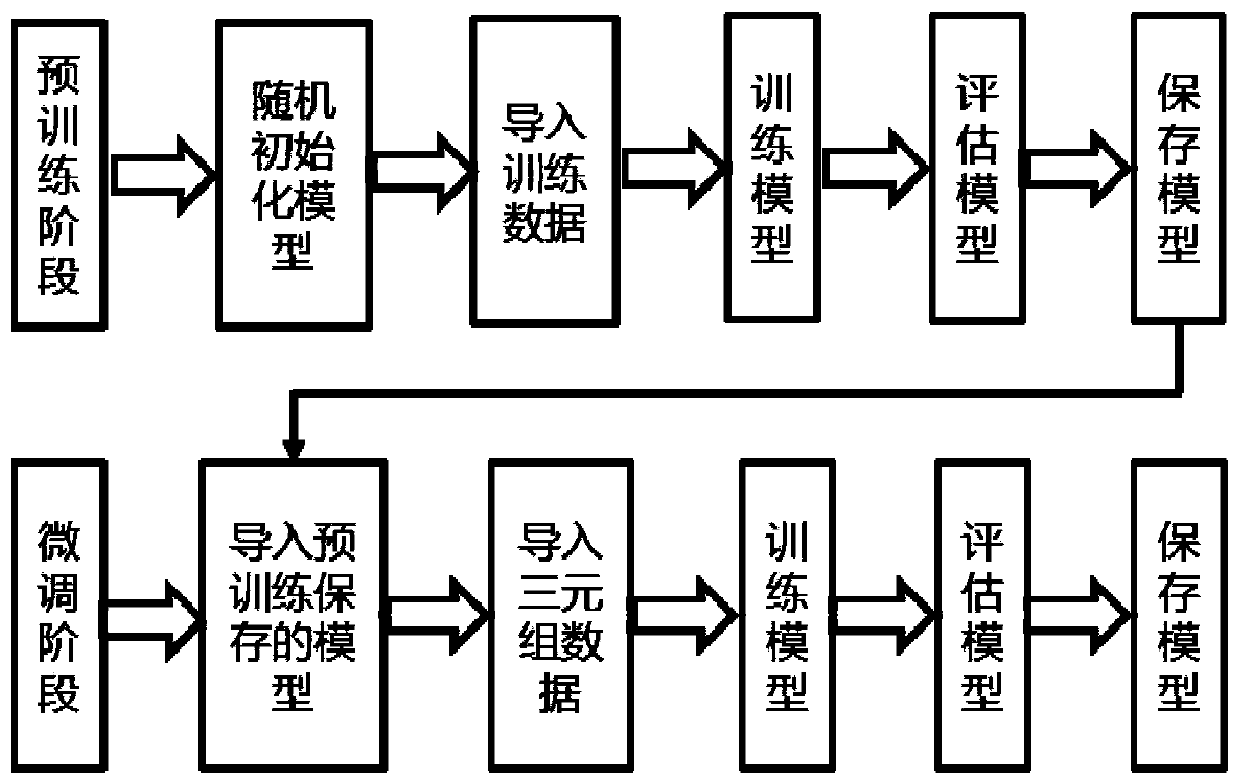

[0084] (2) Model pre-training:

[0085] The model includes sequentially connected word map...

Embodiment 2

[0103] This embodiment follows the method for recommending similar cases based on content described in Embodiment 1, with the following difference:

[0104] In step (2), the basic structure of the vector compression layer is a self-attention structure, as shown in formulas (I) and (II):

[0105] $$A = \mathrm{Attention}(Q, K, V) = \mathrm{sigmoid}\left(Q^{T} K V^{T}\right) \tag{I}$$

[0106] $$R = \mathrm{Reduce}(A,\ \mathrm{axis} = -2) \tag{II}$$

[0107] Formula (I) represents the attention structure. Q, K, and V are the outputs of the bidirectional transformer layer, i.e., the inputs of the vector compression layer. Q, K, and V abbreviate query, key, and value, and refer respectively to the query (request) matrix, the key matrix, and the value (target) matrix; in the present invention, all three are the same matrix. When Q, K, and V are the same input, the structure is called self-attention. A, the result of the self-attention structure, is the attention matrix of each column vector (i.e., each word vector) with respect to all other column vectors in the input matrix (the input is a two-dimensional ...
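Read literally, formulas (I) and (II) fix the shapes involved. Take Q = K = V = X, a d × n matrix whose n columns are word vectors. Then Q^T K is n × n (word-to-word scores), multiplying by V^T gives an n × d matrix A, and reducing over axis -2 (the n word rows) leaves a single d-dimensional vector R. The plain-Python sketch below follows that reading. Two assumptions are flagged: sigmoid is applied element-wise to the whole product, exactly as formula (I) is parenthesized, and `Reduce` is taken as a mean (a sum is offered as an option), because the source does not name the reduction. The helper names are hypothetical.

```python
import math
from typing import List, Tuple

Matrix = List[List[float]]


def transpose(m: Matrix) -> Matrix:
    return [list(col) for col in zip(*m)]


def matmul(left: Matrix, right: Matrix) -> Matrix:
    right_cols = transpose(right)
    return [[sum(a * b for a, b in zip(row, col)) for col in right_cols] for row in left]


def sigmoid(x: float) -> float:
    """Logistic function, written to avoid overflow for large |x|."""
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    z = math.exp(x)
    return z / (1.0 + z)


def attention(q: Matrix, k: Matrix, v: Matrix) -> Matrix:
    """Formula (I): A = sigmoid(Q^T K V^T), sigmoid applied element-wise.

    With d x n inputs, Q^T K is n x n and the product with V^T is n x d.
    """
    scores = matmul(matmul(transpose(q), k), transpose(v))
    return [[sigmoid(s) for s in row] for row in scores]


def reduce_axis_minus2(a: Matrix, op: str = "mean") -> List[float]:
    """Formula (II): collapse axis -2 (the n rows of A), leaving a length-d vector.

    The reduction operator is an assumption; the source only says "Reduce".
    """
    column_sums = [sum(col) for col in zip(*a)]
    if op == "sum":
        return column_sums
    if op == "mean":
        return [s / len(a) for s in column_sums]
    raise ValueError(f"unsupported reduction: {op!r}")


def compress(x: Matrix, op: str = "mean") -> Tuple[Matrix, List[float]]:
    """Self-attention compression: Q = K = V = x, a d x n matrix of column word vectors."""
    a = attention(x, x, x)
    return a, reduce_axis_minus2(a, op)
```

Under this reading the output length is d regardless of n, so texts of different lengths are compressed to vectors of the same size.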

© 2025 PatSnap. All rights reserved.