Method for producing high-precision map based on live-action three-dimensional model

The method applies a live-action three-dimensional model to high-precision map production, in the fields of 3D modeling, image data processing, and character and pattern recognition. It addresses the low planar precision of DOM road networks, their inability to reflect elevation information, and the high manpower they consume. Its stated effects are overcoming the difficult acquisition and low accuracy of the roll angle, improving the accuracy of image recognition and segmentation, and increasing automation.

AI Technical Summary

Problems solved by technology

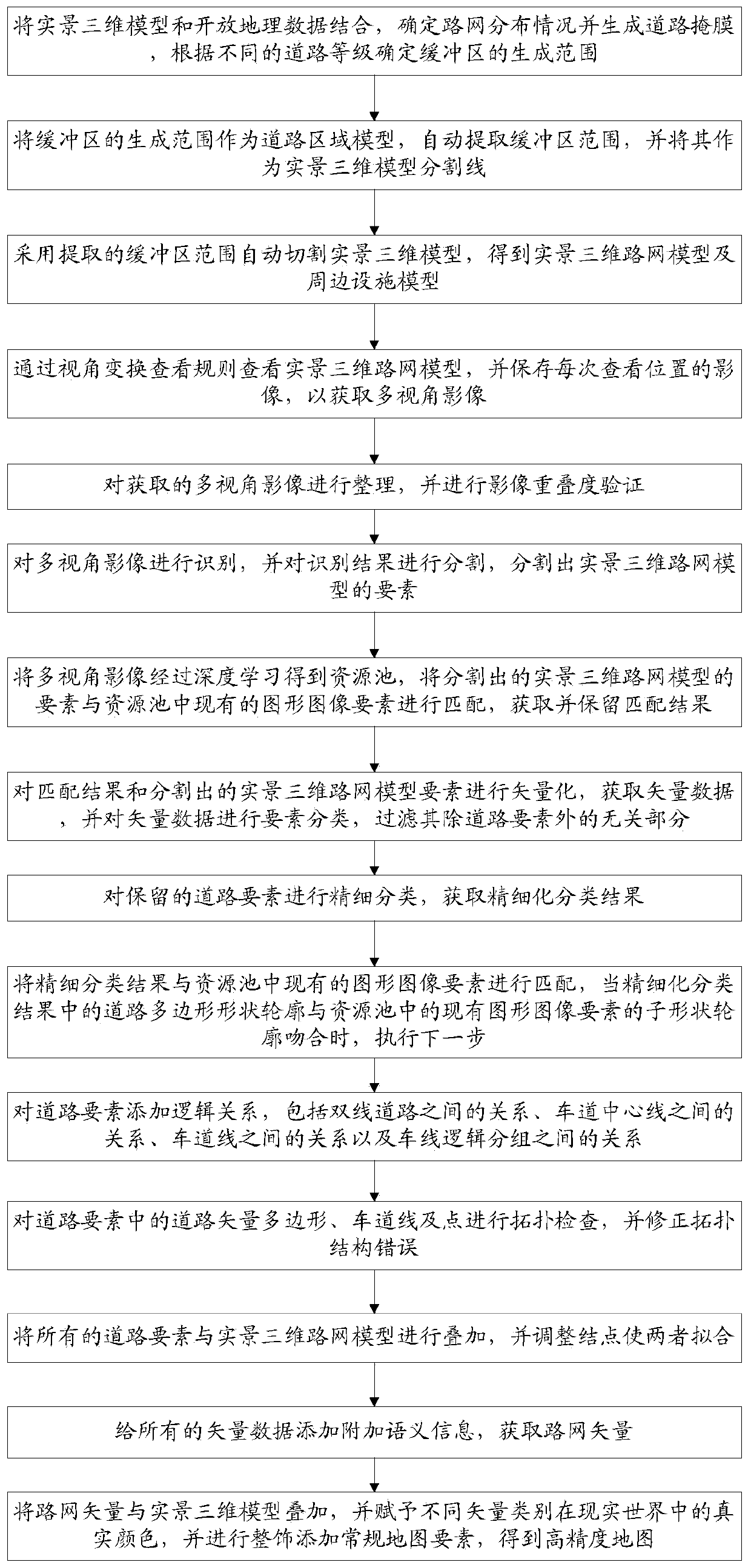

Method used

Image

Examples

Example I

[0077] Each pixel in the recognition result is assigned a semantic label and an instance ID;

[0078] Pixels with the same semantic label and instance ID are grouped into the same object, thereby segmenting the elements of the real-scene 3D road network model.
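The grouping in paragraphs [0077] and [0078] can be illustrated with a minimal sketch. It assumes the recognition result is available as two equally sized 2D grids, one of semantic labels and one of instance IDs; the function name `group_pixels` and the sample labels are hypothetical and do not come from the patent.

```python
from collections import defaultdict


def group_pixels(semantic, instance):
    """Group pixel coordinates by their (semantic label, instance ID) pair.

    `semantic` and `instance` are assumed to be 2D lists of identical shape.
    Returns a dict mapping (label, instance_id) to a list of (row, col) pixels.
    """
    if len(semantic) != len(instance):
        raise ValueError("semantic and instance grids must have the same height")

    objects = defaultdict(list)
    for r, (sem_row, inst_row) in enumerate(zip(semantic, instance)):
        if len(sem_row) != len(inst_row):
            raise ValueError("semantic and instance grids must have the same width")
        for c, (label, inst_id) in enumerate(zip(sem_row, inst_row)):
            # Pixels sharing both label and instance ID belong to one object.
            objects[(label, inst_id)].append((r, c))
    return dict(objects)


# Hypothetical 2x3 recognition result: two lane instances and one sign.
semantic = [
    ["lane", "lane", "sign"],
    ["lane", "lane", "sign"],
]
instance = [
    [1, 2, 1],
    [1, 2, 1],
]

objects = group_pixels(semantic, instance)
for key, pixels in objects.items():
    print(key, pixels)
```

Keying on the pair rather than on the instance ID alone keeps two objects of different classes apart even when their instance IDs coincide, as with `("lane", 1)` and `("sign", 1)` in the sample data.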

[0079] In this embodiment, the resource pool contains the collection of all road elements, including their graphic images and individual attributes. The elements of the segmented real-scene 3D road network model are matched one by one against the existing graphic image elements in the resource pool. The matching rule is as follows: when the overlap ratio between the segmentation window predicted from the real-scene 3D road network model and the original image marker window in the resource pool is greater than 0.5, the two are considered a match, and the matching result is retained.
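The matching rule in paragraph [0079] can be sketched as follows. The patent does not define how the overlap ratio is computed; this sketch assumes it is intersection over union (IoU) of axis-aligned windows given as `(x_min, y_min, x_max, y_max)`. Choosing the best-scoring pool element when several exceed the threshold is also an assumption, and the names `overlap_ratio`, `match_elements`, and the sample element IDs are hypothetical.

```python
def overlap_ratio(a, b):
    """Intersection over union of two boxes (x_min, y_min, x_max, y_max).

    Assumption: the patent's "overlap ratio" is taken to be IoU.
    """
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def match_elements(segments, pool, threshold=0.5):
    """Match each segmented window to a resource-pool window.

    A pair matches only when its overlap ratio is strictly greater than
    `threshold`; among qualifying candidates the highest ratio is kept.
    """
    matches = {}
    for seg_id, seg_box in segments.items():
        best_id, best_ratio = None, threshold
        for pool_id, pool_box in pool.items():
            ratio = overlap_ratio(seg_box, pool_box)
            if ratio > best_ratio:
                best_id, best_ratio = pool_id, ratio
        if best_id is not None:
            matches[seg_id] = best_id
    return matches


# Hypothetical windows: seg_a overlaps lane_arrow strongly, seg_b barely
# touches stop_line and therefore stays unmatched.
segments = {"seg_a": (0, 0, 10, 10), "seg_b": (20, 20, 30, 30)}
pool = {"lane_arrow": (1, 1, 10, 10), "stop_line": (28, 28, 40, 40)}

matches = match_elements(segments, pool)
print(matches)
```

In the sample data, `seg_a` and `lane_arrow` share an intersection of 81 over a union of 100, which exceeds 0.5, while `seg_b` and `stop_line` share 4 over 240 and are rejected.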

[0080] In this embodiment, in step S8, the road elements are classified into three types:

[0081] Lane model; i...
